
Jetson Nano JETSON-NANO-LITE-DEV-KIT User Guide

Notice

If you buy the DEV KIT from Waveshare, JetPack 4.6 has already been flashed to the eMMC of the Jetson Nano, and SDMMC3 (the SD card interface) has been enabled. If you want to boot from the SD card, follow this manual to modify the startup path.

If you have special requirements for the factory image version, please contact Waveshare customer service to confirm.

Introduction

The JETSON NANO DEV KIT from Waveshare is built around the Jetson Nano and Jetson Xavier NX AI computing modules. It has almost the same I/O, size, and thickness as the official Jetson Nano Developer Kit (B01), which makes upgrading the core module convenient. With the processing power of the core module, it is suitable for image classification, object detection, segmentation, speech processing, and many other AI projects.

- Compared with the official B01 kit, JETSON-NANO-DEV-KIT removes the TF card slot from the Nano core module and adds a 16GB eMMC chip, which provides stable reads and writes.

- The DC power jumper has been removed, so you can plug the DC power supply directly into the barrel jack without first shorting the J48 header with a jumper cap.

- The Waveshare JETSON-NANO-DEV-KIT supports booting from a USB flash drive and also from a TF card, thanks to the TF card slot on the carrier board. Reads and writes are stable, and it works almost the same as the official B01 core module with its own TF card slot.

Compared with the conventional kit, JETSON-NANO-LITE-DEV-KIT simplifies the carrier board interfaces: the four USB 3.0 ports are reduced to one USB 3.0 port plus two USB 2.0 ports, and the two CSI camera connectors are reduced to one. In addition, the Lite carrier board adds power and reset buttons. Its appearance and interfaces are compatible with the official Jetson Nano 2GB Developer Kit, making it suitable for users who do not need as many interfaces. The Lite kit still uses the 4GB Jetson Nano module.

- JETSON-NANO-LITE-DEV-KIT also has a TF card slot for convenient storage expansion.

- Compared with JETSON-NANO-DEV-KIT, the Lite kit has two additional onboard buttons, which control the mainboard power and system reset respectively.

- The JETSON-NANO-LITE-DEV-KIT carrier board is largely compatible with the official Jetson Nano 2GB Developer Kit, making it suitable for users who do not need many carrier board interfaces but want the performance of the 4GB Nano module.

Jetson Nano Module Parameters

| Item | Specification |
|---|---|
| GPU | NVIDIA Maxwell™ architecture with 128 NVIDIA CUDA® cores, 0.5 TFLOPS (FP16) |
| CPU | Quad-core ARM® Cortex®-A57 MPCore processor |
| Memory | 4 GB 64-bit LPDDR4, 1600 MHz, 25.6 GB/s |
| Storage | 16 GB eMMC 5.1 flash |
| Video encode | 250 MP/s, 1 x 4K @ 30 fps (HEVC) |
| Video decode | 500 MP/s, 1 x 4K @ 60 fps (HEVC) |
| Camera | 12 lanes (3x4 or 4x2) MIPI CSI-2 D-PHY 1.1 (18 Gbps) |
| Connectivity | Wi-Fi requires an external chip; 10/100/1000BASE-T Ethernet |
| Display | eDP 1.4, DSI (1 x 2), 2 simultaneous |
| UPHY | 1 x PCIe (x1/2/4), 1 x USB 3.0, 3 x USB 2.0 |
| I/O | 3 x UART, 2 x SPI, 2 x I2S, 4 x I2C, multiple GPIOs |

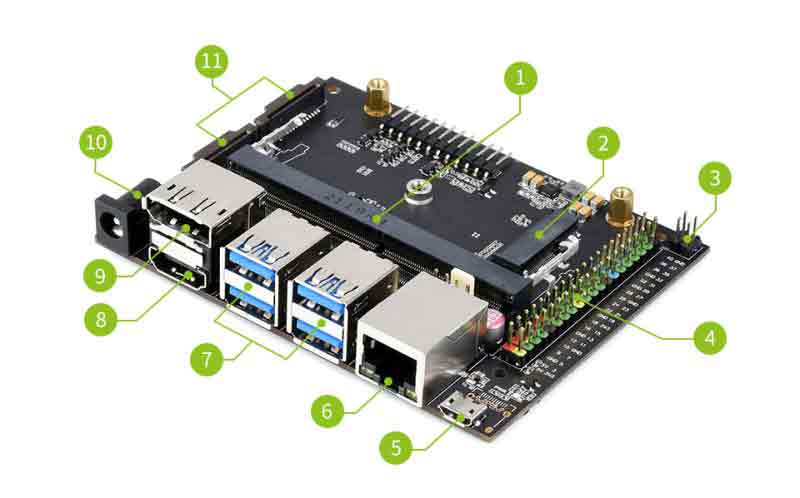

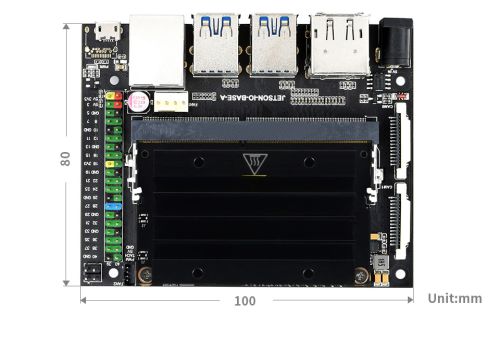

JETSON-IO-BASE-A Onboard Resources

- Core module socket: for Jetson Nano module

- Micro SD card slot: for external SD card

- M.2 Key E connector: for AC8265 wireless network card

- PoE pins: PoE module is not included

- 40PIN GPIO header: compatible with the Raspberry Pi pinout, convenient for Raspberry Pi peripherals (software support is required)

- Micro USB port: for 5V power input or for USB data transmission

- Gigabit Ethernet port: 10/100/1000Base-T auto-negotiation, supports PoE when an external PoE module is connected

- 4 x USB 3.0 ports

- HDMI output port

- DisplayPort connector

- DC jack: for 5V power input

- 2 x MIPI CSI camera connectors

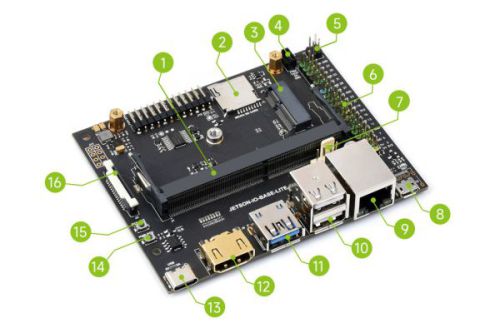

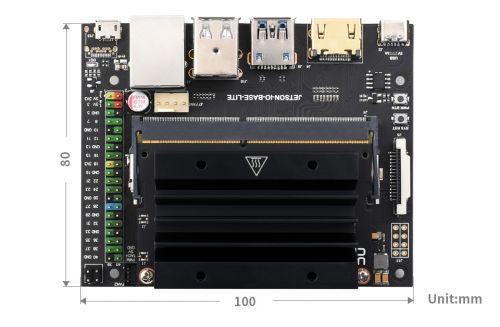

JETSON-IO-BASE-LITE Onboard Resources

- Core module socket

- TF card slot

- M.2 Key E interface

- 1.25mm fan header

- PoE pins: PoE module is not included

- 40PIN GPIO header

- 2.54mm fan header

- Micro USB interface: for USB data transfer / system programming

- Gigabit Ethernet port: 10/100/1000Base-T auto-negotiation, supports PoE power supply when a PoE module is connected

- 2x USB 2.0 ports

- USB 3.2 Gen1 port

- HDMI port

- USB Type-C port: for 5V 3A power input

- PWR BTN: power button

- SYS RST: reset button

- MIPI CSI camera connector

Dimension

- JETSON-NANO-DEV-KIT

- JETSON-NANO-LITE-DEV-KIT

User Guide

System Installation

System Environment & eMMC System Programming

- Flashing the system requires an Ubuntu 18.04 host or virtual machine and uses the SDK Manager tool.

- In this manual, Ubuntu 18.04 is installed in a VMware 16 virtual machine; this setup is for reference only.

- If your Ubuntu virtual machine or host is not 18.04 and you can accept formatting an SD card or USB flash drive, refer to Method Two: Directly Download JetPack.

Method One: Adopt SDK Manager Tool

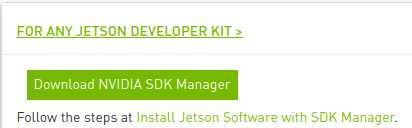

- Download SDK Manager: open a browser, go to the following URL, and click to download SDK Manager.

https://developer.nvidia.com/zh-cn/embedded/jetpack

You need to register an NVIDIA account and log in before you can download it. If you don't know how to register, refer to NVIDIA-acess.

- After the download is complete, go to the "Downloads" folder and install the package by entering the following in a terminal:

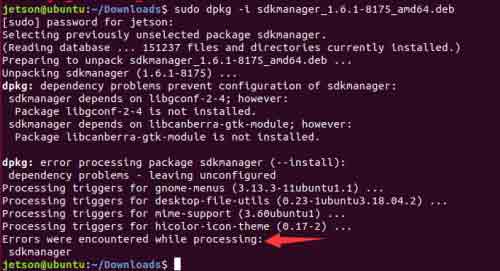

sudo dpkg -i sdkmanager_1.6.1-8175_amd64.deb

(Replace the file name with the version you downloaded.)

- If the installation reports that dependency files cannot be found, enter the following command to fix the problem:

sudo apt --fix-broken install

- Open a terminal on the Ubuntu computer and run sdkmanager to launch SDK Manager.

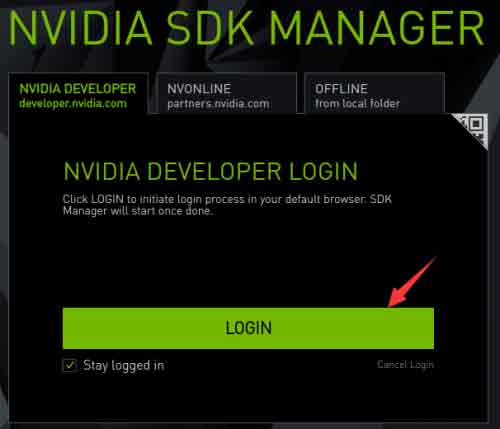

- Click LOGIN. A login page opens in the browser; enter the email address and password you registered earlier.

- At this point, we have successfully logged in to the SDK Manager.

Install image on EMMC

- Jetson Nano board

- Ubuntu 18.04 virtual machine (or host computer)

- 5V/4A power adapter

- Jumper caps (or Dupont wire)

- USB data cable (Micro USB port, can transfer data)

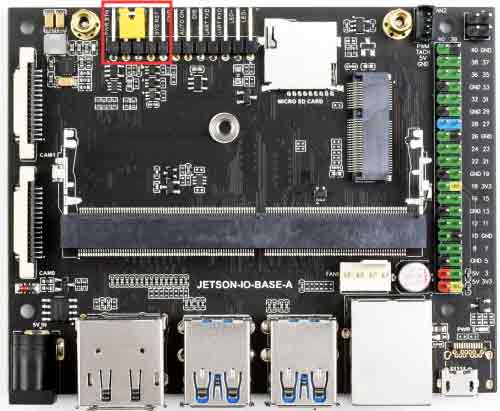

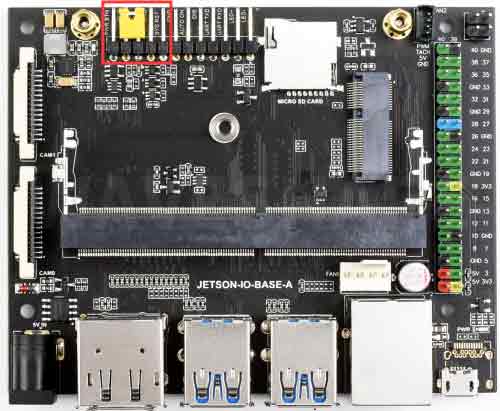

- Use jumper caps or Dupont wires to short-circuit the FC REC and GND pins, as shown in the figure below, at the bottom of the core board.

- Connect the DC power supply to the circular power port and wait a moment.

- Connect the Micro USB port of the Jetson Nano to the Ubuntu host with a USB cable (note that it is a data cable).

- Open the Ubuntu computer terminal and run the SDK Manager to open the software.

- Using a virtual machine requires setting up the device to connect to the virtual machine.

- Log in to your account, if the Jetson Nano is recognized normally, SDK Manager will detect and prompt options.

Equipment Preparation

Hardware configuration (enter recovery mode)

System Programming

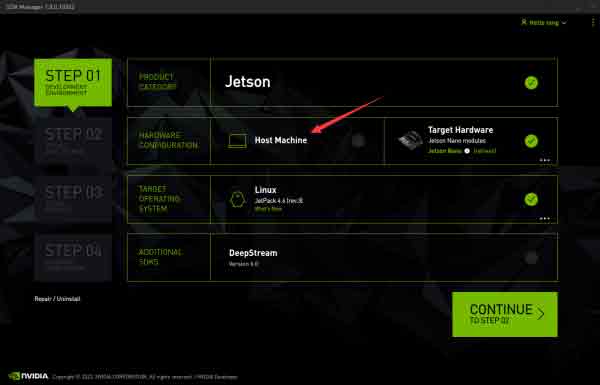

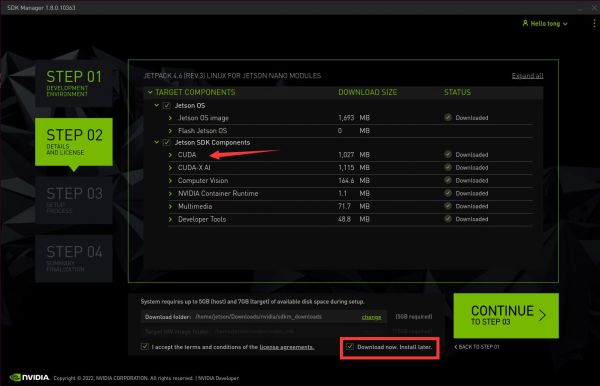

- In the JetPack options (JetPack 4.6 is used as the example here), uncheck Host Machine and click CONTINUE.

- Select Jetson OS and uncheck Jetson SDK Components. Accept the license agreement and click CONTINUE.

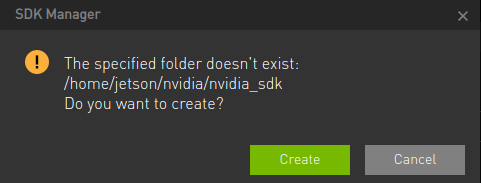

Note: Checking both will cause the download to fail.

- The download path defaults to the HW Imager folder. Select Create and the path will be created automatically.

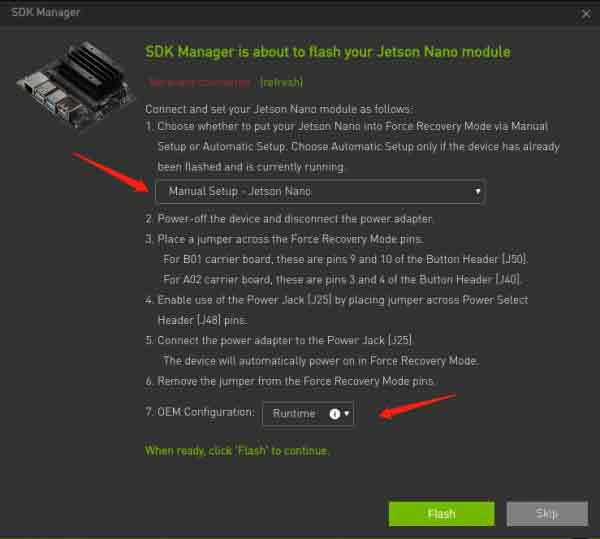

- Starting with JetPack 4.6.1, SDK Manager shows a pre-config window when flashing the system; fill it in as shown in the figure below.

Note: The Pre-Config option under OEM Configuration sets the username and password in advance, while Runtime sets them during the Jetson Nano's first-boot setup.

- Enter the password of the virtual machine when prompted, then wait for the download and flashing to finish.

- After flashing finishes, remove the jumper cap from the carrier board, connect a monitor, power on again, and follow the prompts to complete the initial setup (if you chose Pre-Config, the system boots directly into the desktop).

Method Two: Directly Download JetPack

- Open a terminal on the Ubuntu virtual machine or host and create a new folder:

mkdir ~/sources_nano
cd ~/sources_nano

- Download the following two files:

https://developer.nvidia.com/embedded/l4t/r32_release_v7.2/t210/jetson-210_linux_r32.7.2_aarch64.tbz2

https://developer.nvidia.com/embedded/l4t/r32_release_v7.2/t210/tegra_linux_sample-root-filesystem_r32.7.2_aarch64.tbz2

- Move the downloaded files into the folder (use the Tab key to auto-complete the file names, since the names on disk may differ from those shown here):

sudo mv ~/Downloads/Jetson-210_Linux_R32.7.2_aarch64.tbz2 ~/sources_nano/
sudo mv ~/Downloads/Tegra_Linux_Sample-Root-Filesystem_R32.7.2_aarch64.tbz2 ~/sources_nano/

- Extract the packages and apply the NVIDIA binaries (if an error occurs, follow the prompts and run the command again):

cd ~/sources_nano
sudo tar -xjf Jetson-210_Linux_R32.7.2_aarch64.tbz2
cd Linux_for_Tegra/rootfs/
sudo tar -xjf ../../Tegra_Linux_Sample-Root-Filesystem_R32.7.2_aarch64.tbz2
cd ..
sudo ./apply_binaries.sh
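Because the file names on disk may not match the capitalization shown in these commands (hence the Tab-completion advice), it can help to confirm that both archives are present before running the tar commands. The sketch below is a hypothetical helper, not an NVIDIA tool; it assumes POSIX sh and a find that supports -iname (GNU findutils does), and it matches the R32.7.2 names case-insensitively:

```shell
#!/bin/sh
# find_l4t_archives DIR (hypothetical helper): print the paths of the L4T BSP
# and sample rootfs archives in DIR, matching names case-insensitively.
# Returns 1 and prints a message if either archive is missing.
find_l4t_archives() {
  dir="$1"
  bsp=$(find "$dir" -maxdepth 1 -iname 'jetson-210_linux_r32.7.2_aarch64.tbz2' | head -n 1)
  rootfs=$(find "$dir" -maxdepth 1 -iname 'tegra_linux_sample-root-filesystem_r32.7.2_aarch64.tbz2' | head -n 1)
  if [ -z "$bsp" ] || [ -z "$rootfs" ]; then
    echo "missing L4T archive(s) in $dir" >&2
    return 1
  fi
  printf '%s\n%s\n' "$bsp" "$rootfs"
}

# Demo with empty stand-in files in a scratch directory.
# On the Ubuntu host you would run: find_l4t_archives ~/sources_nano
demo_dir=$(mktemp -d)
touch "$demo_dir/Jetson-210_Linux_R32.7.2_aarch64.tbz2"
find_l4t_archives "$demo_dir" || echo "rootfs archive not downloaded yet"
touch "$demo_dir/Tegra_Linux_Sample-Root-Filesystem_R32.7.2_aarch64.tbz2"
find_l4t_archives "$demo_dir"
```

The printed paths can then be passed to tar -xjf, which avoids retyping names that differ only in capitalization.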

- Jetson Nano board

- Ubuntu virtual machine (or host computer)

- 5V/4A power adapter

- Jumper cap (or DuPont wire)

- USB data cable (Micro USB, must support data transfer)

- Short the FC REC and GND pins, located under the core module, with a jumper cap or DuPont wire, as shown below.

- Connect the DC power supply to the barrel jack and wait a moment.

- Connect the Jetson Nano's Micro USB port to the Ubuntu host with a USB cable (it must be a data cable).

- To flash the system, the Jetson Nano must be in recovery mode and connected to the Ubuntu computer. Then run:

cd ~/sources_nano/Linux_for_Tegra
sudo ./flash.sh jetson-nano-emmc mmcblk0p1

- After flashing finishes, remove the jumper cap from the carrier board, connect a monitor, power on again, and follow the prompts to complete the initial setup (if you chose Pre-Config, the system boots directly into the desktop).

- The system always starts from the eMMC in the module first; the boot configuration on the eMMC then directs the boot to the USB flash drive or TF card.

- The system on the module's eMMC is flashed with SDK Manager in the virtual machine; a TF card system can be written with Win32DiskImager; and the system on a USB flash drive is created with the help of the virtual machine.

- Before booting from a USB flash drive or TF card, make sure the eMMC system has been flashed.

- Jetson Nano board

- Ubuntu 18.04 virtual machine (or host computer)

- 5V/4A power adapter

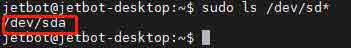

- Connect the USB flash drive to the Jetson Nano, open a terminal on the Jetson Nano, and check the drive's device name (for example, sda):

ls /dev/sd*

- Format the USB flash drive (this erases all data on it).

sudo mkfs.ext4 /dev/sda

Afterwards, only sda remains, as shown below:

- Modify the startup path.

sudo vi /boot/extlinux/extlinux.conf

Find the line APPEND ${cbootargs} quiet root=/dev/mmcblk0p1 rw rootwait rootfstype=ext4 console=ttyS0,115200n8 console=tty0, change mmcblk0p1 to sda, and save the file.

- Mount the USB flash drive.

sudo mount /dev/sda /mnt

- Copy the system to the USB flash drive (the command prints nothing while it runs; please wait patiently).

sudo cp -ax / /mnt

- After the copy is complete, unmount the USB flash drive (do not unplug it).

sudo umount /mnt/

- Restart the system.

sudo reboot
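If you prefer not to edit extlinux.conf by hand in vi, the root= change in the steps above can be scripted. The following is a minimal sketch, not a Waveshare tool: set_root_device is a hypothetical helper name, and it assumes GNU sed (for in-place editing with -i) and an APPEND line that still contains the default root=/dev/mmcblk0p1. It keeps a .bak copy so you can restore the original if the new root device does not boot:

```shell
#!/bin/sh
# set_root_device CONF NEWDEV (hypothetical helper): change root=/dev/mmcblk0p1
# in CONF to root=NEWDEV, saving the original file as CONF.bak first.
set_root_device() {
  conf="$1"
  newdev="$2"
  if ! grep -q 'root=/dev/mmcblk0p1' "$conf"; then
    echo "root=/dev/mmcblk0p1 not found in $conf; leaving it unchanged" >&2
    return 1
  fi
  cp "$conf" "$conf.bak"
  # '|' is the sed delimiter because the device paths contain '/'
  sed -i "s|root=/dev/mmcblk0p1|root=$newdev|" "$conf"
}

# Demo on a scratch file that mimics the APPEND line quoted above.
demo_conf=$(mktemp)
printf '%s\n' 'LABEL primary' \
  '      APPEND ${cbootargs} quiet root=/dev/mmcblk0p1 rw rootwait rootfstype=ext4 console=ttyS0,115200n8 console=tty0' \
  > "$demo_conf"
set_root_device "$demo_conf" /dev/sda
grep APPEND "$demo_conf"
```

On the Jetson Nano itself, the call would be made as root against /boot/extlinux/extlinux.conf, with /dev/sda for the USB flash drive or /dev/mmcblk1 for the SD card procedure. Refusing to edit when the default device is absent avoids silently changing a file that was already modified.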

- Jetson Nano board.

- 5V 4A power adapter.

- Install the device tree compiler (dtc) on the virtual machine.

sudo apt-get install device-tree-compiler

- Go to the kernel/dtb folder under the HW Imager path and decompile the .dtb file into a .dts source file. If you are using SDK Manager, use the following commands (adjust the JetPack folder name for other JetPack versions):

cd ~/nvidia/nvidia_sdk/JetPack_4.6_Linux_JETSON_NANO_TARGETS/Linux_for_Tegra/kernel/dtb
dtc -I dtb -O dts -o tegra210-p3448-0002-p3449-0000-b00.dts tegra210-p3448-0002-p3449-0000-b00.dtb

If you are using the resource package (Method Two), use the following commands:

cd ~/sources_nano/Linux_for_Tegra/kernel/dtb
dtc -I dtb -O dts -o tegra210-p3448-0002-p3449-0000-b00.dts tegra210-p3448-0002-p3449-0000-b00.dtb

- Modify the device tree.

sudo vim tegra210-p3448-0002-p3449-0000-b00.dts

- Find the sdhci@700b0400 node, change status = "disabled" to status = "okay", and add the following TF card properties below it:

cd-gpios = <0x5b 0xc2 0x0>;
sd-uhs-sdr104;
sd-uhs-sdr50;
sd-uhs-sdr25;
sd-uhs-sdr12;
no-mmc;
uhs-mask = <0xc>;

- Compile dtb files.

dtc -I dts -O dtb -o tegra210-p3448-0002-p3449-0000-b00.dtb tegra210-p3448-0002-p3449-0000-b00.dts

- To flash the system, the Jetson Nano must be in recovery mode and connected to the Ubuntu computer.

If you are using SDK Manager, use the following commands:

cd ~/nvidia/nvidia_sdk/JetPack_4.6_Linux_JETSON_NANO_TARGETS/Linux_for_Tegra
sudo ./flash.sh jetson-nano-emmc mmcblk0p1

If you are using the resource package, use the following commands:

cd ~/sources_nano/Linux_for_Tegra
sudo ./flash.sh jetson-nano-emmc mmcblk0p1
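The manual vim edit of the sdhci@700b0400 node can also be scripted. The sketch below is an illustration under stated assumptions, not a Waveshare or NVIDIA tool: enable_sdmmc3 is a hypothetical helper; it assumes the decompiled .dts contains status = "disabled"; directly inside that node and that none of the added properties are already present; and it copies the property values listed above verbatim. The 0x5b value in cd-gpios appears to be the GPIO controller's phandle, which can differ between device-tree builds, so check it against your own .dts. awk tracks brace depth so that only the sdhci@700b0400 node is modified:

```shell
#!/bin/sh
# enable_sdmmc3 DTS (hypothetical helper): in the sdhci@700b0400 node of DTS,
# change status = "disabled" to "okay" and add the TF card properties.
enable_sdmmc3() {
  awk '
    /sdhci@700b0400 [{]/ { in_node = 1; depth = 0 }
    in_node {
      # gsub returns the number of matches, so this tracks brace nesting depth
      depth += gsub(/[{]/, "{") - gsub(/[}]/, "}")
    }
    in_node && depth == 1 && /status = "disabled";/ {
      sub(/"disabled"/, "\"okay\"")
      print
      print "\t\t\tcd-gpios = <0x5b 0xc2 0x0>;"
      print "\t\t\tsd-uhs-sdr104;"
      print "\t\t\tsd-uhs-sdr50;"
      print "\t\t\tsd-uhs-sdr25;"
      print "\t\t\tsd-uhs-sdr12;"
      print "\t\t\tno-mmc;"
      print "\t\t\tuhs-mask = <0xc>;"
      next
    }
    { print }
    in_node && depth == 0 { in_node = 0 }
  ' "$1" > "$1.new" && mv "$1.new" "$1"
}

# Demo on a small stand-in for the decompiled device tree.
demo_dts=$(mktemp)
cat > "$demo_dts" <<'EOF'
/ {
    sdhci@700b0400 {
        compatible = "nvidia,tegra210-sdhci";
        status = "disabled";
    };
    sdhci@700b0600 {
        status = "disabled";
    };
};
EOF
enable_sdmmc3 "$demo_dts"
cat "$demo_dts"
```

On the host, you would call enable_sdmmc3 tegra210-p3448-0002-p3449-0000-b00.dts in the kernel/dtb folder before recompiling it with dtc; the neighbouring sdhci@700b0600 node in the demo stays disabled.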

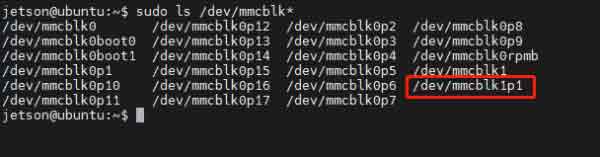

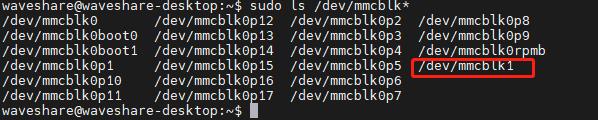

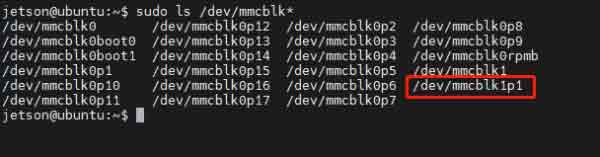

- Check if the SD card is identified:

sudo ls /dev/mmcblk*

- If mmcblk1p1 is listed, the SD card has been recognized.

- Format the SD card.

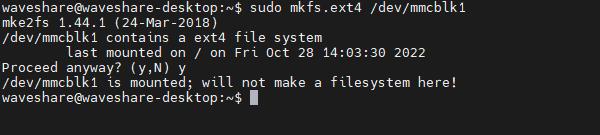

sudo mkfs.ext4 /dev/mmcblk1

If a message like the one shown appears, the SD card already contains a mounted file system.

Unmount the SD card first (press Tab to auto-complete the mount point):

sudo umount /media/

Then run the format command again.

After formatting succeeds, enter:

sudo ls /dev/mmcblk*

Only mmcblk1 is listed for the SD card (no mmcblk1p1), as shown below.

- Modify the startup path.

sudo vi /boot/extlinux/extlinux.conf

Find the line APPEND ${cbootargs} quiet root=/dev/mmcblk0p1 rw rootwait rootfstype=ext4 console=ttyS0,115200n8 console=tty0, change mmcblk0p1 to mmcblk1, and save the file.

- Mount the SD card.

sudo mount /dev/mmcblk1 /mnt

- Copy the system to the SD card (the command prints nothing while it runs; please wait patiently).

sudo cp -ax / /mnt

- After the copy is complete, unmount the SD card (do not remove it).

sudo umount /mnt/

- Restart the system.

sudo reboot

- Jetson Nano board.

- 5V 4A power adapter.

- Install the device tree compiler (dtc) on the virtual machine.

sudo apt-get install device-tree-compiler

- Go to the kernel/dtb folder under the HW Imager path and decompile the .dtb file into a .dts source file (adjust the path for other JetPack versions):

cd ~/nvidia/nvidia_sdk/JetPack_4.6_Linux_JETSON_NANO_TARGETS/Linux_for_Tegra/kernel/dtb
dtc -I dtb -O dts -o tegra210-p3448-0002-p3449-0000-b00.dts tegra210-p3448-0002-p3449-0000-b00.dtb

- Modify the device tree.

sudo vim tegra210-p3448-0002-p3449-0000-b00.dts

- Find the sdhci@700b0400 node, change status = "disabled" to status = "okay", and add the following TF card properties below it:

cd-gpios = <0x5b 0xc2 0x0>;
sd-uhs-sdr104;
sd-uhs-sdr50;
sd-uhs-sdr25;
sd-uhs-sdr12;
no-mmc;
uhs-mask = <0xc>;

- Compile dtb files.

dtc -I dts -O dtb -o tegra210-p3448-0002-p3449-0000-b00.dtb tegra210-p3448-0002-p3449-0000-b00.dts

- To flash the system, the Jetson Nano must be in recovery mode and connected to the Ubuntu computer. Then run:

cd ~/nvidia/nvidia_sdk/JetPack_4.6_Linux_JETSON_NANO_TARGETS/Linux_for_Tegra
sudo ./flash.sh jetson-nano-emmc mmcblk0p1

- Disconnect the USB cable and remove the jumper cap, then power on the Jetson Nano and complete the initial setup.

- Check if the SD card is identified:

sudo ls /dev/mmcblk*

- If mmcblk1p1 is listed, the SD card has been recognized.

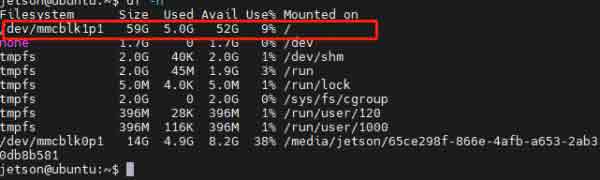

- (Optional) Configure the system to boot from the SD card:

sudo vi /boot/extlinux/extlinux.conf

- Find the line APPEND ${cbootargs} quiet root=/dev/mmcblk0p1 rw rootwait rootfstype=ext4 console=ttyS0,115200n8 console=tty0, change mmcblk0p1 to mmcblk1p1, save the file, and restart the system.

- If you are using the image we provide, the user name after rebooting is jetson and the password is jetson. If you installed the image yourself, use the user name and password you set.

Note: If you use a 64 GB SD card, open a terminal after entering the system and run df -h to check the disk size. If the size shown is incorrect, refer to the image expansion entry in the FAQ.

- If you use the image provided by Waveshare, the user name and password are as follows:

- Connect the Jetson Nano to a LAN port on the router with a network cable.

- Make sure the Jetson Nano and your computer are connected to the same router or network segment.

- Method 1: Log in to the router to find the IP of Jetson Nano.

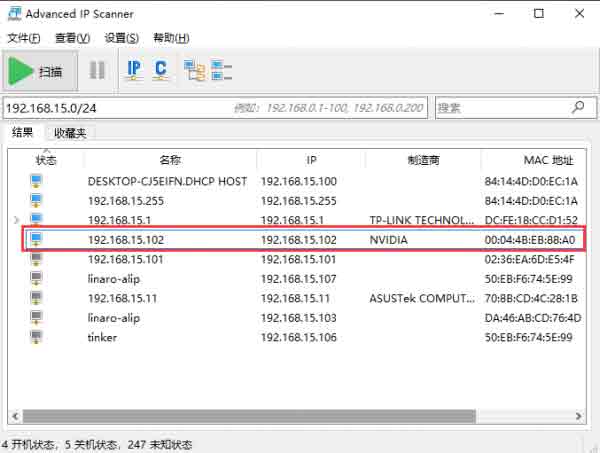

- Method 2: Use a LAN IP scanning tool. Advanced IP Scanner is used as an example here.

- 1. Run Advanced IP Scanner.

- 2. Click the "Scan" button to scan the IP addresses on the current LAN.

- 3. Record all IP addresses whose Manufacturer column contains "NVIDIA".

- 4. Power on the Jetson Nano and connect it to the network.

- 5. Click the "Scan" button again to rescan the LAN.

- 6. Exclude the previously recorded "NVIDIA" addresses; the remaining NVIDIA address is your Jetson Nano's IP address.

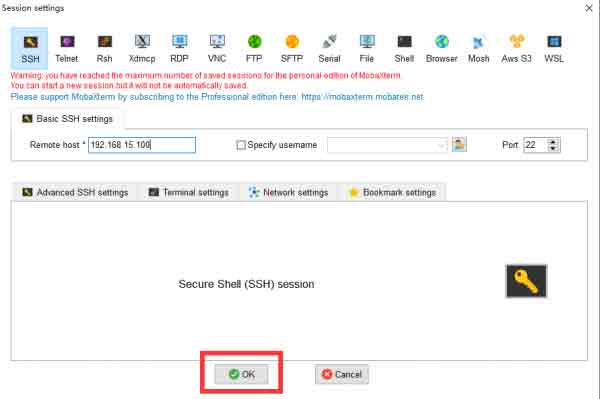

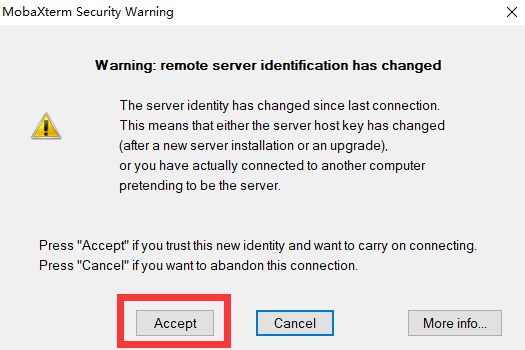

- 1. Download MobaXterm and unzip it.

- 2. Open MobaXterm, click Session, and choose "SSH".

- 3. In Remote host, enter the IP address found earlier, for example 192.168.15.102 (use your actual IP), then click OK.

- 4. Click "Accept". For the Waveshare image, the login name is waveshare and the password is waveshare (nothing is displayed while you type the password; this is normal. Press Enter to confirm).

- Compared with SSH and VNC, NoMachine may be less familiar. NoMachine is free remote desktop software.

- NoMachine supports all major operating systems, including Windows, macOS, Linux, iOS, Android, and Raspberry Pi.

- Download and unzip NoMachine.

- After unzipping, copy the ".deb" file to the Jetson Nano with a USB flash drive or a file transfer tool.

- Install it with the following command (replace the file name with that of the package you copied):

sudo dpkg -i <nomachine_package>.deb

- On your computer, download and install NoMachine. After clicking "Finish", restart the computer.

- Without a monitor, you need to log in through a remote desktop to access the Jetson Nano desktop. (A monitor is recommended, because VNC has some latency.)

- The Jetson Nano uses vino as its default VNC server, but its default settings need some changes.

- Note: do not run the commands above with sudo; mypassword is the password used to connect over VNC.
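The vino commands this note refers to are not reproduced in this section. As an assumption, the sketch below uses a commonly used set of org.gnome.Vino gsettings keys on JetPack 4.x: it disables the confirmation prompt and the encryption requirement, selects VNC password authentication, and stores the password base64-encoded, which is the form vino expects for vnc-password. vino_setup is a hypothetical helper that only prints the commands unless called with --apply, so you can review them first; run it as the desktop user, without sudo:

```shell
#!/bin/sh
# vino_setup PASSWORD [--apply] (hypothetical helper): print the gsettings
# commands that configure vino for VNC password login, or run them with --apply.
vino_setup() {
  password="$1"
  mode="$2"
  # vino reads vnc-password as base64 text, so encode it without a trailing newline
  encoded=$(printf '%s' "$password" | base64)
  run() {
    if [ "$mode" = "--apply" ]; then
      "$@"
    else
      echo "$*"
    fi
  }
  run gsettings set org.gnome.Vino prompt-enabled false
  run gsettings set org.gnome.Vino require-encryption false
  run gsettings set org.gnome.Vino authentication-methods "['vnc']"
  run gsettings set org.gnome.Vino vnc-password "$encoded"
}

# Dry run: prints the four commands; mypassword is a placeholder password
vino_setup mypassword
```

On the Jetson Nano, vino_setup mypassword --apply would apply the same settings for the current user.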

- Download and install VNC Viewer

- Power on the Nano normally.

- After the Jetson Nano has booted normally, connect its Micro USB port to the Ubuntu host with a USB data cable.

- Run sdkmanager on the Ubuntu host to open SDK Manager (install SDK Manager first).

- The procedure is similar to flashing the system; the difference is that in the component selection step you check Jetson SDK Components instead of Jetson OS, then continue with the installation.

- After the resources are downloaded, a pop-up window asks for a username and password; enter the username and password of the Jetson Nano system.

- Wait for the SDK to be installed successfully.

- The sudo command runs commands as the system administrator (root).

- To use the root user, log in as the waveshare user and execute the following command:

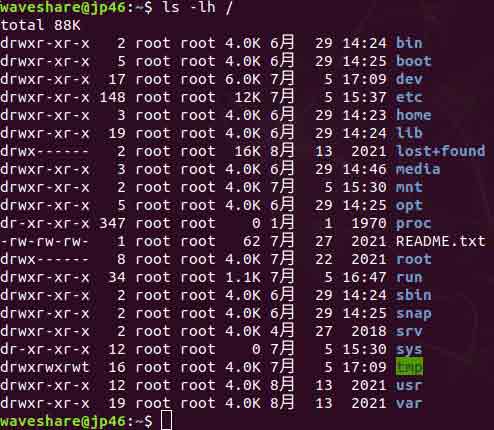

- The ls command displays the contents of a directory (by default, the files and subdirectories in the current working directory).

- Common commands:

- To learn about more options of a command, use its --help option, for example ls --help:

- The chmod command controls the access permissions of files.

- Linux/Unix file permissions are divided into three classes: the file owner (Owner), the user group (Group), and other users (Other Users).

- In the figure below, detailed file information for the Linux root directory is displayed. The most important part is the first column, which describes the permissions of files and directories; the third and fourth columns show the user and group that own each file or directory.

- Linux has three basic permissions: read (r), write (w), and execute (x). The permission column consists of 10 characters: the first indicates the file type, and the remaining nine form three groups of three for the file owner, the group, and other users.

- If the first character is d, the entry is a directory; l indicates a symbolic link and c a character device file; - indicates a regular file.

- The first rwx group (-rwx------) shows the permissions of the file owner.

- The second rwx group (----rwx---) shows the permissions of users in the same group.

- The third rwx group (-------rwx) shows the permissions of other users.

- For example:

- -rwxrwxrwx means every user can read, write, and execute the file.

- -rw------- means only the file owner can read and write the file; nobody can execute it.

- -rw-rw-rw- means all users can read and write the file.
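To see these strings on real files, you can set permissions with chmod and print the same 10-character column that ls -l shows. A minimal sketch, assuming GNU coreutils stat (its %A format prints the ls -l permission string), run in a scratch directory:

```shell
#!/bin/sh
# Print the ls -l style permission column for a scratch file and directory.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
touch file
mkdir dir

chmod a=rwx file
stat -c '%A %n' file      # -rwxrwxrwx file: every user can read, write, execute
chmod u=rw,go= file
stat -c '%A %n' file      # -rw------- file: only the owner can read and write
chmod a=rw file
stat -c '%A %n' file      # -rw-rw-rw- file: all users can read and write
chmod u=rwx,go=rx dir
stat -c '%A %n' dir       # drwxr-xr-x dir: the leading d marks a directory
ls -l                     # the first column uses the same notation
```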

- Symbolic mode

- who (user type)

- operator (symbol pattern table)

- permission (symbol pattern table)

- Symbolic Pattern Examples

- 1. Add read permissions to all users of the file:

- 2. Remove execute permission for all users of the file:

- 3. Add read and write permissions to all users of the file:

- 4. Add read, write and execute permissions to all users of the file:

- 5. Give the file owner read and write permissions and clear all permissions for the group and other users (an empty value after = means no permission):

- 6. Add read permission for the owner to all files in the waveshare directory and its subdirectories, and remove read permission for the group and other users:
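A sketch of the six examples above as chmod commands, applied to scratch files. It uses the who letters u (owner), g (group), o (others), and a (all) with the operators +, -, and =; it assumes GNU stat for printing octal modes and sets umask 022 so the starting modes are predictable:

```shell
#!/bin/sh
# Apply the six symbolic-mode examples to scratch files and record the results.
umask 022                        # predictable default modes for new files/dirs
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
touch file
chmod 000 file                   # start from no permissions at all

chmod a+r file                   # 1. add read for all users
s1=$(stat -c '%a' file)          #    -> 444
chmod a-x file                   # 2. remove execute for all users
s2=$(stat -c '%a' file)          #    -> 444 (no execute bits were set)
chmod a+rw file                  # 3. add read and write for all users
s3=$(stat -c '%a' file)          #    -> 666
chmod a+rwx file                 # 4. add read, write and execute for all users
s4=$(stat -c '%a' file)          #    -> 777
chmod u=rw,go= file              # 5. owner rw, nothing for group and others
s5=$(stat -c '%a' file)          #    -> 600
echo "$s1 $s2 $s3 $s4 $s5"       # prints: 444 444 666 777 600

# 6. recursively add read for the owner and remove read for group and others
mkdir -p waveshare/sub
touch waveshare/sub/notes.txt    # 644 under umask 022
chmod -R u+r,go-r waveshare
stat -c '%a %n' waveshare waveshare/sub/notes.txt   # 711 waveshare, 600 waveshare/sub/notes.txt
```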

- Octal literals

- The chmod command can also specify permissions as octal numbers. A file's permissions consist of 9 bits in three groups of three: read, write, and execute for the owner (User), for the group (Group), and for other users (Other). Each permission has a value (r = 4, w = 2, x = 1), and each octal digit is the sum of the values in one group.

- For example, 765 is interpreted as follows:

- The owner's permission as a number is the sum of the owner's three permission bits. For example, rwx is 4+2+1 = 7.

- The group's permission as a number is the sum of the group's permission bits. For example, rw- is 4+2+0 = 6.

- The other users' permission as a number is the sum of their permission bits. For example, r-x is 4+0+1 = 5.

- Commonly used numeric permissions:

- 400 -r-------- The owner can read; no one else has any access.

- 644 -rw-r--r-- Everyone can read, but only the owner can edit.

- 660 -rw-rw---- The owner and group users can read and write; others have no access.

- 664 -rw-rw-r-- Everyone can read, but only the owner and group users can edit.

- 700 -rwx------ The owner can read, write, and execute; other users have no access.

- 744 -rwxr--r-- Everyone can read, but only the owner can edit and execute.

- 755 -rwxr-xr-x Everyone can read and execute, but only the owner can edit.

- 777 -rwxrwxrwx Everyone can read, write, and execute (not recommended).
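The digit arithmetic above can be checked with a small shell function. perm_to_octal is a hypothetical helper that assumes a 9-character rwx string without the leading file-type character; it adds 4, 2, and 1 for r, w, and x in each group of three, then cross-checks the result against chmod (GNU stat assumed):

```shell
#!/bin/sh
# perm_to_octal RWXSTRING (hypothetical helper): convert a 9-character
# permission string such as rwxrw-r-x into its octal form (here 765).
perm_to_octal() {
  out=""
  for start in 1 4 7; do
    triplet=$(printf '%s' "$1" | cut -c "$start-$((start + 2))")
    digit=0
    case "$triplet" in r??) digit=$((digit + 4)) ;; esac   # read    = 4
    case "$triplet" in ?w?) digit=$((digit + 2)) ;; esac   # write   = 2
    case "$triplet" in ??x) digit=$((digit + 1)) ;; esac   # execute = 1
    out="$out$digit"
  done
  printf '%s\n' "$out"
}

perm_to_octal rwxrw-r-x   # prints 765
perm_to_octal rw-r--r--   # prints 644

# Cross-check with chmod: set mode 765, then convert the displayed string back.
demo_file=$(mktemp)
chmod 765 "$demo_file"
shown=$(stat -c '%A' "$demo_file" | cut -c 2-)   # drop the leading '-'
echo "$shown -> $(perm_to_octal "$shown")"       # prints rwxrw-r-x -> 765
```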

- For example:

- Add read permissions to all users of the file, and the owner and group users can edit:

sudo chmod 664 file


- The touch command changes the timestamps of a file or directory (access time and modification time). If the file does not exist, touch creates a new empty file.

- For example, to create an empty file named "file.txt" in the current directory, enter the following command:

- The mkdir command creates directories.

- In the working directory, create a subdirectory named waveshare:

- Create a directory named waveshare/test in the working directory.

- The -p option creates the waveshare directory first if it does not already exist. (Note: without -p, this example reports an error if waveshare does not exist.)

- Change the current working directory:
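A sketch of the touch, mkdir, and cd steps above, run in a scratch directory created with mktemp so it is safe to try:

```shell
#!/bin/sh
# touch, mkdir and cd in a scratch directory.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1

touch file.txt               # create an empty file.txt (or update its timestamps)
mkdir waveshare              # create the subdirectory waveshare
mkdir -p waveshare/test      # -p also creates any missing parent directories

# Without -p, mkdir fails when the parent directory does not exist.
if mkdir missing/test 2>/dev/null; then
  echo "unexpected: mkdir created missing/test"
else
  echo "mkdir without -p failed: missing/ does not exist"
fi

cd waveshare/test || exit 1  # change the working directory
pwd                          # path ends in /waveshare/test
```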

- The cp command copies files or directories.

- Options:

- -a: Usually used when copying directories; it preserves links and file attributes and copies everything under the directory. It is roughly equivalent to combining -d, -p, and -R.

- -d: Preserve links when copying. Links here are similar to shortcuts in Windows.

- -f: Overwrite existing target files without prompting.

- -i: The opposite of -f: prompt before overwriting a target file, and overwrite it only if the user answers "y".

- -p: Copy the modification time and access permissions along with the file contents.

- -r: If the source is a directory, copy all its subdirectories and files.

- -l: Create hard links instead of copying files.

- To copy everything in the test/ directory into a new directory named "newtest", enter the following command:
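A sketch of copying test/ to newtest in a scratch directory; it also shows how -a differs from -r by preserving the source's modification time. It assumes GNU touch (for -d with a date string) and GNU stat:

```shell
#!/bin/sh
# Copy a directory tree with cp -r and with cp -a in a scratch directory.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
mkdir -p test/sub
echo "hello" > test/file1
echo "world" > test/sub/file2
touch -d '2020-01-01 00:00' test/file1   # give file1 an old modification time

cp -r test newtest      # newtest does not exist yet, so it becomes a copy of test
cp -a test archived     # -a also preserves attributes such as timestamps

ls -R newtest
stat -c '%y %n' test/file1 newtest/file1 archived/file1
```

Note that if newtest already exists, cp -r test newtest copies the directory into it as newtest/test instead.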

- The mv command renames a file or directory, or moves it to another location.

- Options:

- -b: If the target file or directory exists, back it up before overwriting it.

- -i: If the source has the same name as an existing target, ask before overwriting. Enter "y" to overwrite or "n" to cancel.

- -f: If the source has the same name as an existing target, overwrite it without asking.

- -n: Do not overwrite any existing files or directories.

- -u: Move only when the source file is newer than the target file or the target does not exist.

- To move file1 from the test/ directory to the /home/waveshare directory, enter the following command:
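A sketch of moving, renaming, and the -n option in a scratch directory; dest/ stands in for /home/waveshare so the example does not touch your home directory:

```shell
#!/bin/sh
# Move, rename and no-clobber move in a scratch directory.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
mkdir test dest
echo "data" > test/file1

mv test/file1 dest/                 # move: test/file1 no longer exists
mv dest/file1 dest/renamed.txt      # rename inside the same directory

echo "new" > test/file1
mv -n test/file1 dest/renamed.txt   # -n: do not overwrite the existing file
cat dest/renamed.txt                # still prints: data
```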

- The rm command is used to delete a file or directory.

- Options:

- -i: Ask for confirmation before deleting each file.

- -f: Delete without asking for confirmation, even if the file is write-protected.

- -r: Delete the directory and all files and subdirectories under it recursively.

- To delete a file, use rm directly. To delete a directory, you must add the -r option, for example:

sudo rm test.txt

rm: remove regular file 'test.txt'? y

sudo rm homework

rm: cannot remove 'homework': Is a directory

sudo rm -r homework

rm: remove directory 'homework'? y
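The same behavior can be reproduced safely in a scratch directory. This sketch uses plain rm without sudo, since the demo files belong to the current user, and checks rm's exit status instead of reading its messages:

```shell
#!/bin/sh
# Remove a file and a directory tree in a scratch directory.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
touch test.txt
mkdir -p homework/chapter1
touch homework/chapter1/answers.txt

rm test.txt                          # a regular file can be removed directly
if rm homework 2>/dev/null; then     # without -r, rm refuses to remove a directory
  echo "unexpected: homework was removed without -r"
else
  echo "rm without -r failed on the directory, as expected"
fi
rm -r homework                       # -r removes the directory and everything in it
ls -A                                # prints nothing: the scratch directory is empty
```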

- The reboot command restarts the computer. Configuration changes on the Jetson Nano often require a restart.

- Options:

- -n: Do not write cached data back to the disk before rebooting.

- -w: Do not actually reboot; only write a record to the /var/log/wtmp file.

- -d: Do not write a record to the /var/log/wtmp file (-n implies -d).

- -f: Force a reboot without calling the shutdown command.

- -i: Shut down all network interfaces before rebooting.

sudo reboot

- Do not unplug the power cord to turn off the Jetson Nano. The system uses memory as a temporary cache, so if you cut the power directly, some data in memory may not be written to the eMMC or SD card in time. Data on the storage may then be lost or corrupted, and the system may fail to boot. Use the shutdown command to power off safely.

- Parameter:

- -t seconds: set the shutdown procedure after a few seconds.

- -k: don't actually shut down, just send a warning message to all users.

- -r: reboot after shutdown.

- -h: Halt (power off) the system after shutting down.

- -n: Do not use the normal shutdown procedure; forcibly kill all running programs and then shut down.

- -c: Cancel a shutdown that is currently in progress.

- -f: Skip fsck (the Linux file system check) on the next boot.

- -F: Force fsck on the next boot.

- time: The time at which to shut down.

- message: A warning message sent to all users.

- Example:

- Shut down now.

sudo shutdown -h now

- Shut down after 10 minutes.

sudo shutdown -h 10

- Restart the computer.

sudo shutdown -r now


- Whichever command you use to shut down the system, root privileges are required. A regular user (such as waveshare) can use sudo to obtain root privileges temporarily.

- The pwd command displays the current working directory. On the Jetson Nano, typing pwd outputs something like "/home/waveshare".

- The head command displays the beginning of a file. Use -n to specify the number of lines to display (10 by default) or -c to specify the number of bytes.

- The tail command displays the end of a file. Use -n to specify the number of lines or -c the number of bytes; a value of the form +K (for example, -n +5) starts the output at line K instead.

- The df command displays the available and used disk space on mounted file systems. Use df -h for human-readable output, with sizes shown as M (MB) or G (GB) instead of 1K blocks.
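A quick way to see these options is a numbered test file; this sketch uses seq (GNU coreutils) to create it in a scratch location:

```shell
#!/bin/sh
# head and tail on a 20-line file containing the numbers 1 to 20.
demo_file=$(mktemp)
seq 1 20 > "$demo_file"

head "$demo_file"            # first 10 lines (the default): 1 to 10
head -n 3 "$demo_file"       # 1 2 3 (one per line)
head -c 4 "$demo_file"       # first 4 bytes: "1", newline, "2", newline
tail -n 2 "$demo_file"       # 19 20
tail -n +19 "$demo_file"     # start at line 19 and print to the end: 19 20
```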

- The tar command creates and extracts archive files; it can add files to an archive and unpack them.

- Compress files:

- Extract files:
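A typical gzip-based compress and extract pair, run in a scratch directory, is sketched below. The option letters are c (create), x (extract), t (list), z (gzip), v (verbose), and f (archive file name); the -j option used earlier for the .tbz2 JetPack archives selects bzip2 instead of gzip:

```shell
#!/bin/sh
# Create, list and extract a gzip-compressed tar archive in a scratch directory.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
mkdir -p waveshare/docs
echo "hello" > waveshare/docs/readme.txt

tar -czvf waveshare.tar.gz waveshare      # compress the waveshare directory
tar -tzf waveshare.tar.gz                 # list the archive contents
mkdir restore
tar -xzvf waveshare.tar.gz -C restore     # extract into restore/ (-C changes directory first)
cat restore/waveshare/docs/readme.txt     # prints: hello
```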

- apt (Advanced Packaging Tool) is a command-line package manager for Debian and Ubuntu.

- The apt command provides subcommands for finding, installing, upgrading, and removing a package, a group of packages, or even all packages; the commands are concise and easy to remember.

- apt commands that change the system (such as update, install, upgrade, and remove) require administrator (root) privileges.

- Common apt commands:

- Refresh the list of available packages and versions: sudo apt update.

- Upgrade packages: sudo apt upgrade.

- List upgradable packages and their versions: apt list --upgradable.

- Upgrade packages, removing installed packages if needed to complete the upgrade: sudo apt full-upgrade.

- Install a package: sudo apt install <package_name>.

- Install multiple packages: sudo apt install <package_1> <package_2> <package_3>.

- Upgrade only a specific package: sudo apt install --only-upgrade <package_name>.

- Show package details such as version number, installed size, and dependencies: apt show <package_name>.

- Remove a package: sudo apt remove <package_name>.

- Remove unused dependencies and libraries: sudo apt autoremove.

- Remove a package together with its configuration files: sudo apt purge <package_name>.

- Search for packages: apt search <keyword>.

- List all installed packages: apt list --installed.

- List all available versions of packages: apt list --all-versions.

- For example, install the nano editor:

sudo apt install nano

- The "ifconfig" command displays the network configuration of the system's interfaces. Run with no arguments, it lists all active interfaces.

- When connecting via SSH, you can find the IP address with ifconfig. Enter in the terminal:

- Check the IP address of the wired network (eth0):

- Check the IP address of the wireless network (wlan0):

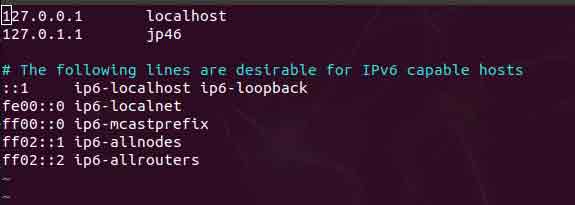

- The "hostname" command displays the current hostname of the system. Jetson Nano is often accessed with remote tools, and by default its IP address is assigned dynamically, so the address may change.

- If the IP address of Jetson Nano changes, you can still log in using the hostname.

- Replace jp46 with the new name, such as waveshare, then press ZZ (Shift+Z twice) to save and exit:

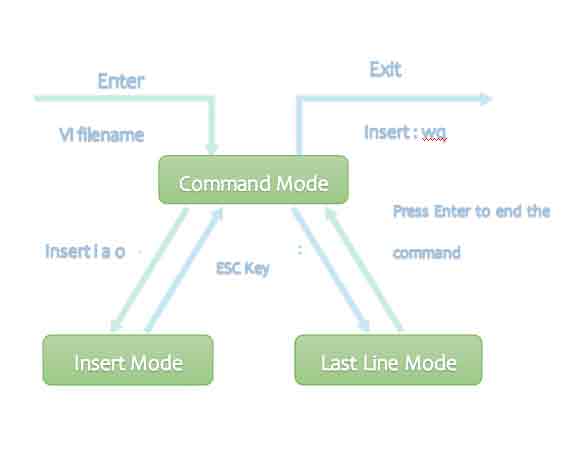

- Vim is the standard text editor on virtually all Unix and Linux systems, and it is as capable as most modern editors. This section briefly introduces its usage and common commands.

- Vim has three basic modes: command mode, insert mode, and last line mode (bottom line command mode).

- Command mode: move the cursor, delete characters, words, or lines, and move or copy blocks of text.

- Insert mode: type characters and edit the file.

- Last line mode: save the file or exit Vim, and set the editing environment, for example searching for strings or displaying line numbers.

- We can think of these three modes as the icons at the bottom:

- Open file, save, close file (use in vi command mode):

- Insert text or lines (used in command mode; each of the following commands enters insert mode, and pressing ESC returns to command mode):

- Delete and restore characters or lines (used in vi command mode):

- Copy and paste (used in vi command mode):

- Transferring files with MobaXterm is simple and convenient.

- To transfer files from Windows to Jetson Nano, drag them into the file browser panel on the left side of MobaXterm.

- Similarly, to transfer files from Jetson Nano to Windows, drag them from the left panel of MobaXterm into a Windows folder.

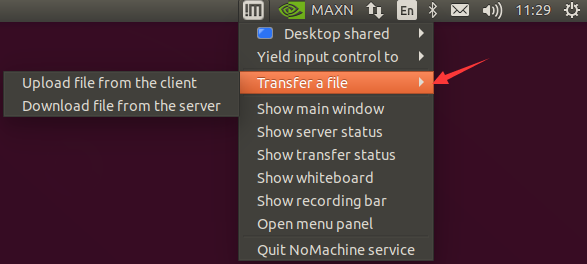

- When the NoMachine remote connection succeeds, the NoMachine icon appears in the upper right corner of the Jetson Nano desktop. Click the icon and select "Transfer a file".

- "Upload file from the client" transfers files from the Windows computer to Jetson Nano.

- "Download file from the server" transfers files from Jetson Nano to the Windows computer.

- The scp command securely copies files and directories between Linux systems over an encrypted (SSH) connection.

- Format:

- First open the Windows folder where you want to store the file, hold down Shift, right-click a blank area, and open Windows PowerShell there.

- To copy a file from Jetson Nano to the local Windows computer, enter in the terminal:

- To copy a file from the local Windows computer to Jetson Nano, enter in the terminal:

- To copy a folder from Jetson Nano to the local Windows computer, add the -r parameter because the source is a directory. Enter in the terminal:

- To copy a folder from the local Windows computer to Jetson Nano, enter in the terminal:

- Create a new blank file with the .img extension on the Windows desktop. Insert the SD card containing the system and select the drive letter of that SD card.

- Open Win32DiskImager, select the .img file, and click "Read" to save the contents of the Jetson Nano SD card into the image file.

- After logging in for the first time, you can connect to Wi-Fi from the command line as follows.

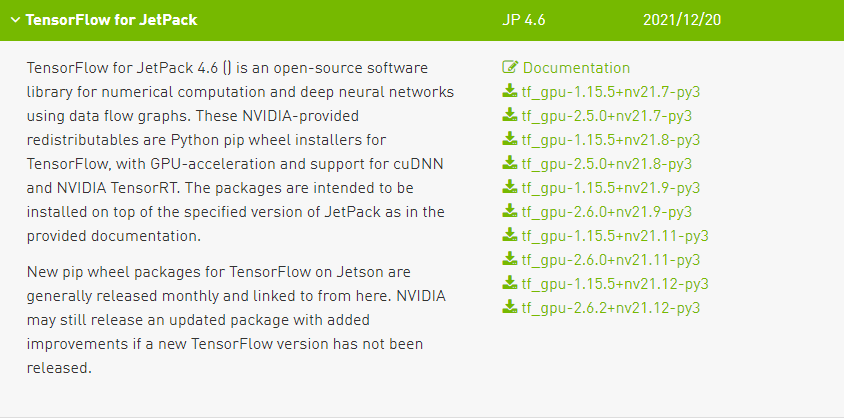

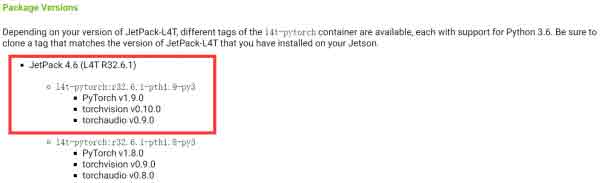

- This tutorial uses the JetPack 4.6 system image as an example, with Python 3.6, TensorFlow 2.5.0, and PyTorch 1.9.0.

- Note: The TensorFlow and PyTorch versions must match the JetPack version.

- Normally CUDA is installed on Jetson Nano by default, but in some cases it is not. In that case, install it as follows:

- Transfer the installation package to Jetson Nano, install the local repository package, and add its key to the local trusted key database:

- Install the CUDA Toolkit:

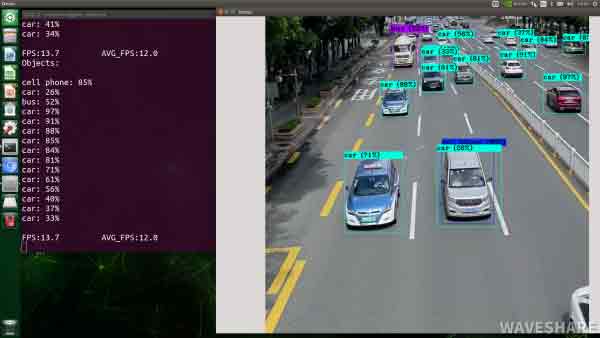

- There are three basic inference modes: image, video, and camera (live image).

- YOLOv4 and YOLOv4-tiny (a lighter model, better suited to Jetson Nano) are used for testing. First, download the trained model weight files.

- Although YOLOv4 is an excellent algorithm, its frame rate on Jetson Nano is low.

- The Hello AI World project integrates NVIDIA's powerful TensorRT acceleration engine, which improves performance several times over.

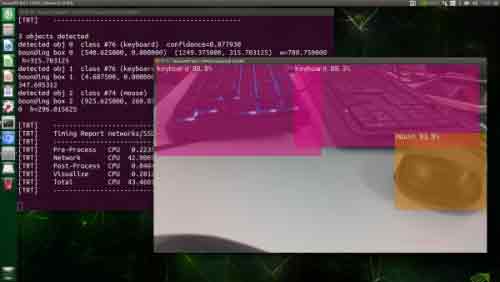

- Use the camera to recognize objects in the environment.

- The content below is a list of pre-trained object detection networks available for download, along with the "--network" parameter for loading the pre-trained model:

- Use the "detectnet" program to locate objects in a static image. In addition to the input/output paths, it accepts some additional command-line options:

- The optional "--network" flag changes the detection model in use (the default is SSD-Mobilenet-v2).

- The "--overlay" flag accepts a comma-separated combination of box, lines, labels, conf, or none.

- The default, "--overlay=box,labels,conf", displays boxes, labels, and confidence values.

- The box option draws filled bounding boxes, while lines draws only unfilled outlines.

- The "--alpha" value sets the alpha blending value used during overlay (the default is 120).

- The "--threshold" value sets the minimum detection threshold (the default is 0.5).

- The "--camera" flag sets the camera device to use (the default is MIPI CSI sensor 0, "--camera=0").

- The "--width" and "--height" flags set the camera resolution (the default is 1280 x 720).

- The resolution should be set to a format supported by the camera, which can be queried with "v4l2-ctl --list-formats-ext".
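Because an invalid "--overlay" value is only noticed when detectnet starts, a small Python sketch can assemble the command line and reject unknown overlay options in advance. It assumes the option names box, lines, labels, conf, and none (as in the default "--overlay=box,labels,conf") and the flags described above; build_detectnet_args and the file names are hypothetical.

```python
# Overlay option names assumed from the default "--overlay=box,labels,conf" and the list above.
VALID_OVERLAYS = ("box", "lines", "labels", "conf", "none")


def build_detectnet_args(input_path, output_path, network="ssd-mobilenet-v2",
                         overlay="box,labels,conf", threshold=0.5, alpha=120):
    """Assemble a detectnet command line, rejecting unknown --overlay values early."""
    parts = [p.strip() for p in overlay.split(",") if p.strip()]
    unknown = [p for p in parts if p not in VALID_OVERLAYS]
    if not parts or unknown:
        raise ValueError("invalid --overlay value: %r" % overlay)
    return [
        "detectnet",
        "--network=" + network,
        "--overlay=" + ",".join(parts),
        "--threshold=%s" % threshold,   # minimum detection threshold
        "--alpha=%d" % alpha,           # overlay blending value
        input_path,
        output_path,
    ]


if __name__ == "__main__":
    # Hypothetical file names; on the Jetson the list could be passed to subprocess.run().
    print(" ".join(build_detectnet_args("input.jpg", "output.jpg", overlay="lines,labels")))
```

Returning a list instead of one string avoids shell quoting problems when the command is later run with subprocess.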

- The digital inputs and outputs of these GPIOs can be controlled using the Python library provided in the Jetson GPIO library package.

- The Jetson GPIO library provides all the public APIs provided by the RPi.GPIO library. The use of each API is discussed below.

- The Jetson GPIO library provides four methods for numbering I/O pins.

- The first two correspond to the modes provided by the RPi.GPIO library, namely BOARD and BCM, which refer to the pin number of the 40-pin GPIO header and the Broadcom SoC GPIO number respectively.

- The remaining two modes, CVM and TEGRA_SOC, use strings instead of numbers, corresponding to the signal names on the CVM/CVB connector and on the Tegra SoC respectively.

- Specifying the mode is mandatory. To set the mode, call:

- To check which mode has been set, you can call:

- You can disable warnings with the following code:

- The GPIO channel must be set before it can be used as an input or output. To configure a channel as an input, please call:

- To set the channel as output, please call:

- You can also specify an initial value for the output channel:

- You can also set up multiple channels as outputs at the same time:

- To read the value of a channel, use:

- You can also output to a list or tuple of channels:

- If you don't want to clean up all channels, you can also clean up a single channel, or a list or tuple of channels:

- To get information about the Jetson module, read:

- To get information about the library version, read:

- wait_for_edge() function

- This function blocks the calling thread until the provided edge is detected. The function can be called as follows:

- The second parameter specifies the edge to detect, which can be GPIO.RISING, GPIO.FALLING, or GPIO.BOTH. If you just want to limit the wait to a specified time, you can optionally set a timeout:

- event_detected() function

- This function can be used to periodically check if an event has occurred since the last call. The function can be set and called as follows:

- Callback function to run when an edge is detected.

- Edge detection can run the callback function in a second thread, so the callback runs concurrently with your main program in response to an edge. Use it as follows:

- Multiple callbacks can also be added if desired, like this:

- To merge multiple events into one and prevent the callback from being invoked multiple times, optionally set a debounce time:

- Sample demo:

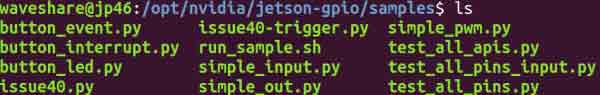

- NVIDIA also provides some simple demos for the Jetson.GPIO library.

- First download jetson-gpio:

- Move the downloaded folder to /opt/nvidia:

- Go to the jetson-gpio folder and install the library:

- Before using it, you also need to create a gpio group, add your current account to it, and grant the group permission to access the GPIOs.

- Copy the 99-gpio.rules file to the rules.d directory:

- For the new rules to take effect, reboot or reload the udev rules by running:

- After the configuration is complete, you can run the demos, for example "simple_input.py", which reads the state of a pin.

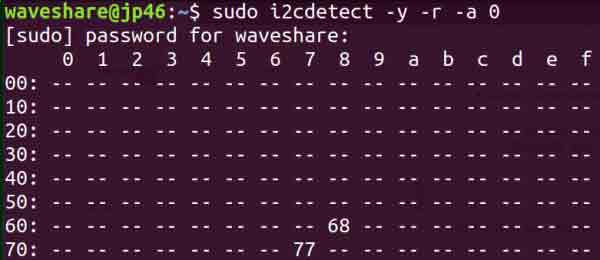

- Query i2c devices:

- Parameters: -y disables interactive mode (no confirmation prompt), -r uses the SMBus "read byte" command for probing, -a scans all addresses, and 0 is the I2C bus number (bus 0).

- Scan (dump) the register data:

- Write register data:

- Parameters:

- Read register data:

sudo i2cget -y 0 0x68 0x90

- Parameters:

Method Two: Directly Download Jetpack

The following JetPack download uses JetPack 4.6.2 as an example. For other JetPack versions, refer to the JetPack download method in the FAQ.

Install System

Install Image on EMMC

Equipment preparation

Hardware Configuration (entering recovery mode)

System Programming

USB Flash Drive And TF Card Booting Principle

Boot USB Flash Drive (copy the system on eMMC)

Preparation

System Installation

Boot TF Card

Method 1: Copy the system directly from eMMC

Note: The operation will format the TF card.

Equipment preparation

Burn Boot Program

Method 2: Flash Another Image to the SD Card

Equipment preparation

Burn Boot Program

Login

Offline Login

Username: waveshare

Password: waveshare

Remote Login

Preparation

Get Jetson Nano IP

Log in with MobaXterm

Terminal Window

NoMachine Login

Install on Jetson Nano

sudo dpkg -i nomachine_7.10.1_1_arm64.deb

Install on Windows PC

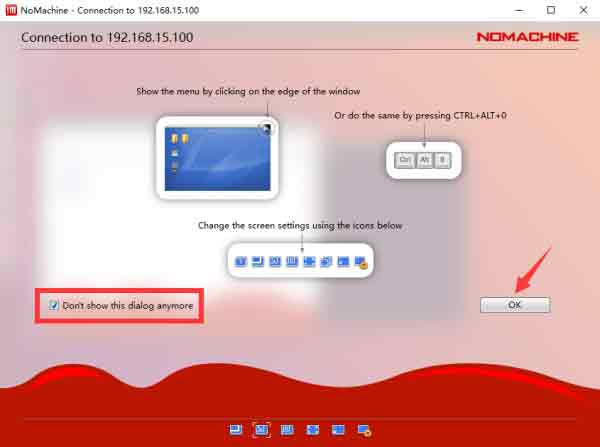

Connect to Jetson Nano

1. Open NoMachine and then enter the IP of Jetson Nano in the "Search" bar. For example, "192.168.15.100".

2. Click "Connect to new host 192.168.15.100", then enter the username and password of Jetson Nano and click "Login".

3. After loading, a software introduction screen appears; just click "OK".

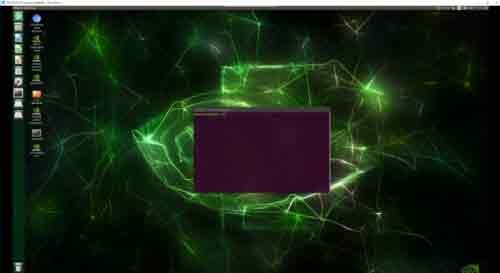

4. You are now logged in to Jetson Nano.

VNC Login

Configure the VNC Server

1. Configure the VNC server (replace mypassword with your own VNC password):

gsettings set org.gnome.Vino require-encryption false
gsettings set org.gnome.Vino prompt-enabled false
gsettings set org.gnome.Vino authentication-methods "['vnc']"
gsettings set org.gnome.Vino lock-screen-on-disconnect false
gsettings set org.gnome.Vino vnc-password $(echo -n "mypassword"|base64)

2. To start the VNC server automatically after login, create an autostart file under ~/.config:

mkdir -p ~/.config/autostart
vim ~/.config/autostart/vino-server.desktop

Add the following content:

[Desktop Entry]
Type=Application
Name=Vino VNC server
Exec=/usr/lib/vino/vino-server
NoDisplay=true

3. Check which display manager you are currently using:

cat /etc/X11/default-display-manager

4. Edit the file:

sudo vim /etc/gdm3/custom.conf

5. Uncomment the following three lines and change the AutomaticLogin value to your own username:

WaylandEnable=false
AutomaticLoginEnable = true
AutomaticLogin = waveshare

6. Reboot Jetson Nano:

sudo reboot
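Step 1 stores the VNC password as the output of `echo -n "mypassword" | base64`. A short Python sketch, assuming the same input string, shows what that stored value is and why it offers no real protection: base64 is a reversible encoding, not encryption. The function name vino_password_value is hypothetical.

```python
import base64


def vino_password_value(password: str) -> str:
    """Same transformation as `echo -n "<password>" | base64` (no trailing newline)."""
    return base64.b64encode(password.encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    encoded = vino_password_value("mypassword")
    print(encoded)                             # bXlwYXNzd29yZA==
    # Decoding recovers the original text, so anyone who can read the setting can read the password.
    print(base64.b64decode(encoded).decode())  # mypassword
```

Because of this, use a VNC password that you do not reuse elsewhere, and only expose the VNC port on a trusted network.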

Download and Install VNC Viewer

Remotely connect to Jetson Nano using VNC Viewer

1. Open VNC Viewer, enter the IP address of Jetson Nano and press Enter to confirm. For example:

192.168.15.102

2. Enter the VNC login password set earlier and click "Ok":

3. At this point, you have successfully logged in to Jetson Nano.

SDK Installation

The system installation above installs only the base system. Other JetPack SDK components, such as CUDA, must be installed after the system boots normally. The installation steps are as follows.

SDK Manager Installation

When using SDK Manager to install the SDK, you do not need to put Jetson Nano into recovery mode, that is, you do not need to short the recovery pins.

Using Command To Install

Users without an Ubuntu host computer can install the SDK directly on Jetson Nano with the following commands:

sudo apt update
sudo apt install nvidia-jetpack

Linux Basic Operation

Common Command Introduction

File System

sudo

sudo su #Switch to the superuser
su waveshare #Switch back to a regular user (waveshare)

ls

ls #List files and directories in the current directory
ls -a #Show all files and directories (hidden files starting with . are also listed)
ls -l #In addition to the file name, list the file type, permissions, owner, file size, and other details
ls -lh #List file sizes in a human-readable format, e.g. 4K

ls --help

chmod

| who | User Type | Description |
| --- | --- | --- |
| u | user | File owner |
| g | group | The file owner's group |
| o | others | All other users |
| a | all | All users, equivalent to ugo |

| Operator | Description |
| --- | --- |
| + | Add permissions for the specified user type |
| - | Remove permissions from the specified user type |
| = | Set exactly the specified permissions, resetting all other permissions of that user type |

| Mode | Name | Description |
| --- | --- | --- |
| r | read | Set read permission |
| w | write | Set write permission |
| x | execute | Set execute permission |
| X | special execute | Set execute permission only if the file is a directory or already has execute permission for some user type |
| s | setuid/gid | When the file is executed, set the setuid or setgid permission according to the user type specified by the who parameter |
| t | sticky bit | Set the sticky bit; on a directory, only a file's owner (or root) can delete or rename files in it |

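chmod a+r file #Grant read permission to all users

chmod a-x file #Remove execute permission from all users

chmod a+rw file #Grant read and write permission to all users

chmod +rwx file #Add read, write, and execute permission for all users (bits masked by the umask are not changed)

chmod u=rw,go= file #Set the owner's permissions to read and write, and remove all permissions from the group and others

chmod -R u+r,go-r waveshare #Recursively add read permission for the owner and remove it from the group and others in the waveshare directory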

| # | Permissions | rwx | Binary |
| --- | --- | --- | --- |
| 7 | Read + Write + Execute | rwx | 111 |
| 6 | Read + Write | rw- | 110 |
| 5 | Read + Execute | r-x | 101 |
| 4 | Read only | r-- | 100 |
| 3 | Write + Execute | -wx | 011 |
| 2 | Write only | -w- | 010 |
| 1 | Execute only | --x | 001 |
| 0 | None | --- | 000 |
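Each octal digit in the table above is the sum r=4, w=2, x=1 for one user class, in the order owner, group, others. As a minimal sketch, the conversion from a symbolic string to the octal number used by chmod can be written in Python and cross-checked with the standard library's stat.filemode; the helper name symbolic_to_octal is hypothetical and only handles r, w, x, and -.

```python
import stat

# Value of each permission letter within one octal digit.
BIT_VALUES = {"r": 4, "w": 2, "x": 1}


def symbolic_to_octal(perms: str) -> int:
    """Convert a 9-character string such as 'rwxr-xr--' to a mode such as 0o754."""
    if len(perms) != 9:
        raise ValueError("expected 9 characters, e.g. 'rwxr-xr--'")
    mode = 0
    for i in range(0, 9, 3):            # owner, group, others
        digit = sum(BIT_VALUES[ch] for ch in perms[i:i + 3] if ch != "-")
        mode = mode * 8 + digit         # shift earlier digits left by one octal place
    return mode


if __name__ == "__main__":
    mode = symbolic_to_octal("rwxr-xr--")
    print(oct(mode))                            # 0o754
    # Cross-check: S_IFREG marks a regular file, so filemode prints a leading "-".
    print(stat.filemode(stat.S_IFREG | mode))   # -rwxr-xr--
```

So `chmod 754 file` gives the owner rwx, the group r-x, and others r--.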

touch

touch file.txt

mkdir

sudo mkdir waveshare

sudo mkdir -p waveshare/test

cd

cd .. #Return to the parent directory
cd /home/waveshare #Enter the /home/waveshare directory
cd #Return to the user's home directory

cp

sudo cp -r test/ newtest

mv

sudo mv file1 /home/waveshare

rm

reboot

sudo reboot

shutdown

pwd

head

head test.py -n 5

tail

df

df -h

tar

tar -cvzf waveshare.tar.gz *

tar -xvzf waveshare.tar.gz
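The same create/extract round trip can be sketched with Python's standard tarfile module, which is handy inside scripts. This is an illustration under assumptions: the directory and file names are hypothetical, and create_archive/extract_archive are not part of any tool.

```python
import os
import tarfile
import tempfile


def create_archive(archive_path: str, source_dir: str) -> None:
    """Roughly what `tar -czf archive_path *` does when run inside source_dir."""
    with tarfile.open(archive_path, "w:gz") as tar:   # "w:gz" = create, gzip-compressed
        for name in sorted(os.listdir(source_dir)):
            tar.add(os.path.join(source_dir, name), arcname=name)


def extract_archive(archive_path: str, dest_dir: str) -> list:
    """Roughly what `tar -xzf archive_path` does when run inside dest_dir."""
    with tarfile.open(archive_path, "r:gz") as tar:   # "r:gz" = read, gzip-compressed
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions: refuse unsafe paths and links while extracting.
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)  # older Python: only extract archives you trust
    return sorted(names)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as work:
        src = os.path.join(work, "waveshare")
        os.makedirs(src)
        for fname in ("a.txt", "b.txt"):
            with open(os.path.join(src, fname), "w") as f:
                f.write("hello " + fname)
        archive = os.path.join(work, "waveshare.tar.gz")
        create_archive(archive, src)
        print(extract_archive(archive, os.path.join(work, "restored")))  # ['a.txt', 'b.txt']
```

Unlike the shell `*`, os.listdir also includes hidden files, so the Python version may archive slightly more than the command above.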

apt

sudo apt install nano

Network

ifconfig

ifconfig

ifconfig eth0

ifconfig wlan0

hostname

1. Log in to Jetson Nano and modify the hosts file, replacing the old hostname (such as jp46) with the new one. The command is as follows:

sudo vim /etc/hosts

2. Modify the hostname file: replace jp46 with the new name, such as waveshare, then press ZZ to save and exit:

sudo vim /etc/hostname

3. After the modification is completed, restart the Jetson Nano:

sudo reboot

4. We can also check the IP address with the following command:

hostname -I

Vim Editor User Guide

1. First remove the default Vi editor:

sudo apt-get remove vim-common

2. Then reinstall Vim:

sudo apt-get install vim

3. For convenience, add the following lines to the end of /etc/vim/vimrc (in vimrc, comments start with a double quote):

" display line numbers
set nu
" enable syntax highlighting
syntax on
" display a tab as four spaces
set tabstop=4

Common Command

vim filename //Open the filename file

:w // save the file

:q //Quit the editor; if the file has been modified, use one of the following commands

:q! //Quit the editor without saving

:wq //Exit the editor and save the file

:wq! //Force quit the editor and save the file

ZZ //Exit the editor and save the file

ZQ //Exit the editor without saving

a //Add text to the right of the current cursor position
i //Add text to the left of the current cursor position
A //Add text at the end of the current line
I //Add text at the beginning of the current line (at the first non-blank character)
O //Create a new line above the current line
o //Create a new line below the current line
R //Replace (overwrite) the text at and after the cursor position
J //Join the current line with the next line (stays in command mode)

x //Delete the current character
nx //Delete n characters starting at the cursor
dd //Delete the current line
ndd //Delete n lines, starting with the current line
u //Undo the previous operation
U //Undo all changes on the current line

yy //Copy the current line to the buffer
nyy //Copy n lines, starting with the current line, to the buffer
yw //Copy from the cursor to the end of the word
nyw //Copy n words starting from the cursor
y^ //Copy from the cursor to the beginning of the line
y$ //Copy from the cursor to the end of the line
p //Paste the buffer contents after the cursor
P //Paste the buffer contents before the cursor

Configuration

File transmission

This tutorial uses a Windows computer connecting remotely to a Linux system (Jetson Nano) as an example. There are several ways to transfer local files to it.

MobaXterm File Transmission

NoMachine File Transmission

SCP File Transmission

scp [parameters] username@hostname_or_IP_address:remote_file_path local_storage_path

scp waveshare@192.168.10.80:file .

Where "." represents the current path.

scp file waveshare@192.168.10.80:

scp -r waveshare@192.168.10.80:/home/waveshare/file .

scp -r file waveshare@192.168.10.80:

Note: The above waveshare needs to be changed to the username of your system, and the IP address to the actual IP address of Jetson Nano.
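Since scp always copies from the first path to the second, the argument order decides the direction of the transfer. A minimal Python sketch makes this explicit; build_scp_command is a hypothetical helper that only assembles the argument list (which could be passed to subprocess.run) and does not connect to any host.

```python
import shlex


def build_scp_command(user, host, remote_path, local_path, download=True, recursive=False):
    """Return scp arguments in the form: scp [options] source destination."""
    args = ["scp"]
    if recursive:
        args.append("-r")  # required when copying a directory
    remote = "%s@%s:%s" % (user, host, remote_path)  # empty remote_path means the home directory
    # Download: remote -> local. Upload: local -> remote.
    args += [remote, local_path] if download else [local_path, remote]
    return args


if __name__ == "__main__":
    cmd = build_scp_command("waveshare", "192.168.10.80", "/home/waveshare/file", ".", recursive=True)
    print(" ".join(shlex.quote(a) for a in cmd))
    # scp -r waveshare@192.168.10.80:/home/waveshare/file .
```

Keeping the command as a list and quoting only for display avoids surprises with spaces in file names.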

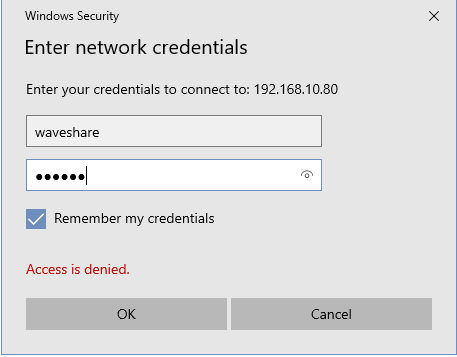

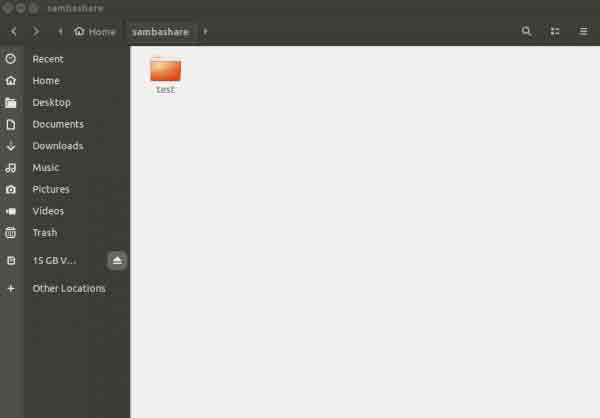

File sharing (Samba)

File sharing is possible using the Samba service. The Jetson Nano file system can be accessed in the Windows Network Neighborhood, which is very convenient.

1. First install Samba. Enter in the terminal:

sudo apt-get update
sudo apt-get install samba -y

2. Create a shared folder named sambashare in the /home/waveshare directory:

mkdir ~/sambashare

3. After the installation is complete, modify the configuration file /etc/samba/smb.conf:

sudo nano /etc/samba/smb.conf

Scroll to the end of the file and add the following lines:

[sambashare]

comment = Samba on JetsonNano

path = /home/waveshare/sambashare

read only = no

browsable = yes

Note: Change waveshare here to your system username; path is the folder you want to share.

4. Restart the Samba service.

sudo service smbd restart

5. Set shared folder password:

sudo smbpasswd -a waveshare

Note: Replace waveshare with your system username. It must be an existing system user; otherwise the command fails.

You will be asked to set a Samba password. Using your system password is recommended because it is easier to remember.

6. After the setup is complete, open File Explorer on your Windows computer and enter the following address (replace the IP address with that of your Jetson Nano):

\\192.168.10.80\sambashare

7. Enter the username and the password set in step 5.

8. To verify, create a new test folder from Windows; it should appear in the sambashare directory on Jetson Nano.
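Step 3 appends a share section to smb.conf by hand. As a hedged sketch, the same section can be generated with Python's configparser. build_share_section is a hypothetical helper. configparser.write does not preserve comments, so append the output to smb.conf rather than rewriting the whole file through configparser; Samba's testparm command can then check the result.

```python
import configparser
import io


def build_share_section(name: str, path: str, comment: str) -> str:
    """Render an smb.conf share section like the one added in step 3."""
    config = configparser.ConfigParser()
    config[name] = {
        "comment": comment,
        "path": path,
        "read only": "no",   # allow clients to write to the share
        "browsable": "yes",  # show the share when browsing the server
    }
    buffer = io.StringIO()
    config.write(buffer)     # writes "key = value" lines under [name]
    return buffer.getvalue()


if __name__ == "__main__":
    print(build_share_section("sambashare", "/home/waveshare/sambashare", "Samba on JetsonNano"))
```

Generating the section this way is mainly useful when setting up several boards or shares from a script.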

System Backup

Camera

View the image from the first connected camera:

nvgstcapture-1.0

View the image from the second connected camera:

nvgstcapture-1.0 --sensor-id=1

FAN

Adjust the fan speed. Note that a 4-wire (PWM) fan is required for speed control.

sudo sh -c 'echo 255 > /sys/devices/pwm-fan/target_pwm' #255 is the maximum speed and 0 stops the fan; change the value to change the speed
cat /sys/class/thermal/thermal_zone0/temp #Get the CPU temperature (in millidegrees Celsius); a program can use it to control the fan automatically
#The system has a built-in temperature control, so manual control is not needed in most cases
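The two sysfs files above can be combined into a simple temperature-driven fan curve. This Python sketch is illustrative only: the 40 °C and 70 °C thresholds and the linear ramp are hypothetical choices, and since the system already controls the fan, a script like this would compete with it.

```python
# Hypothetical thresholds for illustration only.
LOW_C = 40.0
HIGH_C = 70.0
PWM_MAX = 255  # maximum speed written to target_pwm, as in the command above


def millidegrees_to_celsius(raw: str) -> float:
    """sysfs thermal zones report millidegrees Celsius, e.g. '42500' -> 42.5."""
    return int(raw.strip()) / 1000.0


def fan_pwm_for(temp_c: float, low_c: float = LOW_C, high_c: float = HIGH_C) -> int:
    """Linear ramp: 0 (stopped) at or below low_c, PWM_MAX at or above high_c."""
    if temp_c <= low_c:
        return 0
    if temp_c >= high_c:
        return PWM_MAX
    return round(PWM_MAX * (temp_c - low_c) / (high_c - low_c))


def read_temperature(path: str = "/sys/class/thermal/thermal_zone0/temp") -> float:
    with open(path) as f:
        return millidegrees_to_celsius(f.read())


def set_fan_pwm(value: int, path: str = "/sys/devices/pwm-fan/target_pwm") -> None:
    # Writing to this file requires root, like the sudo command above.
    with open(path, "w") as f:
        f.write(str(value))


if __name__ == "__main__":
    # Pure calculation only; no sysfs access here.
    print(fan_pwm_for(millidegrees_to_celsius("52000")))  # 102
```

On the board, `set_fan_pwm(fan_pwm_for(read_temperature()))` would apply one step of this curve when run with sudo.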

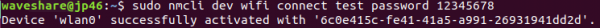

Wi-Fi connection without display (for kits that include a wireless network card)

1. Scan for Wi-Fi networks:

sudo nmcli dev wifi

2. Connect to the Wi-Fi network (replace "wifi_name" and "wifi_password" with the SSID and password of your Wi-Fi):

sudo nmcli dev wifi connect "wifi_name" password "wifi_password"

3. If "successfully" is displayed, the wireless network is connected, and the board will automatically reconnect to this Wi-Fi network the next time it powers on.

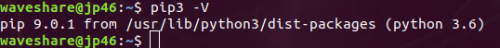

Getting Started with AI

PIP Installation

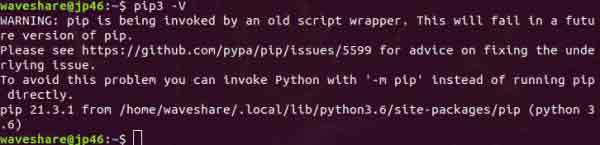

1. Python 3.6 is installed by default on Jetson Nano, so install pip directly:

sudo apt update
sudo apt-get install python3-pip python3-dev

2. After the installation is complete, we check the PIP version.

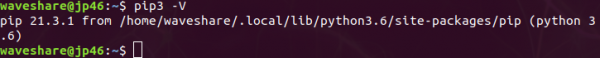

pip3 -V

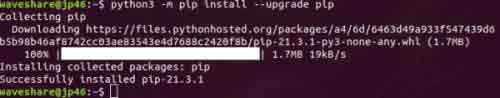

3. The default pip version is 9.0.1; upgrade it to the latest version:

python3 -m pip install --upgrade pip

4. After the upgrade, checking the pip version may report an error:

pip3 -V

5. Fix it with the following commands:

python3 -m pip install --upgrade --force-reinstall pip
sudo reboot

6. Install common machine learning packages:

sudo apt-get install python3-numpy
sudo apt-get install python3-scipy
sudo apt-get install python3-pandas
sudo apt-get install python3-matplotlib
sudo apt-get install python3-sklearn

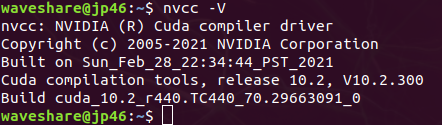

Set up the CUDA environment

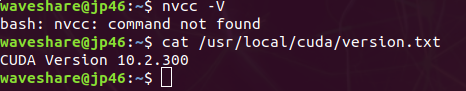

1. Check the CUDA version. If "command not found" appears, you need to configure the environment.

nvcc -V
cat /usr/local/cuda/version.txt

Note: If the "cat" command cannot show the version, go to the "/usr/local/" directory and check whether a CUDA directory exists.

If CUDA is not installed, install it by following the "CUDA is not installed on Jetson Nano" section below, then configure the environment.

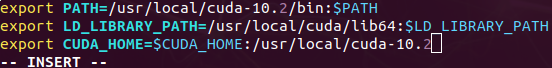

2. Set the environment variables. Open ~/.bashrc:

vim ~/.bashrc

Add the following at the end of the file:

export PATH=/usr/local/cuda-10.2/bin:$PATH
export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH
export CUDA_HOME=/usr/local/cuda-10.2

3. Update the environment variables:

source ~/.bashrc

4. Check the CUDA version again.

nvcc -V
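To check the CUDA setup from a script (for example, before building darknet), a small Python sketch can look for nvcc on PATH and read the release number from `nvcc -V`. It assumes the output contains a line such as "Cuda compilation tools, release 10.2, V10.2.300"; both helper names are hypothetical.

```python
import re
import shutil
import subprocess


def parse_cuda_release(nvcc_output: str):
    """Extract (major, minor) from the 'release X.Y' text of `nvcc -V`, or None."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release():
    """Run `nvcc -V` if nvcc is on PATH; return None when it cannot be found."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None  # same situation as "command not found": check PATH in ~/.bashrc
    result = subprocess.run([nvcc, "-V"], stdout=subprocess.PIPE, universal_newlines=True)
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    print(installed_cuda_release())
```

shutil.which searches the PATH of the current process, so run the script from a shell where ~/.bashrc has already been sourced.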

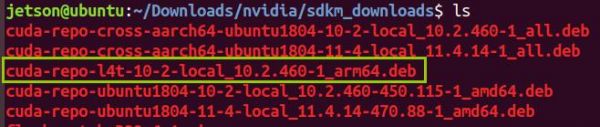

CUDA is not installed on Jetson Nano

1. Open the SDK Manager on the Ubuntu 18.04 computer, skip to step 2, and download CUDA; after the download is complete, find the CUDA installation package.

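cd ~/Downloads/nvidia/sdkm_downloads

Transfer the CUDA repository package to Jetson Nano, install it, add its key, and then install the CUDA Toolkit:

sudo dpkg -i cuda-repo-l4t-10-2-local_10.2.460-1_arm64.deb
sudo apt-key add /var/cuda-repo-10-2-local/7fa2af80.pub
sudo apt update
sudo apt install cuda-toolkit-10-2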

Tensorflow GPU Environment Construction

1. Install the needed package:

sudo apt-get install libhdf5-serial-dev hdf5-tools libhdf5-dev zlib1g-dev zip libjpeg8-dev liblapack-dev libblas-dev gfortran
sudo pip3 install -U pip testresources setuptools==49.6.0

2. Install the Python dependencies:

sudo pip3 install -U --no-deps numpy==1.19.4 future==0.18.2 mock==3.0.5 keras_preprocessing==1.1.2 keras_applications==1.0.8 gast==0.4.0 protobuf pybind11 cython pkgconfig packaging
sudo env H5PY_SETUP_REQUIRES=0 pip3 install -U h5py==3.1.0

3. Install TensorFlow (online installation often fails; see step 4 for offline installation):

sudo pip3 install --pre --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v46 tensorflow

4. Offline installation is recommended: first download the TensorFlow installation package from NVIDIA's official website (taking "jetpack4.6 TensorFlow2.5.0 nv21.08" as an example; Firefox is recommended for the download).

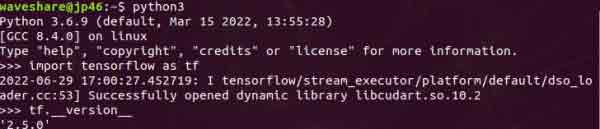

5. After the installation is complete, check that it succeeded by entering in the terminal:

python3
import tensorflow as tf

6. View the version information:

tf.__version__

Pytorch Environment Setting

Pytorch Installation

1. Log in and download PyTorch. Here, PyTorch v1.9.0 is used as an example:

2. Install the dependency libraries:

sudo apt-get install libjpeg-dev zlib1g-dev libpython3-dev libavcodec-dev libavformat-dev libswscale-dev libopenblas-base libopenmpi-dev

3. Install Pytorch

sudo pip3 install torch-1.9.0-cp36-cp36m-linux_aarch64.whl

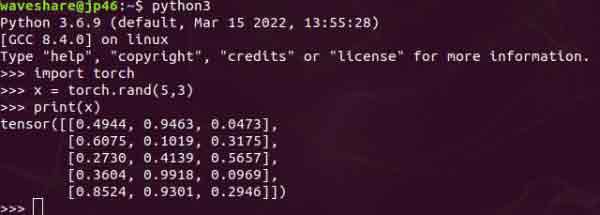

4. Verify whether Pytorch has been installed successfully.

python3
import torch
x = torch.rand(5, 3)
print(x)

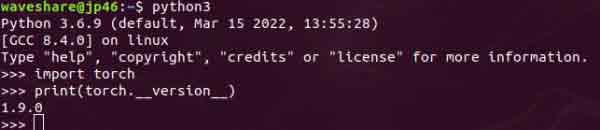

5. View the version information:

import torch
print(torch.__version__)

Torchvision Installation

1. The Torchvision version should match the Pytorch version. The Pytorch version we installed earlier is 1.9.0, and Torchvision installs the v0.10.0 version.

2. Download and install torchvision:

git clone --branch v0.10.0 https://github.com/pytorch/vision torchvision
cd torchvision
export BUILD_VERSION=0.10.0
sudo python3 setup.py install

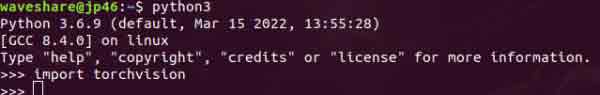

3. Verify whether Torchvision is installed successfully.

python3
import torchvision

4. If an error occurs, the Pillow version may be too new; uninstall it and reinstall:

sudo pip3 uninstall pillow
sudo pip3 install pillow

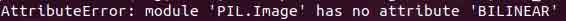

5. View the version information.

import torchvision
print(torchvision.__version__)
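Because a mismatched torchvision build is a common source of import errors, a quick version check can be scripted. In this Python sketch, only the 1.9 to 0.10 pairing comes from this guide; the other rows of the lookup table are assumptions to verify against the official PyTorch/torchvision compatibility table. The helper names are hypothetical.

```python
# PyTorch major.minor -> torchvision major.minor.
# Only "1.9" -> "0.10" is from this guide; other rows are assumptions to verify.
TORCHVISION_FOR_TORCH = {
    "1.8": "0.9",
    "1.9": "0.10",
    "1.10": "0.11",
}


def major_minor(version: str) -> str:
    """'1.9.0' -> '1.9'; local suffixes such as '0.10.0a0+300a8a4' -> '0.10'."""
    return ".".join(version.split("+")[0].split(".")[:2])


def versions_match(torch_version: str, torchvision_version: str) -> bool:
    """True if torchvision's major.minor is the one paired with this PyTorch release."""
    expected = TORCHVISION_FOR_TORCH.get(major_minor(torch_version))
    return expected is not None and major_minor(torchvision_version) == expected


if __name__ == "__main__":
    print(versions_match("1.9.0", "0.10.0"))  # True
```

On Jetson Nano, call `versions_match(torch.__version__, torchvision.__version__)` after importing both packages.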

Yolo V4 Environment Construction

1. First clone darknet from GitHub:

git clone https://github.com/AlexeyAB/darknet.git

2. After downloading, modify the Makefile:

cd darknet
sudo vim Makefile

Change the values in the first four lines from 0 to 1:

GPU=1
CUDNN=1
CUDNN_HALF=1
OPENCV=1

3. The CUDA version and path must also be changed to your actual version and path; otherwise the compilation will fail:

Change NVCC=nvcc to NVCC=/usr/local/cuda-10.2/bin/nvcc

4. After the modification is complete, compile by entering in the terminal:

sudo make
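Steps 2 and 3 edit the darknet Makefile by hand. As a sketch, the same edits can be scripted in Python with regular expressions, assuming the flags appear as whole lines such as GPU=0 and NVCC=nvcc (check your copy of the Makefile); enable_makefile_flags and patch_makefile are hypothetical helpers.

```python
import re

FLAGS = ("GPU", "CUDNN", "CUDNN_HALF", "OPENCV")
NVCC_PATH = "/usr/local/cuda-10.2/bin/nvcc"  # adjust to your CUDA version


def enable_makefile_flags(text: str, flags=FLAGS, nvcc_path: str = NVCC_PATH) -> str:
    """Turn FLAG=0 lines into FLAG=1 and point NVCC at the full nvcc path."""
    for flag in flags:
        # Anchored to whole lines so that CUDNN=0 does not touch CUDNN_HALF=0.
        text = re.sub(r"^%s=0$" % re.escape(flag), flag + "=1", text, flags=re.MULTILINE)
    text = re.sub(r"^NVCC=nvcc$", "NVCC=" + nvcc_path, text, flags=re.MULTILINE)
    return text


def patch_makefile(path: str = "Makefile") -> None:
    """Rewrite the Makefile in place (run from the darknet directory)."""
    with open(path) as f:
        original = f.read()
    with open(path, "w") as f:
        f.write(enable_makefile_flags(original))


if __name__ == "__main__":
    sample = "GPU=0\nCUDNN=0\nCUDNN_HALF=0\nOPENCV=0\nAVX=0\nNVCC=nvcc\n"
    print(enable_makefile_flags(sample))
```

Running patch_makefile() before `sudo make` would replace the manual vim edits; other flags such as AVX are left untouched.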

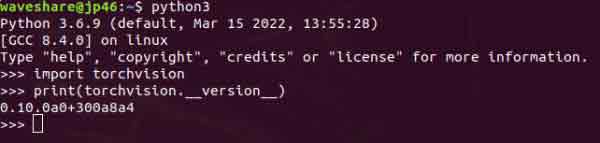

Inference with YOLOv4

Image Test

1. Test

./darknet detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights data/dog.jpg

2. To enter the image path interactively, run the test mode without an image argument; after it starts, it asks for the location of the image:

./darknet detector test ./cfg/coco.data ./cfg/yolov4.cfg ./yolov4.weights

Video Test

1. YOLOv4-tiny video detection (the repository downloaded from GitHub contains no video file, so upload the video you want to detect to the data folder):

./darknet detector demo cfg/coco.data cfg/yolov4-tiny.cfg yolov4-tiny.weights data/xxx.mp4

The frame rate is about 14 fps.

Live image test

1. Check the device number of the USB camera:

ls /dev/video*

2. Run real-time detection with YOLOv4-tiny or YOLOv4 (replace /dev/video0 with your camera device):

./darknet detector demo cfg/coco.data cfg/yolov4-tiny.cfg yolov4-tiny.weights /dev/video0
./darknet detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights /dev/video0

Hello AI World

Environment Construction

1. Install cmake and the required dependencies:

sudo apt-get update
sudo apt-get install git cmake libpython3-dev python3-numpy

2. Get the jetson-inference open source project:

git clone https://github.com/dusty-nv/jetson-inference
cd jetson-inference
git submodule update --init

3. Create a build folder and configure the project:

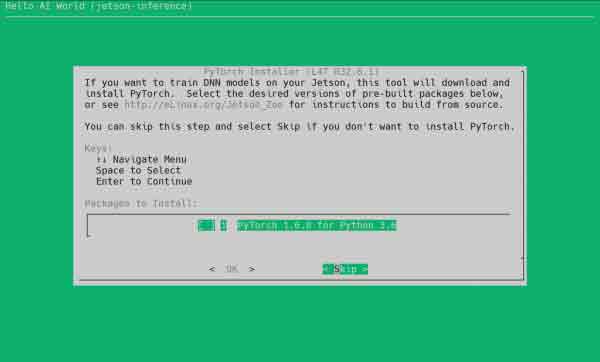

sudo mkdir build
cd build
sudo cmake ../

When the model download and PyTorch installation screens appear, choose to skip them (Quit and skip).

4. Download the models, place them in the "jetson-inference/data/networks" directory, and unzip them.

5. Copy the files to Jetson Nano with a USB flash drive or with MobaXterm (see MobaXterm File Transmission), then compile and install:

cd jetson-inference/build
sudo make
sudo make install

6. Install the V4L2 utilities, then list the formats supported by the camera:

sudo apt-get install v4l-utils
v4l2-ctl --list-formats-ext

DetectNet Runs Real-time Camera Detection

cd ~/jetson-inference/build/aarch64/bin/
./detectnet-camera

With TensorRT acceleration, the frame rate reaches about 24 fps.

./detectnet-camera --network=facenet # Run the face detection network
./detectnet-camera --network=multiped # Run the multi-class pedestrian/luggage detector
./detectnet-camera --network=pednet # Run the original single-class pedestrian detector
./detectnet-camera --network=coco-bottle # Detect bottles/soda cans in the camera view
./detectnet-camera --network=coco-dog # Detect dogs in the camera view

./detectnet-camera --network=facenet # Use FaceNet, default MIPI CSI camera (1280 x 720)
./detectnet-camera --camera=/dev/video1 --network=facenet # Use FaceNet, V4L2 camera /dev/video1 (1280 x 720)
./detectnet-camera --width=640 --height=480 --network=facenet # Use FaceNet, default MIPI CSI camera (640 x 480)

Hardware Control

The Jetson TX1, TX2, AGX Xavier, and Nano development boards include a 40-pin GPIO header, which is similar to the 40-pin header in the Raspberry Pi.

GPIO

1. To import the Jetson.GPIO module, please use:

import Jetson.GPIO as GPIO

2. Pin number:

# Use one of the following:
GPIO.setmode(GPIO.BOARD)
GPIO.setmode(GPIO.BCM)
GPIO.setmode(GPIO.CVM)
GPIO.setmode(GPIO.TEGRA_SOC)

mode = GPIO.getmode()

The returned mode is GPIO.BOARD, GPIO.BCM, GPIO.CVM, GPIO.TEGRA_SOC, or None.

3. If GPIO detects that a pin has been set to a non-default value, you will see a warning message. To disable warnings:

GPIO.setwarnings(False)

4. Set the channel:

# channel is a pin number (or name) in the numbering mode set above
GPIO.setup(channel, GPIO.IN)

GPIO.setup(channel, GPIO.OUT)

GPIO.setup(channel, GPIO.OUT, initial=GPIO.HIGH)

# add as many channels as needed; a tuple such as (18, 12, 13) also works
channels = [18, 12, 13]
GPIO.setup(channels, GPIO.OUT)

5. Input

GPIO.input(channel)

This returns GPIO.LOW or GPIO.HIGH.

6. Output: To set the value of a pin configured as an output, use:

GPIO.output(channel, state)

where state can be GPIO.LOW or GPIO.HIGH.

channels = [18, 12, 13]  # or use a tuple
GPIO.output(channels, GPIO.HIGH)  # or GPIO.LOW
# set the first channel to LOW and the rest to HIGH
GPIO.output(channels, (GPIO.LOW, GPIO.HIGH, GPIO.HIGH))

7. Clean up

At the end of the program, it's a good idea to clean up the channel so that all pins are set to their default state. To clean up all used channels, please call:

GPIO.cleanup()

GPIO.cleanup(chan1)  # clean up only chan1
GPIO.cleanup([chan1, chan2])  # clean up only chan1 and chan2
GPIO.cleanup((chan1, chan2))  # same as the previous statement

8. Jetson Board Information and Library Versions:

GPIO.JETSON_INFO

This provides a Python dictionary with the following keys: P1_REVISION, RAM, REVISION, TYPE, MANUFACTURER, and PROCESSOR. All values in the dictionary are strings except P1_REVISION, which is an integer.

GPIO.VERSION

This provides a string in the X.Y.Z version format.

9. Interrupt

In addition to polling, the library provides three additional methods to monitor input events:

GPIO.wait_for_edge(channel, GPIO.RISING)

# timeout is in milliseconds
GPIO.wait_for_edge(channel, GPIO.RISING, timeout=500)

The function returns the channel on which the edge was detected, or None if a timeout occurred.

# set rising edge detection on the channel
GPIO.add_event_detect(channel, GPIO.RISING)
run_other_code()
if GPIO.event_detected(channel):
    do_something()

As before, you can detect events for GPIO.RISING, GPIO.FALLING or GPIO.BOTH.

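A callback function can also be run when an edge is detected. Callbacks run in a separate thread, concurrently with the main program:

# define callback function
def callback_fn(channel):
    print("Callback called from channel %s" % channel)

# add rising edge detection
GPIO.add_event_detect(channel, GPIO.RISING, callback=callback_fn)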

More than one callback can be added to a channel:

def callback_one(channel):
    print("First Callback")

def callback_two(channel):
    print("Second Callback")

GPIO.add_event_detect(channel, GPIO.RISING)
GPIO.add_event_callback(channel, callback_one)
GPIO.add_event_callback(channel, callback_two)

In this case, the two callbacks run sequentially, not concurrently, because a single thread runs all the callback functions.
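The sequential behaviour described above can be modelled with a queue and one worker thread. This is a hypothetical model written to illustrate the idea, not Jetson.GPIO's internal code. The class CallbackDispatcher and its methods edge_detected, wait_idle, and stop are invented names. The method add_event_callback only mirrors the library call's role.

```python
import queue
import threading


class CallbackDispatcher:
    """Hypothetical model of one callback thread serving all edge events."""

    def __init__(self):
        self._callbacks = {}          # channel -> callbacks, in registration order
        self._events = queue.Queue()  # channels on which an edge was detected
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def add_event_callback(self, channel, fn):
        self._callbacks.setdefault(channel, []).append(fn)

    def edge_detected(self, channel):
        """Called by the (simulated) edge detector; never waits for callbacks."""
        self._events.put(channel)

    def wait_idle(self):
        """Block until every queued event has been handled."""
        self._events.join()

    def stop(self):
        self._events.put(None)  # shutdown sentinel
        self._worker.join()

    def _run(self):
        while True:
            channel = self._events.get()
            try:
                if channel is None:
                    return
                # All callbacks for this event run one after another on this
                # single thread, so a slow callback delays the ones after it.
                for fn in list(self._callbacks.get(channel, [])):
                    fn(channel)
            finally:
                self._events.task_done()
```

In this model, a slow callback holds up every callback queued behind it. This is a good reason to keep callbacks short.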

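To collapse a burst of contact bounces into a single event, so that the callback is not called several times, you can set an optional debounce time:

# bouncetime set in milliseconds
GPIO.add_event_detect(channel, GPIO.RISING, callback=callback_fn, bouncetime=200)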
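The idea behind a debounce time can be shown with a small function. It keeps an event only if it arrives at least bouncetime milliseconds after the last event it accepted. This is one common approach, sketched under that assumption. It is not necessarily the exact rule Jetson.GPIO applies, and the function name debounce is hypothetical.

```python
def debounce(event_times_ms, bouncetime_ms):
    """Keep only events at least `bouncetime_ms` after the last accepted one.

    event_times_ms must be sorted in ascending order.
    """
    accepted = []
    for t in event_times_ms:
        # Accept the first event, then ignore anything inside the window.
        if not accepted or t - accepted[-1] >= bouncetime_ms:
            accepted.append(t)
    return accepted
```

With bouncetime_ms=200, edges at 0, 50, 150, 250, and 600 ms collapse to [0, 250, 600]. The edges at 50 and 150 ms are treated as bounces of the first press.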

If edge detection is no longer needed, it can be removed as follows:

GPIO.remove_event_detect(channel)

10. Check GPIO channel functionality

This function lets you check the current function of a GPIO channel:

GPIO.gpio_function(channel)

It returns either GPIO.IN or GPIO.OUT.

Turn on LED

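The following example blinks an LED connected to physical pin 11 (BOARD numbering). It toggles the LED every 0.5 s until you press Ctrl+C:

import Jetson.GPIO as GPIO
import time

LED_Pin = 11  # physical pin 11 on the 40-pin header (BOARD numbering)

GPIO.setwarnings(False)
GPIO.setmode(GPIO.BOARD)
GPIO.setup(LED_Pin, GPIO.OUT)

try:
    while True:
        GPIO.output(LED_Pin, GPIO.HIGH)  # LED on
        time.sleep(0.5)
        GPIO.output(LED_Pin, GPIO.LOW)  # LED off
        time.sleep(0.5)
finally:
    GPIO.cleanup()  # release the pin on exit, e.g. after Ctrl+C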

Demo Use

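Clone the library in your home directory, move it to /opt/nvidia/, and install it:

cd ~
git clone https://github.com/NVIDIA/jetson-gpio
sudo mv ~/jetson-gpio /opt/nvidia/
cd /opt/nvidia/jetson-gpio
sudo python3 setup.py install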

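Create the gpio group and add your user to it:

sudo groupadd -f -r gpio
sudo usermod -a -G gpio user_name

Note: replace user_name with your own username, for example waveshare. Log out and back in (or reboot) for the new group membership to take effect.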
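To confirm that the usermod step worked, you can query the system group database from Python. The sketch below uses only the standard grp, pwd, and os modules and is Linux/Unix only. The helper name user_in_group is hypothetical. Because it reads the group database, it reports membership even before you log in again. The running shell gains the group only after a new login.

```python
import grp
import os
import pwd


def user_in_group(group_name, username=None):
    """Return True if `username` (default: the current user) is in `group_name`."""
    try:
        gid = grp.getgrnam(group_name).gr_gid
    except KeyError:
        return False  # the group does not exist yet (groupadd not run)
    if username is None:
        username = pwd.getpwuid(os.getuid()).pw_name
    primary_gid = pwd.getpwnam(username).pw_gid
    # getgrouplist() returns the primary group plus all supplementary groups.
    return gid in os.getgrouplist(username, primary_gid)
```

For example, `user_in_group("gpio")` should return True for your user after the commands above have been run.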

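Install the udev rules that give the gpio group access to the GPIO pins, then reload them:

sudo cp /opt/nvidia/jetson-gpio/lib/python/Jetson/GPIO/99-gpio.rules /etc/udev/rules.d/
sudo udevadm control --reload-rules && sudo udevadm trigger

Finally, run one of the bundled samples:

cd /opt/nvidia/jetson-gpio/samples/
sudo python3 simple_input.py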

IIC

1. First install i2c-tools and the Python SMBus bindings by entering the following in the terminal:

sudo apt-get update
sudo apt-get install -y i2c-tools
sudo apt-get install -y python3-smbus

2. To check the installation, enter the following in the terminal:

apt-cache policy i2c-tools

If the output is as follows, the installation is successful:

i2c-tools:
  Installed: 4.0-2
  Candidate: 4.0-2
  Version table:
 *** 4.0-2 500
        500 http://ports.ubuntu.com/ubuntu-ports bionic/universe arm64 Packages
        100 /var/lib/dpkg/status
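If you want a script to perform the same check, the sketch below runs apt-cache policy and extracts the "Installed:" field. The function names installed_version and check_package are hypothetical. The code assumes a Debian/Ubuntu system such as JetPack. It forces LC_ALL=C so that the field names are in English.

```python
import os
import re
import subprocess


def installed_version(policy_output):
    """Return the 'Installed:' version from `apt-cache policy` output.

    Returns None when the package is not installed ("Installed: (none)").
    """
    match = re.search(r"^\s*Installed:\s*(\S+)", policy_output, re.MULTILINE)
    if match is None or match.group(1) == "(none)":
        return None
    return match.group(1)


def check_package(name):
    """Run `apt-cache policy <name>` (Debian/Ubuntu only) and parse the result."""
    env = dict(os.environ, LC_ALL="C")  # force English field names
    result = subprocess.run(
        ["apt-cache", "policy", name],
        capture_output=True, text=True, check=False, env=env,
    )
    return installed_version(result.stdout)
```

For the output shown above, `check_package("i2c-tools")` would return "4.0-2".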

i2cdetect

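Scan I2C bus 0 for devices. The -y flag skips the confirmation prompt, -r probes with SMBus "read byte" commands, and -a scans all addresses from 0x00 to 0x7F:

sudo i2cdetect -y -r -a 0

Dump the registers of the device at address 0x68 on bus 0:

sudo i2cdump -y 0 0x68

Write 0x55 to register 0x90 of the device at address 0x68 on bus 0:

sudo i2cset -y 0 0x68 0x90 0x55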

Parameters of the i2cset command:

| Parameter | Meaning |
|---|---|
| 0 | I2C bus number (/dev/i2c-0) |
| 0x68 | Address of the I2C device |
| 0x90 | Register address |
| 0x55 | Data written to the register |

| Parameter | Meaning |
|---|---|
| 0 | I2C bus number (/dev/i2c-0) |
| 0x68 | Address of the I2C device |
| 0x90 | Register address |
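The same register operations can be done from Python with the python3-smbus package installed in step 1, whose SMBus class provides write_byte_data() and read_byte_data(). In the sketch below, the helper names are hypothetical. The bus object is passed in so that the logic can be tried without hardware. The bus number 0, address 0x68, register 0x90, and value 0x55 are only the example values from the commands above. Writing to a real device's registers can change its configuration.

```python
def write_register(bus, address, register, value):
    """Equivalent of: i2cset -y <bus> <address> <register> <value>."""
    bus.write_byte_data(address, register, value)


def read_register(bus, address, register):
    """Read one byte from `register` of the device at `address`."""
    return bus.read_byte_data(address, register)


def dump_registers(bus, address):
    """Rough equivalent of i2cdump: read registers 0x00-0xFF, 16 per row."""
    rows = []
    for base in range(0, 256, 16):
        values = [bus.read_byte_data(address, base + i) for i in range(16)]
        rows.append(f"{base:02x}: " + " ".join(f"{v:02x}" for v in values))
    return rows


def open_bus(bus_number):
    """Open /dev/i2c-<bus_number> using the python3-smbus package."""
    import smbus  # imported here so the helpers above work without it
    return smbus.SMBus(bus_number)
```

On the board, you might run `bus = open_bus(0)` and then `write_register(bus, 0x68, 0x90, 0x55)` with sudo, as the shell commands above do.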