
RPI-HMI-080D-101D User Guide

Features

- 8-inch / 10.1-inch DSI touch screen with ten-point capacitive touch

- IPS display panel with a hardware resolution of 1280×800

- Optical bonding process for clearer images and reduced light reflection

- Toughened glass capacitive touch panel with hardness up to 6H

- Dual 4Kp60 HDMI display outputs

- Integrated 5-megapixel front camera

- Supports all-in-one installation with a Raspberry Pi 5 or Raspberry Pi 4

- Driven directly through the Raspberry Pi's DSI interface, with a refresh rate of up to 60Hz

- Works without a driver on Raspberry Pi OS Bookworm when used with a Raspberry Pi

- Supports software control of backlight brightness

- Aluminum alloy case with passive cooling design

- Supports embedded mounting and standard VESA bracket mounting

Parameters

| Parameter | Description |
|---|---|
| CPU | Broadcom BCM2712, quad-core Cortex-A76 (ARM v8) 64-bit SoC, clocked at 2.4GHz |
| GPU | OpenGL ES 3.1 & Vulkan 1.0 |
| CAM | Integrated 5-megapixel front camera |
| USB | 2 × USB 2.0 ports (dual-layer Type-A), up to 480Mbps each; 2 × USB 3.0 ports (dual-layer Type-A), up to 5Gbps each |
| ETH | Gigabit Ethernet |
| Wi-Fi | Dual-band 802.11ac Wi-Fi |
| BLE | Bluetooth 5.0 / Bluetooth Low Energy (BLE) |
| HDMI | 2 standard HDMI ports, supporting 4K 60Hz |
| Storage | 64GB system card |
| Size | 8.0" / 10.1" |
| Resolution | 1280 × 800 |
| Refresh rate | 60Hz |
| Viewing angle | 178° |
| Max brightness | 300cd/m² |
| Color gamut | 50% NTSC |
| Backlight adjustment | Software dimming |

Electrical Specifications

| Parameters | Minimum Value | Standard Value | Maximum Value | Unit | Note |
|---|---|---|---|---|---|
| Input voltage | 4.75 | 5.00 | 5.25 | V | Note 1 |
| Input current | 5 | 5 | TBD | A | Note 2 |
| Operating temperature | 0 | 25 | 60 | ℃ | Note 3 |
| Storage temperature | -10 | 25 | 70 | ℃ | Note 3 |

- Note 1: Input voltages above the maximum, or improper operation, may permanently damage the device.

- Note 2: The power supply must be able to deliver at least 5A. Otherwise the device may fail to start or display abnormally, and remaining in that abnormal state for a long time may permanently damage it.

- Note 3: Do not store the display panel in a hot, humid environment for long periods, and operate it only within the limits above; otherwise it may be damaged.
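The electrical table reduces to simple arithmetic: power is voltage times current, so at the standard 5.00 V and 5 A the supply must provide 25 W, and the permitted input band is 4.75 V to 5.25 V. The POSIX shell sketch below encodes those two checks. The function names are hypothetical helpers for illustration, not part of any Waveshare tool, and the voltage you pass in is a value you measure yourself (for example with a multimeter).

```shell
# Hypothetical helpers illustrating the electrical limits in the table above.

# power_watts VOLTS AMPS: print the power (W) a supply must deliver, P = V * I
power_watts() {
    awk -v v="$1" -v a="$2" 'BEGIN { printf "%.2f\n", v * a }'
}

# in_voltage_range VOLTS: succeed only if 4.75 V <= VOLTS <= 5.25 V (Note 1)
in_voltage_range() {
    awk -v v="$1" 'BEGIN { exit !(v >= 4.75 && v <= 5.25) }'
}

power_watts 5.00 5    # standard input at 5 A: 25.00
power_watts 4.75 5    # minimum voltage at 5 A: 23.75
in_voltage_range 5.10 && echo "5.10 V is within the rated input range"
```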

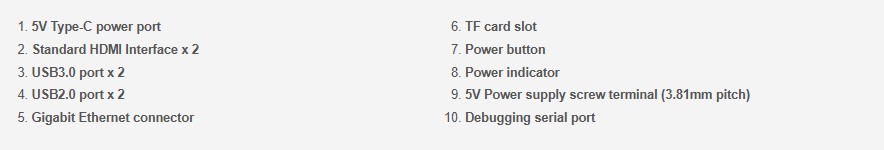

Interfaces

Assembly Guide

If you purchased an all-in-one version (PI5-HMI-080D, PI5-HMI-101D, PI4-HMI-080D, or PI4-HMI-101D), no hardware assembly is needed; the device is ready to use as soon as it is powered on.

The following are the hardware assembly steps for the PI5-HMI-080D:

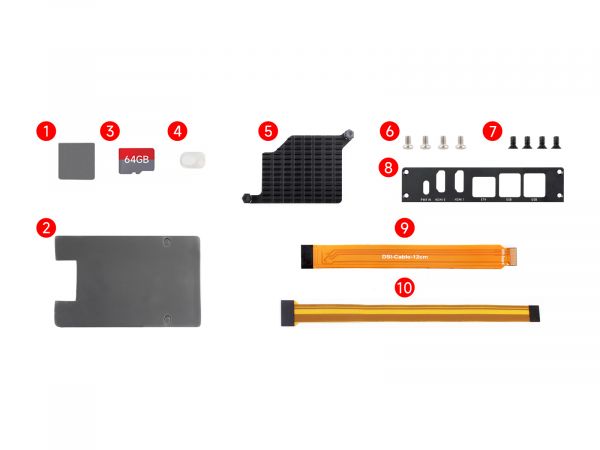

Please prepare the following accessories:

1. First, remove the four screws on the metal back cover and take the cover off.

2. Attach the thermal tapes (① and ②) and the heatsink (⑤) to the front and back of the Raspberry Pi 5, and insert the matching 64GB system card (③).

3. Connect the Raspberry Pi 5 board to the HAT, align the Raspberry Pi's mounting holes with the four screw holes on the back of the screen, and secure them with M2.5×4mm silver metal screws.

4. Use the CSI cable (⑩) to connect the CSI interface to the Raspberry Pi's CAM/DISP 1 port, and use the DSI cable (⑨) to connect the DSI interface to the CAM/DISP 0 port.

5. Install the metal front panel (⑧), fit the silicone key (④) inside the case, and secure them with M2.5×5mm black metal screws. (The front cover hole positions differ between the PI4 and PI5 versions, so make sure you use the correct cover.)

6. Secure the side cover with M2.5×5mm black metal screws.

7. Finally, install the green terminal on the rear cover to complete the hardware assembly.

Software Settings

Bookworm System Configuration

If you are using the pre-installed system card, no software configuration is needed: insert the card and power on the device, and it boots directly to the desktop. The default username is pi and the password is raspberry.

Install the System and Driver Manually

- 1. Connect the TF card to your PC, then download Raspberry Pi Imager and use it to flash the system image.

- 2. After flashing completes, open the config.txt file in the root directory of the TF card, add the following lines for your board at the end of the file, then save the file and safely eject the TF card.

For Pi 4

dtoverlay=ov5647

dtoverlay=vc4-kms-dsi-waveshare-panel,10_1_inch

For Pi 5

dtoverlay=ov5647

dtoverlay=vc4-kms-dsi-waveshare-panel,10_1_inch,dsi0

- 3. Insert the TF card into the Raspberry Pi and power it on. The display and touch screen are recognized automatically and ready to use.
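If you prefer to script step 2 (for example when preparing several cards), the POSIX shell sketch below appends each overlay line only when an identical line is not already present, so running it twice does not duplicate entries. The function name append_once is hypothetical, and the mount point in the example comment is an assumption: where the TF card's boot partition appears depends on your PC.

```shell
# append_once FILE LINE...: append each LINE to FILE unless FILE already
# contains an identical line (hypothetical helper, not a Waveshare tool).
append_once() {
    file=$1
    shift
    # If the file does not end with a newline, add one so appended
    # lines are not glued onto the last existing line.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    for line in "$@"; do
        # -x: whole-line match, -F: literal string (commas and dots are not regex)
        grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
    done
}

# Example for Pi 5 (the mount point is an assumption; adjust it):
# append_once "/media/$USER/bootfs/config.txt" dtoverlay=ov5647 \
#     "dtoverlay=vc4-kms-dsi-waveshare-panel,10_1_inch,dsi0"
```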

Buster and Bullseye System Settings

Method 1: Install the Driver Manually

1. Download the latest system image from the official Raspberry Pi website to your PC and extract the .img file from the archive.

2. Connect the TF card to your PC and format it with SDFormatter.

3. Open Win32DiskImager, select the image prepared in step 1, and click Write to flash it.

4. After flashing completes, insert the TF card into the Raspberry Pi, power it on, and log in to a terminal on the Raspberry Pi (either on an HDMI display or remotely over SSH). Then run:

#Step 1: Download the Waveshare-DSI-LCD driver and enter its folder

git clone https://github.com/waveshare/Waveshare-DSI-LCD

cd Waveshare-DSI-LCD

#Step 2: Run uname -a to check the kernel version, then cd into the matching directory. For kernel 6.1.21, run:

cd 6.1.21

#Step 3: Check whether your system is 32-bit or 64-bit, then enter the 32 directory (32-bit) or the 64 directory (64-bit)

cd 32

#cd 64

#Step 4: Run the screen driver

sudo bash ./WS_xinchDSI_MAIN.sh 101C I2C0

#Step 5: Wait a few seconds. When the driver installation completes without errors, reboot to load the DSI driver

sudo reboot

#Note: The steps above require the Raspberry Pi to have a working internet connection

5. After the system reboots, the display and touch should work normally.
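Steps 2 and 3 pick a directory from the kernel version and the system's bit width. The sketch below derives that path. It assumes the repository layout shown above (kernel version, then 32 or 64, e.g. 6.1.21/32) and assumes the bit width to use is the kernel's, as reported by uname -m; driver_dir is a hypothetical helper name. The repository may not contain a directory for every kernel, so the example checks that the path exists before changing into it.

```shell
# driver_dir KERNEL_RELEASE MACHINE: print the driver directory to cd into,
# e.g. "6.1.21-v8+" "aarch64" -> "6.1.21/64" (hypothetical helper)
driver_dir() {
    version=${1%%[-+]*}          # strip a "-v8+" / "-v7l+" style suffix
    case "$2" in
        aarch64)       bits=64 ;;
        armv6l|armv7l) bits=32 ;;
        *) echo "unrecognised machine type: $2" >&2; return 1 ;;
    esac
    echo "$version/$bits"
}

# Usage inside the Waveshare-DSI-LCD checkout:
# dir=$(driver_dir "$(uname -r)" "$(uname -m)") && [ -d "$dir" ] && cd "$dir"
```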

Method 2: Burn the Pre-installed Image

1. Download the pre-installed image for your Raspberry Pi model and unzip it to get the .img file.

Raspberry Pi 4B/CM4 version download: Waveshare DSI LCD - Pi4 pre-installed image

2. Connect the TF card to your PC and format it with SDFormatter.

3. Open Win32DiskImager, select the image prepared in step 1, and click Write to flash it.

4. After flashing completes, open the config.txt file in the root directory of the TF card, add the following lines under [all], then save the file and safely eject the TF card.

dtoverlay=WS_xinchDSI_Screen,SCREEN_type=8,I2C_bus=10

dtoverlay=WS_xinchDSI_Touch,invertedx,swappedxy,I2C_bus=10

5. Start the Raspberry Pi. The screen and touch should work normally after about 30 seconds.

Adjusting Backlight Brightness

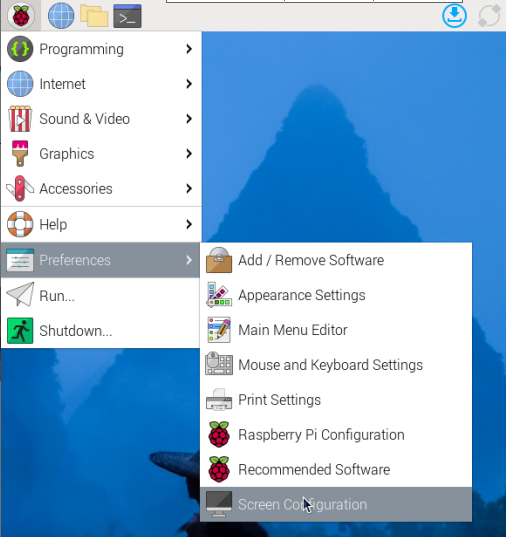

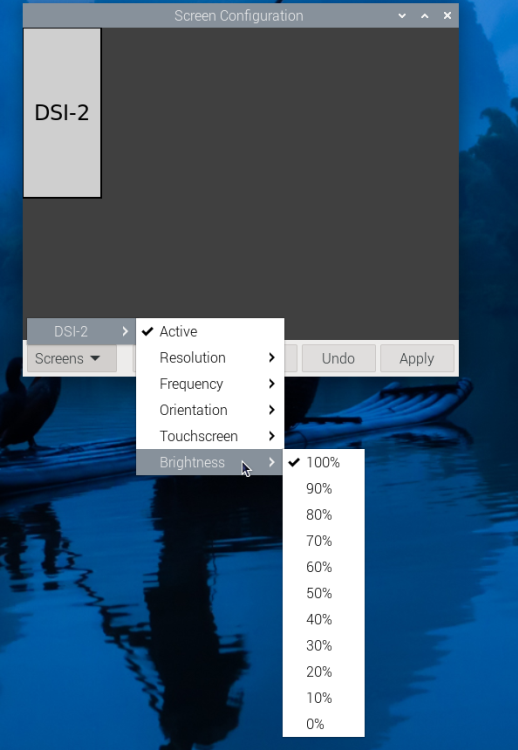

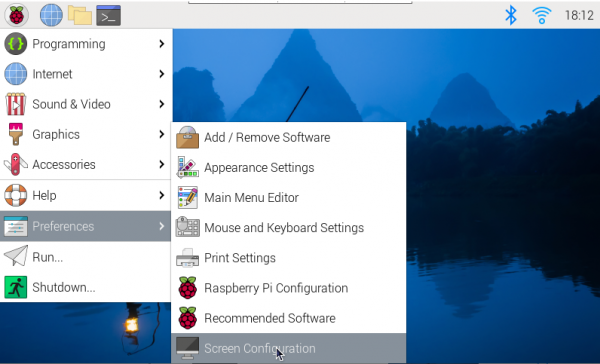

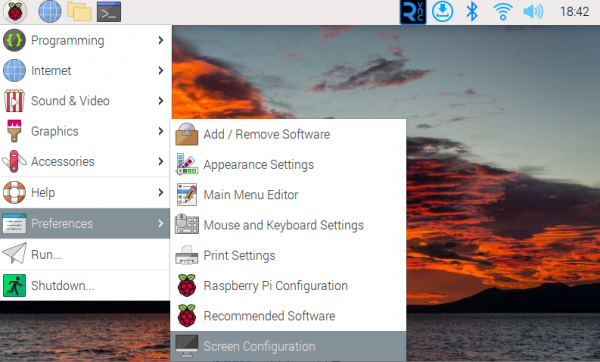

- 1. Open the "Screen Configuration" application.

- 2. Go to "Screen" -> "DSI-2" -> "Brightness", select the backlight level you want, and click "Apply".

Waveshare also provides a brightness demo (for Bookworm and Bullseye systems only), which you can download, install, and run as follows:

wget https://files.waveshare.com/wiki/common/Brightness.zip

unzip Brightness.zip

cd Brightness

sudo chmod +x install.sh

./install.sh

After installation, open the demo from Start Menu -> Accessories -> Brightness.

You can also control the backlight brightness from the terminal:

echo X | sudo tee /sys/class/backlight/*/brightness

where X is a value from 0 to 255: 0 is the darkest backlight and 255 the brightest. For example:

echo 100 | sudo tee /sys/class/backlight/*/brightness

echo 0 | sudo tee /sys/class/backlight/*/brightness

echo 255 | sudo tee /sys/class/backlight/*/brightness
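A raw 0 to 255 value is easy to misjudge, and the Linux backlight class also exposes a max_brightness file next to brightness. The POSIX shell sketch below sets the backlight by percentage: it reads max_brightness when present (falling back to 255, the range stated above), clamps the percentage to 0 to 100, and writes the result to every device under /sys/class/backlight, like the glob in the commands above. The function names and the BACKLIGHT_ROOT override (used only so the sketch can be tested against a scratch directory) are hypothetical, and writing to sysfs needs root.

```shell
# Hypothetical helpers: set the backlight by percentage instead of 0-255.
BACKLIGHT_ROOT=${BACKLIGHT_ROOT:-/sys/class/backlight}

# percent_to_level PERCENT MAX: integer level in 0..MAX, PERCENT clamped to 0..100
percent_to_level() {
    pct=$1
    [ "$pct" -lt 0 ] && pct=0
    [ "$pct" -gt 100 ] && pct=100
    echo $(( pct * $2 / 100 ))
}

# set_backlight_percent PERCENT: write the level to every backlight device
set_backlight_percent() {
    for dev in "$BACKLIGHT_ROOT"/*; do
        [ -f "$dev/brightness" ] || continue
        max=$(cat "$dev/max_brightness" 2>/dev/null || echo 255)
        percent_to_level "$1" "$max" > "$dev/brightness"
    done
}
```

For example, after saving these functions to a file (the name backlight.sh is hypothetical), sudo sh -c '. ./backlight.sh && set_backlight_percent 40' sets a 0 to 255 backlight to level 102.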

Display Rotation

Bookworm Display Rotation

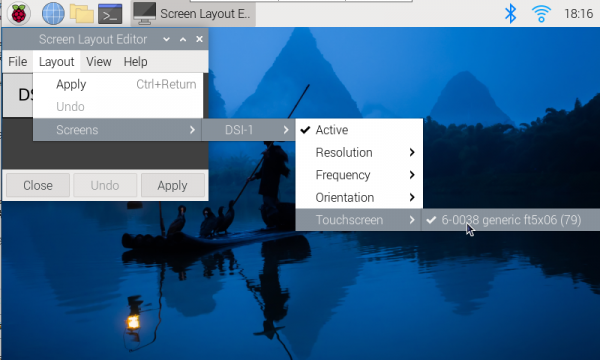

GUI interface rotation

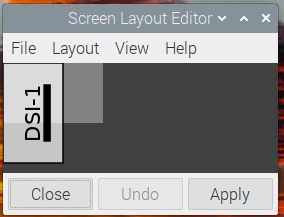

- 1. Open the "Screen Configuration" application.

- 2. Go to "Screen" -> "DSI-1" -> "Touchscreen" and select "6-0038 generic ft5x06(79)".

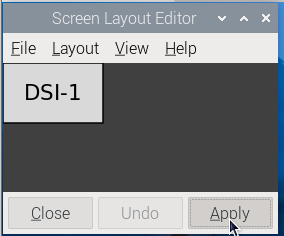

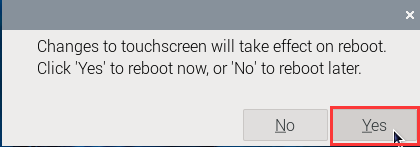

- 3. Click "Apply" and close the current window. Restart when prompted, then tap Done on the touch screen.

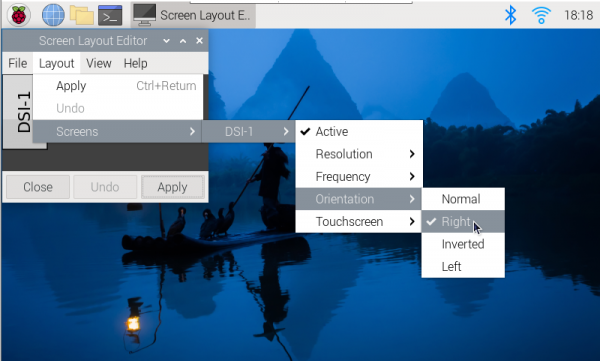

- 4. Go to "Screen" -> "DSI-1" -> "Orientation", select the rotation you need, and click "Apply" to rotate the display and touch together.


Note: Only Bookworm supports rotating the display and touch together as described above. On Bullseye and Buster, rotate the display first and then configure touch rotation separately.

Lite version display rotation

- 1. Edit the /boot/firmware/cmdline.txt file:

sudo nano /boot/firmware/cmdline.txt

- 2. Add the display rotation parameter at the beginning of cmdline.txt. The whole file must stay on a single line, with parameters separated by spaces:

video=DSI-1:800x480M@60,rotate=90

Set rotate to 90, 180, or 270 to choose the rotation angle.

- 3. Save the file and reboot:

sudo reboot
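Because cmdline.txt must stay on one line, editing it by hand is error-prone. The sketch below rewrites the file with the video= parameter from step 2 prepended and drops any earlier video=DSI-1: entry, so it can be rerun with a different angle. The function name and argument order are hypothetical, and the mode string is copied from step 2 as written; change it if your panel needs a different mode. Point it at /boot/firmware/cmdline.txt on Bookworm or /boot/cmdline.txt on Bullseye/Buster, run it as root, and keep a backup of the file.

```shell
# set_dsi_rotation FILE ANGLE: put "video=DSI-1:...,rotate=ANGLE" at the start
# of the single-line cmdline.txt (hypothetical helper).
set_dsi_rotation() {
    file=$1 angle=$2
    case "$angle" in
        90|180|270) ;;
        *) echo "rotate must be 90, 180 or 270" >&2; return 1 ;;
    esac
    # Split the single line into words, drop any old DSI-1 video= entry, rejoin
    rest=$(head -n 1 "$file" | tr ' ' '\n' | grep -v '^video=DSI-1:' | paste -sd ' ' -)
    printf 'video=DSI-1:800x480M@60,rotate=%s %s\n' "$angle" "$rest" > "$file"
}

# Example: sudo cp /boot/cmdline.txt /boot/cmdline.txt.bak, then run
# set_dsi_rotation /boot/cmdline.txt 90 in a root shell
```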

Bullseye/Buster Display Rotation

GUI interface rotation

- 1. Open the "Screen Configuration" application;

- 2. Go to "Screen" -> "DSI-1" -> "Orientation", select the rotation you need, and click "Apply" to rotate the display.

Lite version display rotation

- 1. Edit the /boot/cmdline.txt file:

sudo nano /boot/cmdline.txt

- 2. Add the display rotation parameter at the beginning of cmdline.txt. The whole file must stay on a single line, with parameters separated by spaces:

video=DSI-1:800x480M@60,rotate=90

Set rotate to 90, 180, or 270 to choose the rotation angle.

- 3. Save the file and reboot:

sudo reboot

Stretch/Jessie Display Rotation

Older systems that do not use the vc4-kms-v3d or vc4-fkms-v3d driver can rotate the display as follows:

- 1. Add the following line to config.txt (located in the root directory of the TF card, i.e. the /boot folder):

display_rotate=1

where 1 rotates the display by 90°, 2 by 180°, and 3 by 270°.

- 2. Save the changes and reboot the Raspberry Pi:

sudo reboot

Touch Rotation

Bookworm Touch

If you rotate the display through the graphical interface, tick "Touchscreen" in the Screen Configuration window so that touch rotates with the display; see Display Rotation above for how to rotate the screen. To rotate touch from the command line instead, follow these steps:

1. Create a new file named 99-waveshare-touch.rules

sudo nano /etc/udev/rules.d/99-waveshare-touch.rules

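2. Add the line for your rotation angle. The 180° and 270° lines below are commented out; uncomment the one you need and comment out the 90° line: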

#90°:

ENV{ID_INPUT_TOUCHSCREEN}=="1", ENV{LIBINPUT_CALIBRATION_MATRIX}="0 -1 1 1 0 0"

#180°:

#ENV{ID_INPUT_TOUCHSCREEN}=="1", ENV{LIBINPUT_CALIBRATION_MATRIX}="-1 0 1 0 -1 1"

#270°:

#ENV{ID_INPUT_TOUCHSCREEN}=="1", ENV{LIBINPUT_CALIBRATION_MATRIX}="0 1 0 -1 0 1"

3. Save and reboot

sudo reboot
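The three rules above differ only in the calibration matrix. The sketch below prints the rule for a given angle, using exactly the matrices listed above, so it can be piped into the rules file instead of edited by hand. touch_rule_for_angle is a hypothetical helper name.

```shell
# touch_rule_for_angle ANGLE: print the udev rule for 90, 180 or 270 degrees
# (hypothetical helper; matrices copied from the rules above)
touch_rule_for_angle() {
    case "$1" in
        90)  matrix="0 -1 1 1 0 0" ;;
        180) matrix="-1 0 1 0 -1 1" ;;
        270) matrix="0 1 0 -1 0 1" ;;
        *) echo "angle must be 90, 180 or 270" >&2; return 1 ;;
    esac
    printf 'ENV{ID_INPUT_TOUCHSCREEN}=="1", ENV{LIBINPUT_CALIBRATION_MATRIX}="%s"\n' "$matrix"
}

# Example:
# touch_rule_for_angle 90 | sudo tee /etc/udev/rules.d/99-waveshare-touch.rules
# sudo reboot
```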

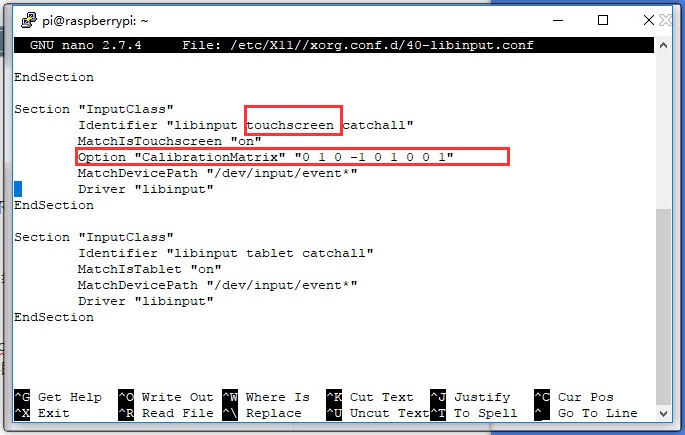

Bullseye/Buster Touch

On some systems, the touch direction no longer matches the display after the display is rotated. To rotate touch input accordingly:

1. Install libinput

sudo apt-get install xserver-xorg-input-libinput

If you are using Ubuntu or a Jetson Nano, install this package instead:

sudo apt install xserver-xorg-input-synaptics

2. Create the xorg.conf.d directory under /etc/X11 (if the directory already exists, proceed directly to step 3).

sudo mkdir /etc/X11/xorg.conf.d

3. Copy the 40-libinput.conf file to the newly created directory

sudo cp /usr/share/X11/xorg.conf.d/40-libinput.conf /etc/X11/xorg.conf.d/

4. Edit the file:

sudo nano /etc/X11/xorg.conf.d/40-libinput.conf

#Find the touchscreen section, add the line for your rotation angle in it, and save the file

#90° right touch rotation:

Option "CalibrationMatrix" "0 1 0 -1 0 1 0 0 1"

#180° inverted touch rotation:

#Option "CalibrationMatrix" "-1 0 1 0 -1 1 0 0 1"

#270° left touch rotation:

#Option "CalibrationMatrix" "0 -1 1 1 0 0 0 0 1"

The line should be placed in the touchscreen section, similar to the position shown in the image.

5. Reboot the Raspberry Pi

sudo reboot

After the reboot, touch input is rotated to match the display.
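Step 4 can also be scripted. The sketch below assumes the copied 40-libinput.conf contains the stock touchscreen section whose Identifier is "libinput touchscreen catchall" (check your copy first). It inserts the CalibrationMatrix option listed above for the chosen angle just before that section's EndSection, replacing any CalibrationMatrix line already in the section. add_touch_calibration is a hypothetical helper name, and it must be run as root.

```shell
# add_touch_calibration FILE ANGLE: set the CalibrationMatrix option in the
# libinput touchscreen section of FILE (hypothetical helper)
add_touch_calibration() {
    file=$1
    case "$2" in
        90)  matrix="0 1 0 -1 0 1 0 0 1" ;;
        180) matrix="-1 0 1 0 -1 1 0 0 1" ;;
        270) matrix="0 -1 1 1 0 0 0 0 1" ;;
        *) echo "angle must be 90, 180 or 270" >&2; return 1 ;;
    esac
    awk -v opt="        Option \"CalibrationMatrix\" \"$matrix\"" '
        # Track whether we are inside the libinput touchscreen section
        /Identifier "libinput touchscreen catchall"/ { in_touch = 1 }
        # Drop any CalibrationMatrix line already present in that section
        in_touch && /^[ \t]*Option "CalibrationMatrix"/ { next }
        # Emit the new option just before the section ends
        in_touch && /^[ \t]*EndSection/ { print opt; in_touch = 0 }
        { print }
    ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Example: add_touch_calibration /etc/X11/xorg.conf.d/40-libinput.conf 90
```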

Remote Login Raspberry Pi

Configure Wi-Fi

- On Raspberry Pi devices that support the 5GHz band (e.g. Pi 3B+, Pi 4, CM4, Pi 400), you must set the Wi-Fi country code before the Wi-Fi network can be used normally.

1. Open a terminal, enter sudo raspi-config, select Localisation Options > WLAN Country, and press Enter.

2. Choose your country from the list (e.g. CN for China) and confirm with OK. If you don't know your country code, you can look it up on Wikipedia.

3. Return to the main menu and select Finish. When prompted to reboot, select Yes and wait for the Raspberry Pi to restart.

4. You can now select your Wi-Fi network on the Raspberry Pi and enter the password to connect.

Find Device IP

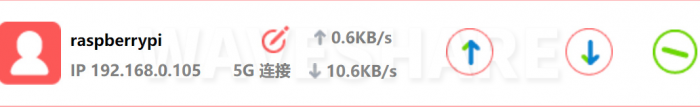

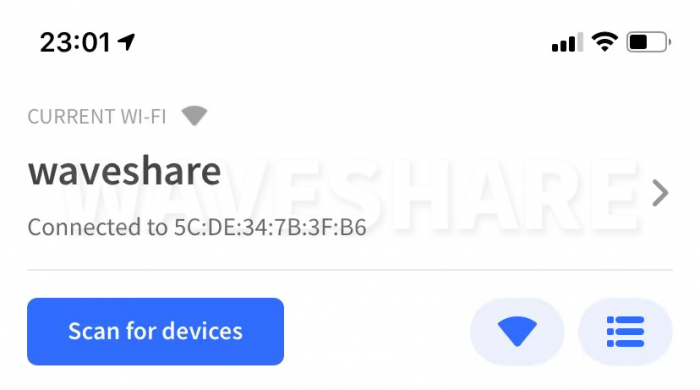

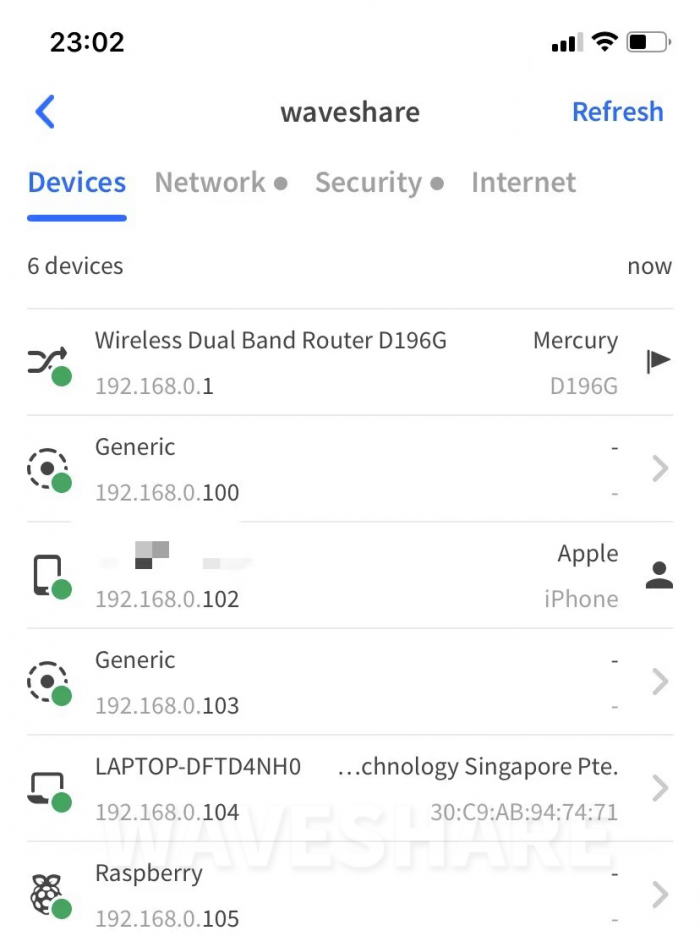

Remote login requires the Raspberry Pi's IP address. After configuring Wi-Fi or connecting a network cable, use one of the following methods to find it.

View IP Address on Desktop

If you use a desktop operating system, hovering the mouse over the network icon in the upper right corner will display the name of the current network and the IP address.

View on the Router

- Log in to your router's management interface to view the connected devices and their IP addresses.

Use a Mobile App to Find the Address

- Search for Fing in your phone's app store. It is a free network scanner for smartphones that scans the current network and lists all devices with their IP addresses.

- Note: Your phone and Raspberry Pi must be on the same network, so connect your phone to the correct wireless network.

- Open Fing and tap the device scan button in the upper-left corner of the screen.

- After a few seconds, Fing lists all devices connected to the network.

- In this example, the Raspberry Pi's IP address is 192.168.0.105.

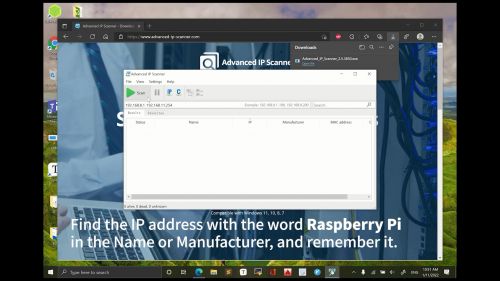

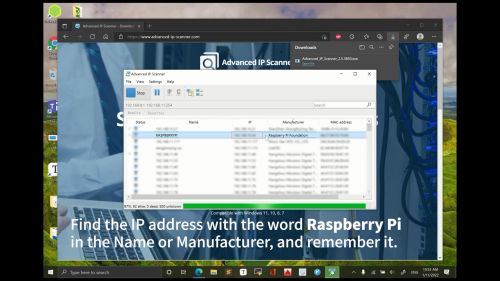

Use Advanced IP Scanner

- You can use a LAN IP scanner; Advanced IP Scanner is used here as an example.

- Run Advanced IP Scanner.

- Click Scan to scan the IP addresses on the current LAN.

- Record every IP address whose Manufacturer column contains "Raspberry Pi".

- Power on the device and make sure it is connected to the network.

- Click Scan again.

- Exclude the previously recorded "Raspberry Pi" addresses; the one that remains is your Raspberry Pi's IP address.
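The before/after comparison above is a set difference. If you save each scan's addresses to a text file, one address per line, the POSIX shell sketch below prints the addresses that appear only in the second scan. new_hosts and the file names are hypothetical, and the nmap lines in the comments assume nmap's grepable output format.

```shell
# new_hosts BEFORE AFTER: print addresses listed in AFTER but not in BEFORE
# (one address per line; hypothetical helper)
new_hosts() {
    # -v invert, -x whole line, -F literal: dots in IPs are not regex wildcards
    grep -vxF -f "$1" "$2" | sort -u
}

# The lists could come from nmap, captured before and after powering on:
# nmap -sn 192.168.0.0/24 -oG - | awk '/Status: Up/ {print $2}' > before.txt
# ... power on the Raspberry Pi and wait for it to join the network ...
# nmap -sn 192.168.0.0/24 -oG - | awk '/Status: Up/ {print $2}' > after.txt
# new_hosts before.txt after.txt
```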

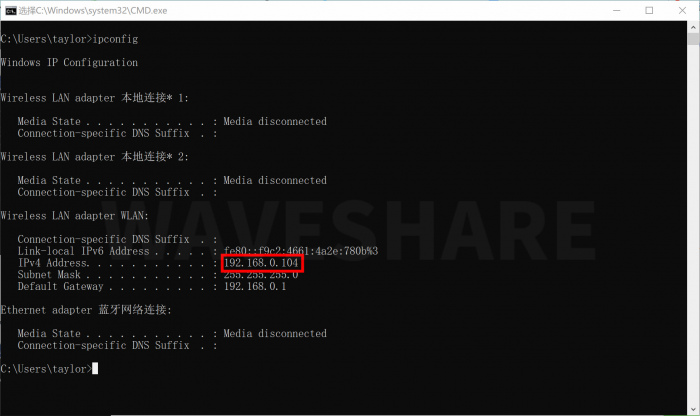

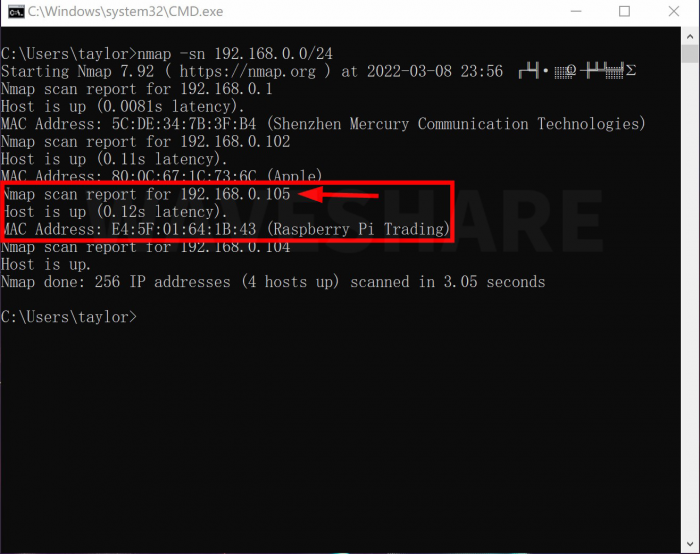

Use nmap Command to Obtain

- Download and install nmap first; otherwise the nmap command will not be recognized.

- Press Win+R, type cmd, and press Enter to open a terminal.

- Before running nmap, find your computer's IP address. It tells you which subnet the network uses, so that nmap can scan the devices in that subnet.

ipconfig

- If your IP address is 192.168.1.5, the other devices on the network will be in the 192.168.1.0/24 subnet (192.168.1.0 to 192.168.1.255).

- In this example, the computer's address is 192.168.0.104, so run a ping scan (the -sn flag) over the whole 192.168.0.0/24 subnet. This may take a few seconds:

nmap -sn 192.168.0.0/24

- In this example, the Raspberry Pi's IP address is 192.168.0.105.
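The subnet to scan follows from your computer's address and netmask: keep the network bits and zero the rest. With the common 255.255.255.0 (/24) mask, 192.168.0.104 gives 192.168.0.0/24. The POSIX shell sketch below does this with integer arithmetic. network_cidr is a hypothetical helper, the default prefix length of 24 is an assumption matching the examples above, and it is meant for a Linux or macOS shell rather than the Windows cmd prompt used above.

```shell
# network_cidr IP [PREFIX]: print the network address of IP in CIDR form,
# e.g. 192.168.0.104 -> 192.168.0.0/24 (hypothetical helper)
network_cidr() {
    prefix=${2:-24}
    old_ifs=$IFS
    IFS=.
    set -- $1                    # split the dotted quad into $1..$4
    IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    # PREFIX one-bits followed by (32 - PREFIX) zero-bits, kept to 32 bits
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    net=$(( addr & mask ))
    echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))/$prefix"
}

# Example: nmap -sn "$(network_cidr 192.168.0.104)"
```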

Enable SSH and VNC

- Open a terminal on the Raspberry Pi, enter sudo raspi-config to open the settings interface, and select Interface Options.

- Go to the Interfaces tab.

- Select Enable next to SSH.

- Click OK.

- Restart the Raspberry Pi.

VNC is enabled the same way; follow the steps above.

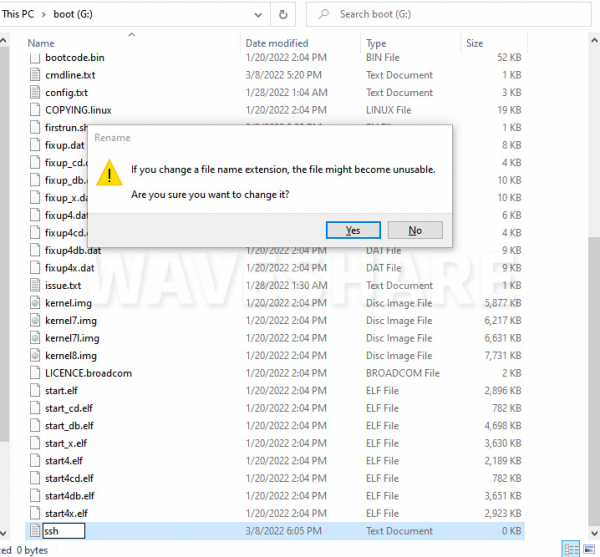

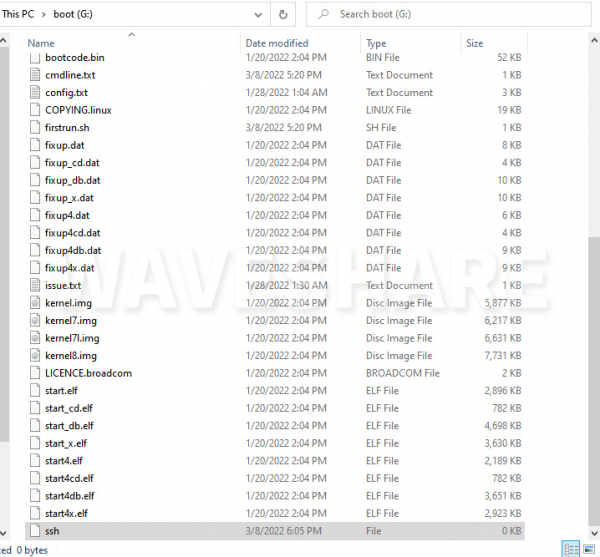

- Alternatively, create an empty file named ssh, without any extension, in the boot partition of the TF card. On Windows, you can create a file named ssh.txt, make sure File Explorer is set to show file extensions, and then rename it to remove the .txt extension. This leaves a file named ssh with no extension.

Remote Login

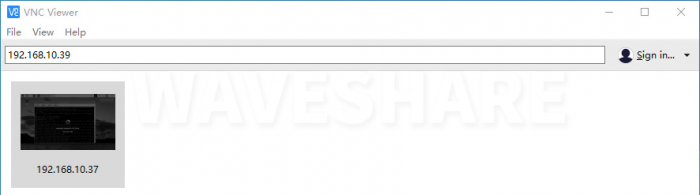

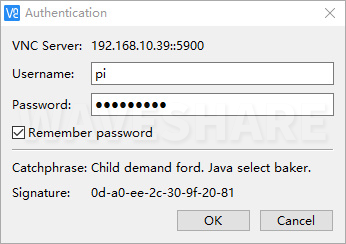

VNC Login

Make sure the Raspberry Pi and your computer are connected to the same router or subnet, and enable VNC as described above.

- Download and open the remote login software VNC Viewer.

- Open VNC Viewer and enter the Raspberry Pi's IP address:

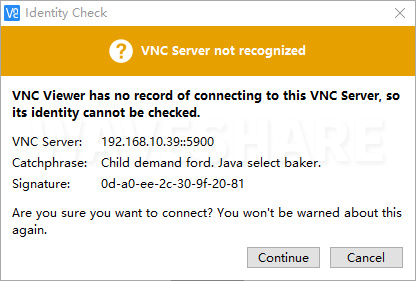

- Select Continue:

- Username: pi; Password: raspberry:

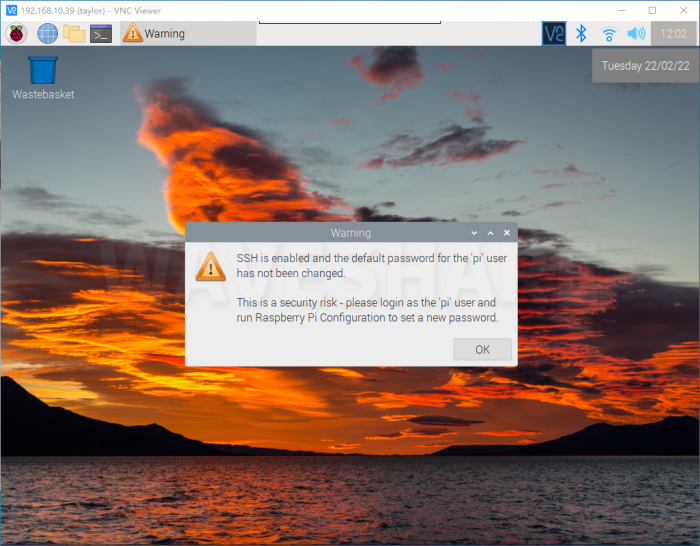

- Here the login is successful:

SSH Login

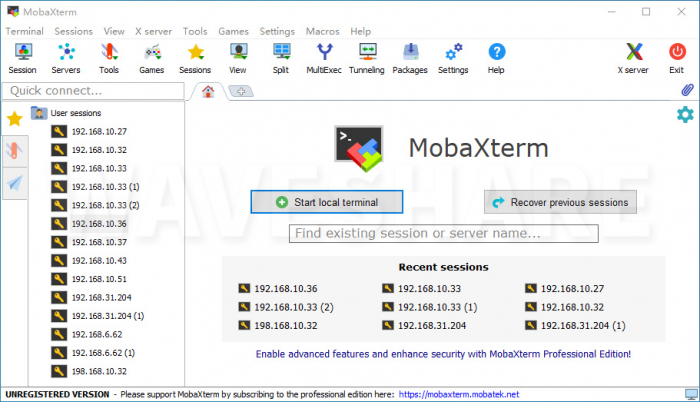

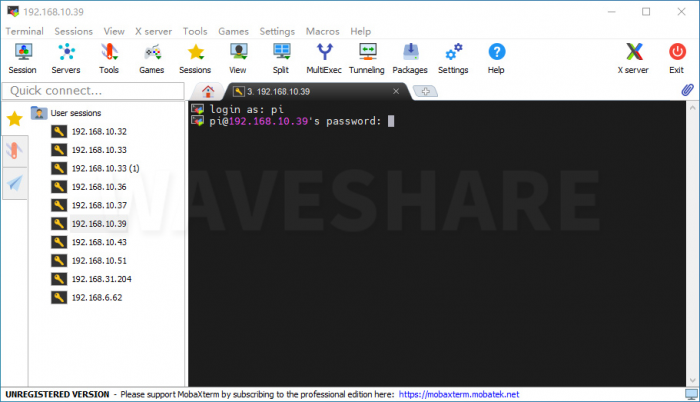

Use MobaXterm Software

Make sure the Raspberry Pi and your computer are connected to the same router or subnet, and enable SSH as described above.

- Open the remote login software MobaXterm:

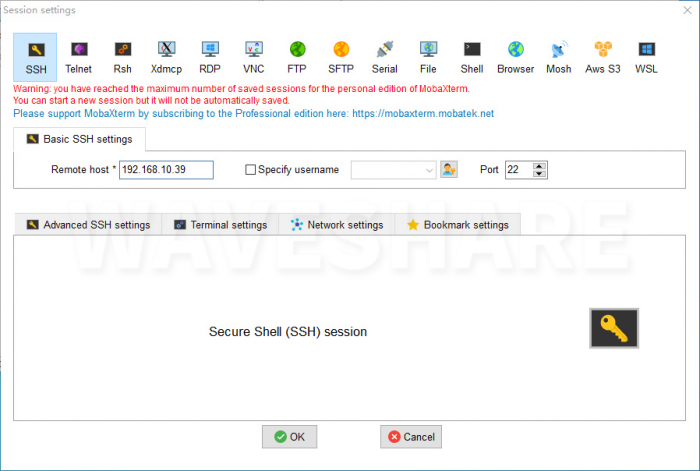

- Click Session, then SSH, and enter the Raspberry Pi's IP address in Remote host:

- The login name is pi and the password is raspberry:

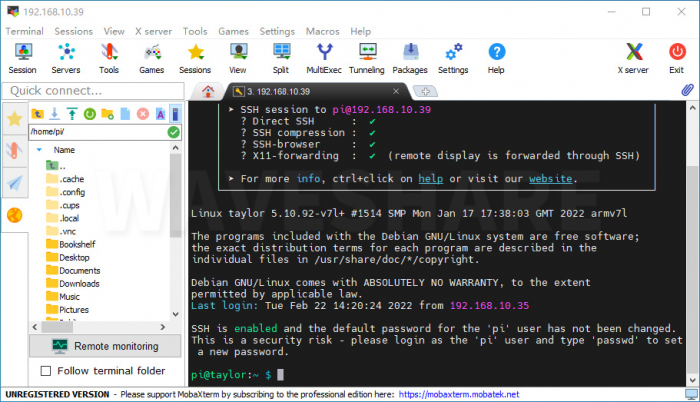

- Here the login is successful:

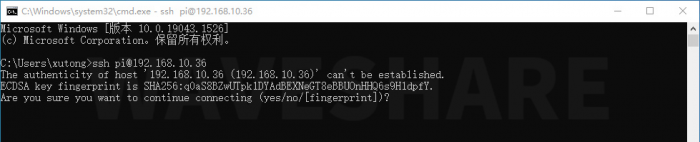

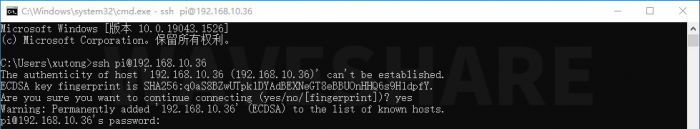

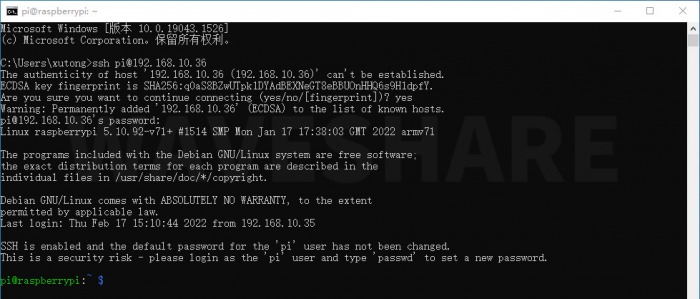

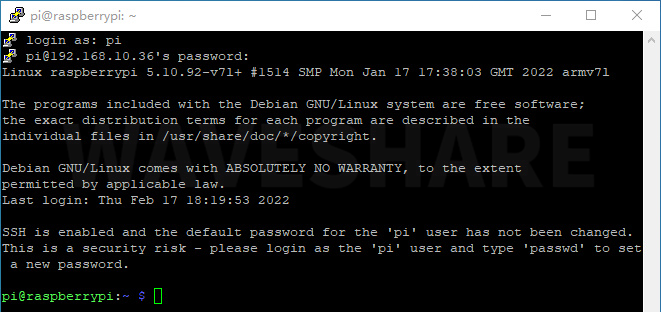

Use Windows SSH Login Command

- Press the Win key and R key at the same time, input cmd, then press Enter to open the command line

- Assuming the Raspberry Pi's IP address is 192.168.10.36, the command is:

ssh pi@192.168.10.36

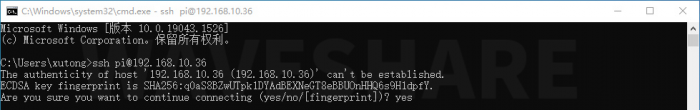

- Type yes to confirm:

- Enter the Raspberry Pi's default password, raspberry:

Note: Nothing is shown on screen while you type the password; this is normal. After typing the password, press Enter to log in to the Raspberry Pi:

- The SSH login is successful:

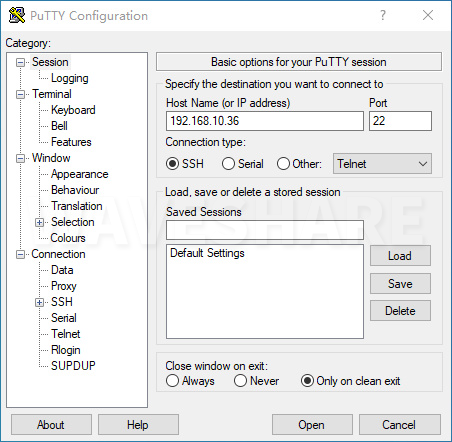

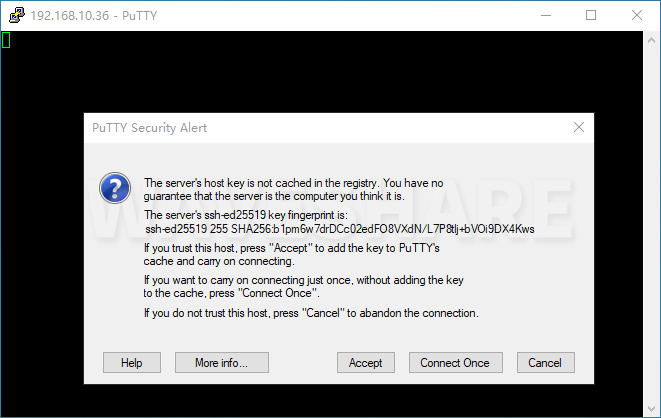

PUTTY Login

- Open PuTTY, click Session, and enter the Raspberry Pi's IP address in the Host Name (or IP address) field:

- Select Accept:

- The login name is pi and the password is raspberry:

- Here the login is successful:

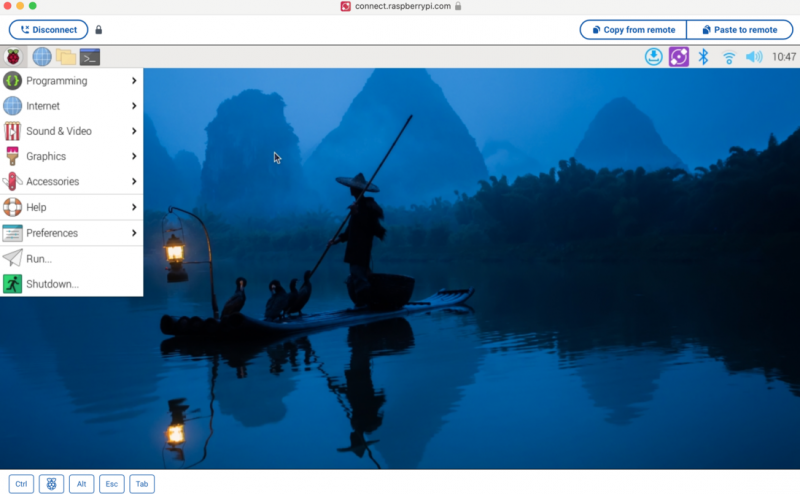

Raspberry Pi Connect Login

Screen sharing requires the Wayland window server. Raspberry Pi OS Bookworm and later use Wayland by default. Screen sharing is not compatible with Raspberry Pi OS Lite or with systems that use the X window server.

Install Connect

If Connect is not already installed on your Raspberry Pi OS, open a terminal window and run the following commands to update your system and software packages:

sudo apt update

sudo apt full-upgrade

Run the following command on Raspberry Pi to install Connect:

sudo apt install rpi-connect

Start Connect

Once installed, use the rpi-connect command line interface to start Connect for the current user:

rpi-connect on

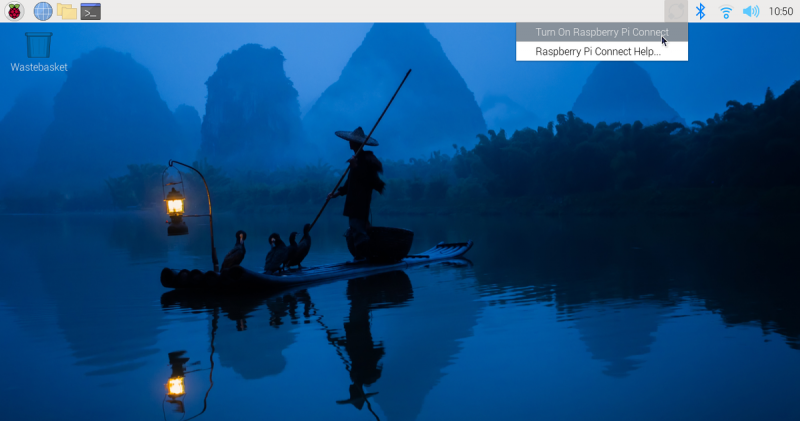

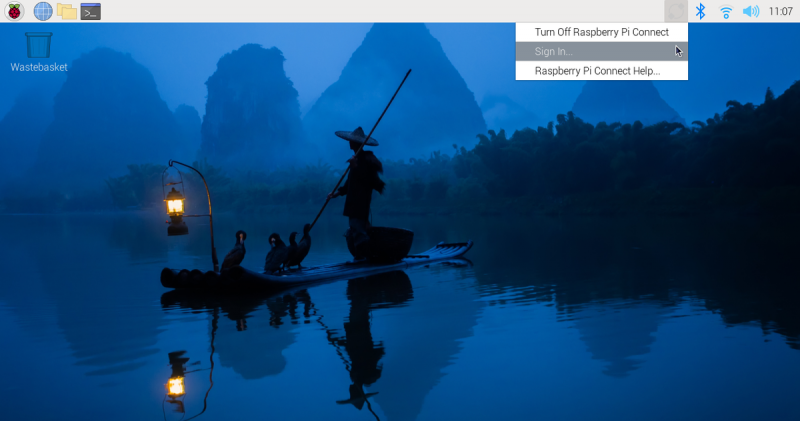

Alternatively, click the "Connect" icon in the menu bar to open the drop-down menu and select "Open Raspberry Pi Connection":

To stop Connect, run the following command:

rpi-connect off

Associate Raspberry Pi Device with Connect Account

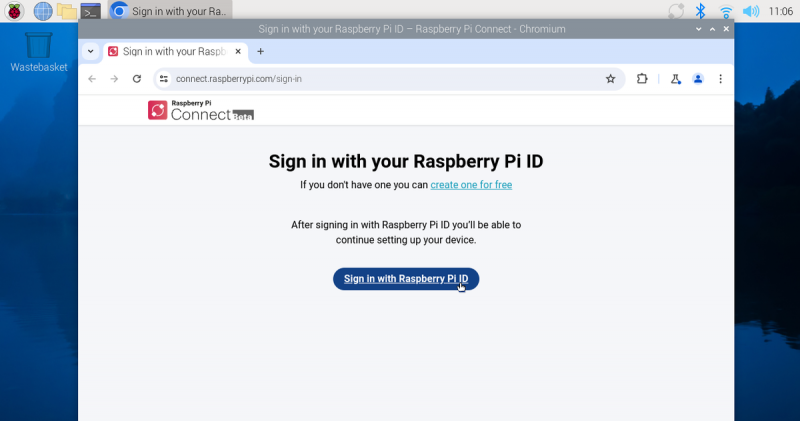

Now that you have installed and started Connect on your Raspberry Pi, you must associate the device with a Connect account.

If you use the Connect plugin in the menu bar, the first time you click "Open Raspberry Pi Connect" to open the browser, it will prompt you to log in using your Raspberry Pi ID:

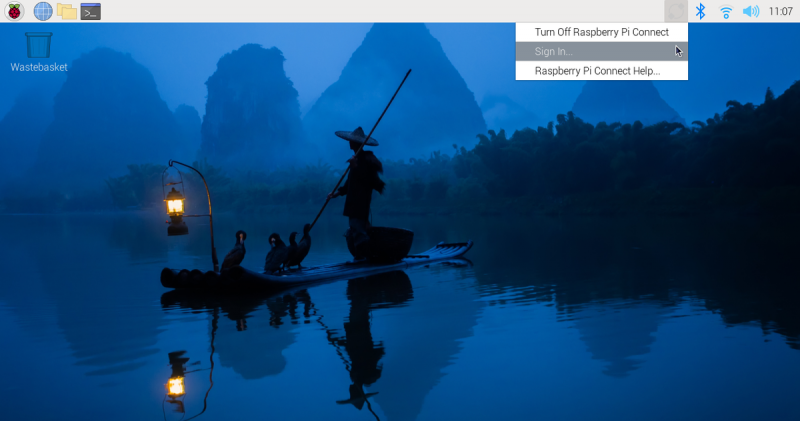

Or, select "Log in..." from the drop-down menu:

If you don't have a Raspberry Pi ID yet, please click here to create one for free.

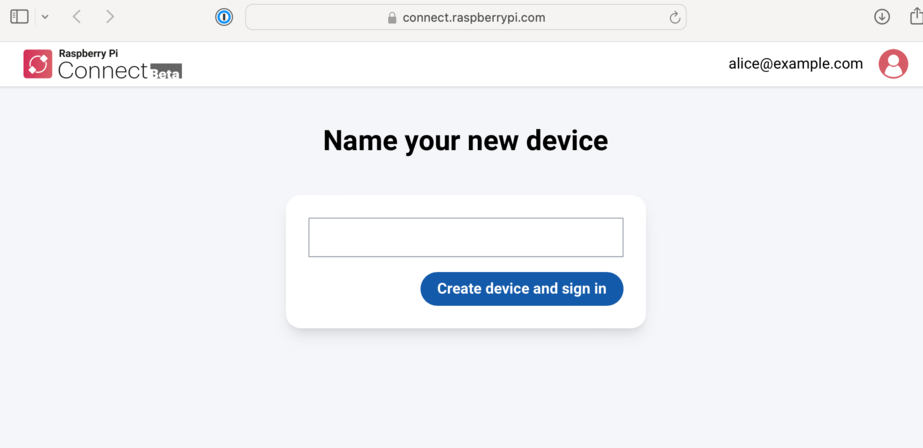

Complete the Connection of Raspberry Pi

After verification, assign a name to your device. Select a name that uniquely identifies the device. Click the "Create Device and Login" button to continue.

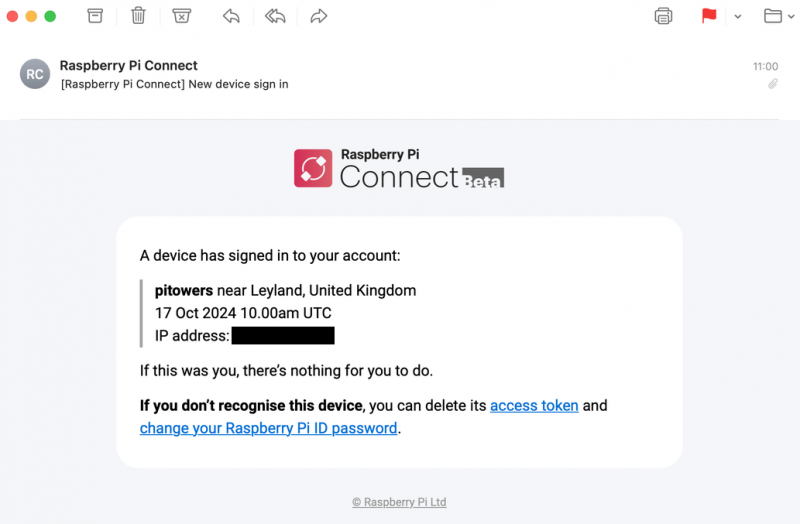

You can now connect to your device remotely. The Connect icon in the menu bar will turn blue to indicate that your device is now logged into the Connect service. You should receive an email notification indicating that a new device has been linked to your Connect account.

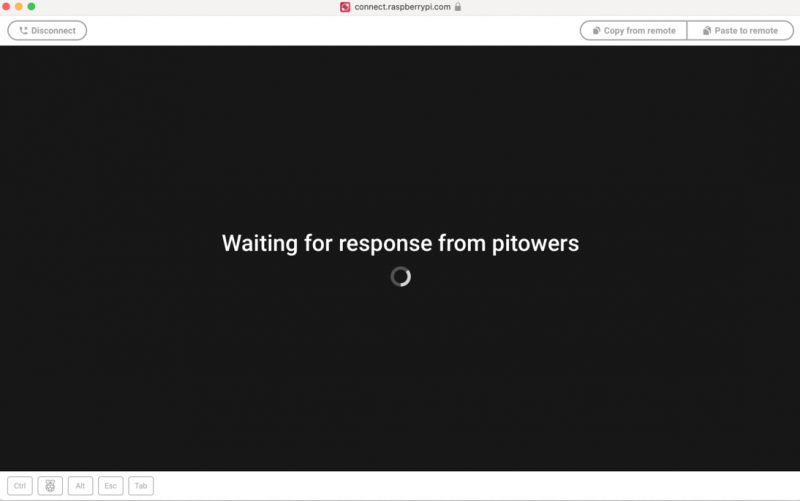

Access Your Raspberry Pi Device

Now your device is displayed on the Connect interface, and you can access your device from anywhere using a browser. Connect provides multiple ways to remotely interact with your devices.

- Access connect.raspberrypi.com on any computer.

- Connect redirects you to the Raspberry Pi ID service to log in. After logging in, Connect will display a list of connected devices. Devices that can be used for screen sharing will display a gray screen sharing badge below the device name.

- Click the "Connect via" button to the right of the device you would like to access. Select the "Screen sharing" option from the menu. This opens a browser window that displays your device's desktop.

- You can now use your device as you would locally. For more information about the connection, hover your mouse over the padlock icon immediately to the right of the "Disconnect" button.

For more information, refer to the introduction on the official Raspberry Pi website.

Use the Camera

In Raspberry Pi OS Bookworm, the camera applications were renamed from libcamera-* to rpicam-*. The old libcamera-* names are still supported for now but will be deprecated in the future, so migrate to rpicam-* as soon as possible to keep your setup compatible.

Note: The system shipped by default is Raspberry Pi OS Bookworm, so use rpicam-* to work with the camera. If you are using Raspberry Pi OS Bullseye, configure the camera with the libcamera-* tutorial linked below.

Rpicam

On the latest version of Raspberry Pi OS, rpicam-apps comes preinstalled with its five basic applications, and official Raspberry Pi cameras are detected and enabled automatically.

You can check that everything is working by running:

rpicam-hello

You should see a camera preview window for about five seconds.

On Bullseye, Raspberry Pi 3 and earlier devices need Glamor enabled for this example, which uses X Windows, to work: run sudo raspi-config in a terminal, select Advanced Options > Glamor > Yes, then exit and reboot. By default, Raspberry Pi 3 and earlier devices running Bullseye may not be using the correct display driver, so check /boot/config.txt and make sure that dtoverlay=vc4-fkms-v3d or dtoverlay=vc4-kms-v3d is active. If you change this setting, reboot.

To keep the preview window open indefinitely (press Ctrl+C to stop it), set the timeout to 0:

rpicam-hello -t 0

- Tuning file

libcamera on Raspberry Pi provides a tuning file for each type of camera module. The parameters in the file are passed to the algorithms and hardware to produce the best image quality. libcamera can only detect the image sensor in use, not the whole module, even though the whole module affects the tuning, so it is sometimes necessary to override the default tuning file for a particular sensor.

For example, the NoIR (no infrared filter) version of a sensor needs different AWB (auto white balance) settings from the standard version, so an IMX219 NoIR used with a Pi 4 or earlier device should be run as follows:

rpicam-hello --tuning-file /usr/share/libcamera/ipa/rpi/vc4/imx219_noir.json

Raspberry Pi 5 keeps its tuning files in a different folder, so there you would use:

rpicam-hello --tuning-file /usr/share/libcamera/ipa/rpi/pisp/imx219_noir.json

This also means that users can copy existing tuning files and modify them according to their preferences, as long as the parameter --tuning-file points to the new version.

The --tuning-file parameter is applicable to all rpicam-apps just like other command-line options.
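The only difference between the two tuning-file commands above is the folder: vc4 for Pi 4 and earlier, pisp for Pi 5. The sketch below picks the folder from the board's model string, which Raspberry Pi OS exposes in /proc/device-tree/model. Treating any model string that contains "Raspberry Pi 5" as a Pi 5 is an assumption of this sketch, and tuning_dir is a hypothetical helper name.

```shell
# tuning_dir MODEL: print the libcamera tuning-file folder for a board model
# string (hypothetical helper; Pi 5 -> pisp, everything else -> vc4)
tuning_dir() {
    case "$1" in
        *"Raspberry Pi 5"*) echo /usr/share/libcamera/ipa/rpi/pisp ;;
        *)                  echo /usr/share/libcamera/ipa/rpi/vc4 ;;
    esac
}

# Usage on the Raspberry Pi (the model file is NUL-terminated, so strip it):
# model=$(tr -d '\0' < /proc/device-tree/model)
# rpicam-hello --tuning-file "$(tuning_dir "$model")/imx219_noir.json"
```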

rpicam-jpeg

rpicam-jpeg is a simple static image capture application.

To capture a full-resolution JPEG image, use the following command. This will display a preview for approximately five seconds, then capture the full-resolution JPEG image to the file test.jpg.

rpicam-jpeg -o test.jpg

The -t <duration> option sets how long the preview is shown (in milliseconds), and the --width and --height options change the resolution of the captured still image. For example:

rpicam-jpeg -o test.jpg -t 2000 --width 640 --height 480

- Exposure control

All rpicam-apps let you run the camera with a fixed shutter speed and gain. The following command captures an image with an exposure time of 20ms (--shutter takes microseconds) and a gain of 1.5x. The gain is applied as analog gain inside the sensor up to the maximum analog gain allowed by the kernel sensor driver; any remainder is applied as digital gain.

rpicam-jpeg -o test.jpg -t 2000 --shutter 20000 --gain 1.5
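Because --shutter takes microseconds, the 20ms exposure above is --shutter 20000, and the 100-second exposure used later is --shutter 100000000. A small conversion helper avoids counting zeros. to_us and its suffix handling (us, ms, s, whole numbers only) are assumptions of this sketch, not rpicam options.

```shell
# to_us DURATION: convert "500us", "20ms" or "100s" to microseconds for
# --shutter; a bare number is assumed to be microseconds already.
# Integer values only (hypothetical helper).
to_us() {
    case "$1" in
        *us) echo "${1%us}" ;;
        *ms) echo $(( ${1%ms} * 1000 )) ;;
        *s)  echo $(( ${1%s} * 1000000 )) ;;
        *)   echo "$1" ;;
    esac
}

# Example: rpicam-jpeg -o test.jpg -t 2000 --shutter "$(to_us 20ms)" --gain 1.5
```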

The Raspberry Pi AEC/AGC algorithm lets applications apply exposure compensation, making images darker or brighter by a given number of stops:

rpicam-jpeg --ev -0.5 -o darker.jpg

rpicam-jpeg --ev 0 -o normal.jpg

rpicam-jpeg --ev 0.5 -o brighter.jpg
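In photographic terms each stop is a factor of two, so an offset of +0.5 stops corresponds to about 2^0.5 ≈ 1.41 times the exposure and -0.5 stops to about 0.71 times. The helper below computes that factor for the three commands above; it illustrates the stop arithmetic only and is not a description of how rpicam computes exposure internally. ev_factor is a hypothetical name.

```shell
# ev_factor EV: exposure multiplier for an offset of EV stops, 2^EV
# (hypothetical helper illustrating stop arithmetic)
ev_factor() {
    awk -v ev="$1" 'BEGIN { printf "%.2f\n", 2 ^ ev }'
}

ev_factor -0.5   # 0.71 (darker.jpg)
ev_factor 0      # 1.00 (normal.jpg)
ev_factor 0.5    # 1.41 (brighter.jpg)
```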

- Digital gain

The digital gain is applied by the ISP, not by the sensor. The digital gain will always be very close to 1.0 unless:

- The total gain requested (via the --gain option or via the exposure profile in the camera tuning) exceeds the gain that can be applied as analog gain within the sensor. Only the extra gain required is applied as digital gain.

- One of the color gains is less than 1 (note that the color gains are also applied as digital gain). In this case, the reported digital gain settles at 1 / min(red gain, blue gain). This means that one of the color channels (not the green one) has unity digital gain applied.

- AEC/AGC is changing. When AEC/AGC moves, the digital gain typically changes to some extent in an attempt to eliminate any fluctuations, but it quickly returns to its normal value.
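The color-gain case above is a one-line formula: when the smaller of the red and blue gains drops below 1, the reported digital gain settles at 1 / min(red gain, blue gain). The sketch below evaluates only that case and otherwise returns 1.0; it ignores the other two conditions (excess requested gain and AEC/AGC transients), and digital_gain is a hypothetical helper.

```shell
# digital_gain RED_GAIN BLUE_GAIN: digital gain implied by the color gains
# alone, i.e. 1 / min(red, blue) when that minimum is below 1, else 1.0
# (hypothetical helper)
digital_gain() {
    awk -v r="$1" -v b="$2" 'BEGIN {
        m = (r < b) ? r : b
        printf "%.2f\n", (m < 1) ? 1 / m : 1
    }'
}

digital_gain 0.8 1.5   # 1.25
digital_gain 1.2 1.6   # 1.00
```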

rpicam-still

rpicam-still emulates many features of the original raspistill application.

rpicam-still -o test.jpg

- Encoder

rpicam-still can save files in several formats. Besides JPEG, it supports PNG and BMP encoding. It can also save a binary dump of RGB or YUV pixels with no encoding or file format; in that case, the application reading the file must know the pixel layout itself.

rpicam-still -e png -o test.png

rpicam-still -e bmp -o test.bmp

rpicam-still -e rgb -o test.data

rpicam-still -e yuv420 -o test.data

Note: The format in which the image is saved depends on the -e option (equivalent to --encoding) and is not selected automatically from the output file name.
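Since rpicam-still takes the format from -e rather than from the file name, a mismatched extension produces, for example, a JPEG file named test.png. The sketch below derives the -e value from the extension for the formats listed above and refuses ambiguous cases such as .data (which could be rgb or yuv420). encoding_for is a hypothetical helper, and using jpg as the JPEG encoding name is an assumption.

```shell
# encoding_for FILE: print the rpicam-still -e value matching FILE's
# extension (hypothetical helper; .data is ambiguous, so it is rejected)
encoding_for() {
    case "${1##*.}" in
        jpg|jpeg) echo jpg ;;
        png)      echo png ;;
        bmp)      echo bmp ;;
        *) echo "cannot infer -e from '$1'; pass it explicitly" >&2; return 1 ;;
    esac
}

# Example:
# out=test.png
# rpicam-still -e "$(encoding_for "$out")" -o "$out"
```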

- Raw image capture

A raw image is produced directly by the image sensor, before any processing by the ISP (Image Signal Processor) or any CPU core. For color image sensors, these are usually Bayer-format images. Note that raw images are very different from the processed but unencoded RGB or YUV images shown earlier.

Get the raw image:

rpicam-still --raw --output test.jpg

Here, the -r option (long form --raw) captures both the raw image and the JPEG; the raw image is the one from which the JPEG was produced. Raw images are saved in DNG (Adobe Digital Negative) format, which many standard applications such as dcraw or RawTherapee can open. The raw image is saved to a file with the same name but the extension .dng, so here it becomes test.dng.

These DNG files contain metadata about the capture, including the black level, white balance information, and the color matrix the ISP used to produce the JPEG. This makes them convenient for later "by hand" raw conversion with tools such as those above. Use exiftool to display all the metadata encoded in a DNG file:

exiftool test.dng

File Name : test.dng
Directory : .
File Size : 24 MB
File Modification Date/Time : 2021:08:17 16:36:18+01:00
File Access Date/Time : 2021:08:17 16:36:18+01:00
File Inode Change Date/Time : 2021:08:17 16:36:18+01:00
File Permissions : rw-r--r--
File Type : DNG
File Type Extension : dng
MIME Type : image/x-adobe-dng
Exif Byte Order : Little-endian (Intel, II)
Make : Raspberry Pi
Camera Model Name : /base/soc/i2c0mux/i2c@1/imx477@1a
Orientation : Horizontal (normal)
Software : rpicam-still
Subfile Type : Full-resolution Image
Image Width : 4056
Image Height : 3040
Bits Per Sample : 16
Compression : Uncompressed
Photometric Interpretation : Color Filter Array
Samples Per Pixel : 1
Planar Configuration : Chunky
CFA Repeat Pattern Dim : 2 2
CFA Pattern 2 : 2 1 1 0
Black Level Repeat Dim : 2 2
Black Level : 256 256 256 256
White Level : 4095
DNG Version : 1.1.0.0
DNG Backward Version : 1.0.0.0
Unique Camera Model : /base/soc/i2c0mux/i2c@1/imx477@1a
Color Matrix 1 : 0.8545269369 -0.2382823821 -0.09044229197 -0.1890484985 1.063961506 0.1062747385 -0.01334283455 0.1440163847 0.2593136724
As Shot Neutral : 0.4754476844 1 0.413686484
Calibration Illuminant 1 : D65
Strip Offsets : 0
Strip Byte Counts : 0
Exposure Time : 1/20
ISO : 400
CFA Pattern : [Blue,Green][Green,Red]
Image Size : 4056x3040
Megapixels : 12.3
Shutter Speed : 1/20

Note that there is only one calibration illuminant (determined by the AWB algorithm, even though it is always labeled "D65"), and that dividing the ISO number by 100 gives the analog gain in use.
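Applied to the listing above, ISO 400 means an analog gain of 4. The sketch below pulls the ISO line out of exiftool output and divides it by 100; it assumes exiftool's default "Tag Name : value" layout, and analog_gain is a hypothetical helper.

```shell
# analog_gain: read exiftool output on stdin and print ISO / 100, the analog
# gain used for the capture (hypothetical helper)
analog_gain() {
    # Split on the " : " separator; exiftool pads tag names with spaces
    awk -F' *: *' '$1 == "ISO" { printf "%.2f\n", $2 / 100 }'
}

# Example: exiftool test.dng | analog_gain
```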

- Ultra-long exposure

In order to capture long-exposure images, disable AEC/AGC and AWB, as these algorithms will force the user to wait many frames while converging.

The way to disable them is to provide explicit values. In addition, the --immediate option skips the preview phase entirely.

Therefore, to capture a 100-second exposure, use:

rpicam-still -o long_exposure.jpg --shutter 100000000 --gain 1 --awbgains 1,1 --immediate

For reference, the maximum exposure times of the three official Raspberry Pi cameras can be found in this table.

rpicam-vid

rpicam-vid captures video on the Raspberry Pi. It displays a preview window and writes the encoded bitstream to the specified output. This produces a raw video bitstream that is not wrapped in any container format (such as an MP4 file).

- By default, rpicam-vid uses H.264 encoding

For example, the following command writes a 10-second video to a file named test.h264:

rpicam-vid -t 10s -o test.h264

You can play the resulting file with VLC or other video players:

vlc test.h264

On the Raspberry Pi 5, you can output directly to the MP4 container format by specifying the MP4 file extension of the output file:

rpicam-vid -t 10s -o test.mp4

- Encoder

rpicam-vid also supports motion JPEG, as well as uncompressed and unformatted YUV420:

rpicam-vid -t 10000 --codec mjpeg -o test.mjpeg

rpicam-vid -t 10000 --codec yuv420 -o test.data

The codec option determines the output format, not the extension of the output file.

The --segment option splits the output into chunks of the given length, in milliseconds. Very short segments (1 millisecond) make it easy to break a motion JPEG stream into individual JPEG files. For example, the following command combines 1-millisecond segments with a counter in the output filename, so each segment gets a new file:

rpicam-vid -t 10000 --codec mjpeg --segment 1 -o test%05d.jpeg
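After splitting, you often need the frames back in capture order. The sketch below collects files named like test00000.jpeg and sorts them by their numeric counter rather than alphabetically, so ordering stays correct if the counter grows beyond five digits. The function name is hypothetical. It makes no assumption about which number rpicam-vid starts counting from.

```python
import re
from pathlib import Path


def sorted_segments(directory: str, prefix: str = "test", suffix: str = ".jpeg") -> list:
    """Return segment files such as test00000.jpeg ordered by their numeric counter."""
    pattern = re.compile(rf"^{re.escape(prefix)}(\d+){re.escape(suffix)}$")
    numbered = []
    for path in Path(directory).iterdir():
        match = pattern.match(path.name)
        if match:
            numbered.append((int(match.group(1)), path))
    # Numeric sort keeps test100000.jpeg after test99999.jpeg
    return [path for _, path in sorted(numbered)]


# The %05d directive zero-pads the counter to five digits
print("test%05d.jpeg" % 7)  # test00007.jpeg
```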

- Capture high frame rate video

To minimize frame loss for high frame rate (> 60fps) video, try the following configuration adjustments:

- Set the H.264 target level to 4.2 with --level 4.2.

- Disable software color denoise processing by setting the denoise option to cdn_off.

- Disable the preview window with --nopreview to free up additional CPU cycles.

- Set force_turbo=1 in /boot/firmware/config.txt to ensure that the CPU clock is not throttled during video capture. For more information, see the force_turbo documentation.

- Reduce the ISP output resolution to --width 1280 --height 720 or lower to reach the frame rate target.

- On the Raspberry Pi 4, you can overclock the GPU to improve performance by adding gpu_freq=550 or higher to /boot/firmware/config.txt. For details, see the overclocking documentation.

The following command records 1280×720 video at 120 fps:

rpicam-vid --level 4.2 --framerate 120 --width 1280 --height 720 --save-pts timestamp.pts -o video.264 -t 10000 --denoise cdn_off -n
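The --save-pts file in the command above can be used to check whether frames were dropped. The sketch below assumes the file holds one timestamp in milliseconds per line, with header lines starting with # (such as "# timecode format v2"). The function name and the 1.5× gap tolerance are illustrative choices, not rpicam-vid behaviour.

```python
def analyse_pts(pts_text: str, target_fps: float, tolerance: float = 1.5):
    """Return (measured_fps, indices of frames that follow a suspiciously long gap)."""
    stamps = [float(line) for line in pts_text.splitlines()
              if line.strip() and not line.lstrip().startswith("#")]
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    nominal_ms = 1000.0 / target_fps
    # A gap much longer than one frame period suggests at least one dropped frame
    gaps = [i for i in range(1, len(stamps))
            if stamps[i] - stamps[i - 1] > tolerance * nominal_ms]
    measured_fps = (len(stamps) - 1) * 1000.0 / (stamps[-1] - stamps[0])
    return measured_fps, gaps


sample = "# timecode format v2\n0.000\n8.333\n16.667\n33.333\n41.667\n"
fps, gaps = analyse_pts(sample, target_fps=120)
print(f"{fps:.1f} fps, long gaps before frames {gaps}")
```

With a real recording, pass the contents of timestamp.pts and the frame rate you requested.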

- Integration of libav with rpicam-vid

rpicam-vid can encode audio and video streams using the ffmpeg/libav codec backend. You can save these streams to a file or stream them over the network.

To enable the libav backend, pass libav to the codec option:

rpicam-vid --codec libav --libav-format avi --libav-audio --output example.avi

- UDP

To use a Raspberry Pi as a server for streaming video over UDP, use the following command. Replace the <ip-addr> placeholder with the IP address of the client or a multicast address, and the <port> placeholder with the port you want to use for streaming:

rpicam-vid -t 0 --inline -o udp://<ip-addr>:<port>

To view the UDP stream on a client (for example, another Raspberry Pi), use the following command, replacing the <port> placeholder with the port you are streaming to:

vlc udp://@:<port> :demux=h264

Alternatively, use ffplay on the client to view the stream:

ffplay udp://<ip-addr-of-server>:<port> -fflags nobuffer -flags low_delay -framedrop

- TCP

Video can also be transmitted over TCP. To use a Raspberry Pi as the server:

rpicam-vid -t 0 --inline --listen -o tcp://0.0.0.0:<port>

To view the TCP stream on a client (for example, another Raspberry Pi), use the following command:

vlc tcp/h264://<ip-addr-of-server>:<port>

Alternatively, use ffplay on the client to play the stream at 30 frames per second:

ffplay tcp://<ip-addr-of-server>:<port> -vf "setpts=N/30" -fflags nobuffer -flags low_delay -framedrop
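A client can also record the TCP stream instead of playing it. The sketch below uses only Python's standard socket module, and the function name is hypothetical. It writes no container, so the file is a bare H.264 bitstream like test.h264 earlier. It stops when the server closes the connection or after an optional byte limit.

```python
import socket
from typing import Optional


def save_tcp_stream(host: str, port: int, path: str,
                    max_bytes: Optional[int] = None) -> int:
    """Copy the bitstream served by rpicam-vid --listen into a file.

    Returns the number of bytes written.
    """
    written = 0
    with socket.create_connection((host, port)) as sock, open(path, "wb") as out:
        while max_bytes is None or written < max_bytes:
            chunk = sock.recv(65536)
            if not chunk:  # empty read: the server closed the connection
                break
            if max_bytes is not None:
                chunk = chunk[: max_bytes - written]  # do not exceed the limit
            out.write(chunk)
            written += len(chunk)
    return written
```

For example, call `save_tcp_stream("<ip-addr-of-server>", <port>, "capture.h264", max_bytes=50_000_000)` with the server's address and port filled in. Then play capture.h264 with VLC as shown earlier.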

- RTSP

To stream video over RTSP with VLC, using the Raspberry Pi as the server, use the following command:

rpicam-vid -t 0 --inline -o - | cvlc stream:///dev/stdin --sout '#rtp{sdp=rtsp://:8554/stream1}' :demux=h264

To view the RTSP stream on a client (for example, another Raspberry Pi), use the following command:

ffplay rtsp://<ip-addr-of-server>:8554/stream1 -vf "setpts=N/30" -fflags nobuffer -flags low_delay -framedrop

Or, on the client, use VLC to view the stream:

vlc rtsp://<ip-addr-of-server>:8554/stream1

To disable the preview window on the server, use the --nopreview option.

Use the --inline flag to insert the stream header information into every intra (I) frame. This helps clients decode the stream even if they miss its beginning.

rpicam-raw

rpicam-raw records video directly from the sensor as raw Bayer frames. It does not show a preview window. To record a two-second raw clip to a file called test.raw, run the following command:

rpicam-raw -t 2000 -o test.raw

rpicam-raw outputs raw frames without any format information. The application prints the pixel format and image size to the terminal window to help you parse the pixel data.

By default, rpicam-raw outputs raw frames in a single, potentially very large file. Use the segment option to direct each raw frame to a separate file, using the %05d directive to make each frame filename unique:

rpicam-raw -t 2000 --segment 1 -o test%05d.raw

On a fast storage device, rpicam-raw can write 18MB frames from the 12-megapixel HQ camera to disk at 10fps. rpicam-raw cannot format output frames as DNG files; for that, use rpicam-still. To avoid dropping frames, use the --framerate option to set a frame rate below 10:

rpicam-raw -t 5000 --width 4056 --height 3040 -o test.raw --framerate 8
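The 18MB figure follows from the sensor size and bit depth. The sketch below shows the arithmetic, assuming the packed 12-bit format (1.5 bytes per pixel) and no row padding. rpicam-raw prints the actual stride, which may be slightly larger. The function name is hypothetical.

```python
def packed_frame_bytes(width: int, height: int, bit_depth: int) -> int:
    """Bytes in one packed raw frame, ignoring any row padding (stride alignment)."""
    total_bits = width * height * bit_depth
    if total_bits % 8:
        raise ValueError("frame does not end on a byte boundary")
    return total_bits // 8


frame = packed_frame_bytes(4056, 3040, 12)  # HQ camera, 12-bit packed
rate_mb_s = frame * 10 / 1e6                # sustained write rate at 10 fps
print(f"{frame / 1e6:.1f} MB per frame, {rate_mb_s:.0f} MB/s at 10 fps")
```

This required write rate of roughly 185 MB/s is why a fast storage device matters. A lower --framerate reduces it proportionally.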

For more information on raw formats, see the mode documentation.

rpicam-detect

Note: Raspberry Pi OS does not include rpicam-detect. If you have TensorFlow Lite installed, you can build rpicam-detect yourself; for more information, see the instructions on building rpicam-apps. Don't forget to pass -DENABLE_TFLITE=1 when running cmake.

rpicam-detect displays a preview window and monitors the content using a Google MobileNet v1 SSD (Single Shot Detector) neural network. The network was trained on the COCO dataset to recognize about 80 classes of objects, including people, cars and cats.

Whenever rpicam-detect detects a target object, it captures a full-resolution JPEG and then returns to monitoring in preview mode.

For general information about model usage, please refer to the TensorFlow Lite Object Detector section. For example, when you are out, you can keep an eye on your cat:

rpicam-detect -t 0 -o cat%04d.jpg --lores-width 400 --lores-height 300 --post-process-file object_detect_tf.json --object cat

rpicam Parameter Settings

- --help -h prints all the options, along with a brief description of each option

rpicam-hello -h

- --version outputs the version strings for libcamera and rpicam-apps

rpicam-hello --version

Example output:

rpicam-apps build: ca559f46a97a 27-09-2021 (14:10:24) libcamera build: v0.0.0+3058-c29143f7

- --list-cameras lists the cameras connected to the Raspberry Pi and their available sensor modes

rpicam-hello --list-cameras

The identifier for the sensor mode has the following form:

S<Bayer order><Bit-depth>_<Optional packing> : <Resolution list>

Cropping is specified in native sensor pixels (even in pixel-binning mode) as (<x>, <y>)/<Width>×<Height>, where (x, y) is the position of the width × height crop window in the sensor array.
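If you want to pick a sensor mode in a script, you can parse the mode entries printed by --list-cameras, such as 1332x990 [120.05 fps - (696, 528)/2664x1980 crop]. The regular expression below is written against that output format and may need adjusting for other rpicam-apps versions. The function name is hypothetical. The scale field is only a hint: a crop twice the output width suggests a 2×2 binned or downscaled mode.

```python
import re

MODE_ENTRY = re.compile(
    r"(?P<w>\d+)x(?P<h>\d+)\s*\[(?P<fps>[\d.]+) fps - "
    r"\((?P<x>\d+),\s*(?P<y>\d+)\)/(?P<cw>\d+)x(?P<ch>\d+) crop\]"
)


def parse_mode_entry(line: str) -> dict:
    """Extract output size, frame rate and sensor crop from one mode entry."""
    m = MODE_ENTRY.search(line)
    if m is None:
        raise ValueError(f"not a sensor mode entry: {line!r}")
    size = (int(m["w"]), int(m["h"]))
    crop = (int(m["x"]), int(m["y"]), int(m["cw"]), int(m["ch"]))
    return {
        "size": size,
        "fps": float(m["fps"]),
        "crop": crop,                 # (x, y, width, height) in native sensor pixels
        "scale": crop[2] / size[0],   # crop width relative to output width
    }


print(parse_mode_entry("'SRGGB10_CSI2P' : 1332x990 [120.05 fps - (696, 528)/2664x1980 crop]"))
```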

For example, the following output shows information for an IMX219 sensor with index 0 and an IMX477 sensor with index 1:

Available cameras
-----------------
0 : imx219 [3280x2464] (/base/soc/i2c0mux/i2c@1/imx219@10)
    Modes: 'SRGGB10_CSI2P' : 640x480 [206.65 fps - (1000, 752)/1280x960 crop]
                             1640x1232 [41.85 fps - (0, 0)/3280x2464 crop]
                             1920x1080 [47.57 fps - (680, 692)/1920x1080 crop]
                             3280x2464 [21.19 fps - (0, 0)/3280x2464 crop]
           'SRGGB8' : 640x480 [206.65 fps - (1000, 752)/1280x960 crop]
                      1640x1232 [41.85 fps - (0, 0)/3280x2464 crop]
                      1920x1080 [47.57 fps - (680, 692)/1920x1080 crop]
                      3280x2464 [21.19 fps - (0, 0)/3280x2464 crop]

1 : imx477 [4056x3040] (/base/soc/i2c0mux/i2c@1/imx477@1a)
    Modes: 'SRGGB10_CSI2P' : 1332x990 [120.05 fps - (696, 528)/2664x1980 crop]
           'SRGGB12_CSI2P' : 2028x1080 [50.03 fps - (0, 440)/4056x2160 crop]
                             2028x1520 [40.01 fps - (0, 0)/4056x3040 crop]
                             4056x3040 [10.00 fps - (0, 0)/4056x3040 crop]

- --camera selects the camera you want to use. Specify an index from the list of available cameras.

rpicam-hello --camera 0

rpicam-hello --camera 1

- --config -c specifies a file that contains command-line options and their values. For example, a file named example_configuration.txt might specify options and values as key-value pairs, one option per line:

timeout=99000
verbose=

Note: Omit the -- prefix that you would normally use on the command line. For flags that take no value, such as verbose in the example above, you must include a trailing =.

You can then run the following command to specify a timeout of 99,000 milliseconds and verbose output:

rpicam-hello --config example_configuration.txt
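The following sketch shows how a configuration file maps onto ordinary options. The hypothetical helper converts each key=value line into the equivalent command-line arguments. It only illustrates the correspondence described above. rpicam-apps parses the file itself, and details such as comment handling are not modelled.

```python
import shlex


def config_to_args(text: str) -> list:
    """Turn key=value lines from a --config file into command-line arguments."""
    args = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"missing '=' in line: {raw!r}")
        args.append("--" + key.strip())
        if value.strip():  # flags such as "verbose=" carry no value
            args.append(value.strip())
    return args


config = "timeout=99000\nverbose=\n"
print(shlex.join(["rpicam-hello", *config_to_args(config)]))
```

For example_configuration.txt, this prints an equivalent command line with --timeout 99000 and --verbose.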

- --timeout -t, default: 5000 milliseconds

rpicam-hello -t 5000

Specifies how long the application runs before shutting down. This applies to both video recording and the preview window. When capturing a still image, the application shows a preview window for timeout milliseconds before capturing and outputting the image. To keep running indefinitely, pass 0:

rpicam-hello -t 0

- --preview sets the position (x, y coordinates) and size (w, h dimensions) of the desktop or DRM preview window. It does not affect the resolution or aspect ratio of images requested from the camera.

Pass the preview window dimensions in the following comma-separated form: x,y,w,h

rpicam-hello --preview 100,100,500,500

- --fullscreen -f forces the preview window to use the entire screen, with no border or title bar, and scales the image to fit. Does not accept a value.

rpicam-hello -f

- --qt-preview uses the Qt preview window. It consumes more resources than the other options but supports X window forwarding. Not compatible with the fullscreen flag. Does not accept a value.

rpicam-hello --qt-preview

- --nopreview stops the application from displaying a preview window. Does not accept a value.

rpicam-hello --nopreview

- --info-text

Default values: "#%frame (%fps fps) exp %exp ag %ag dg %dg"

When running in a desktop environment, sets the supplied string as the preview window title. Supports the following image metadata substitutions:

| Directive | Description |
|---|---|
| %frame | Frame sequence number |
| %fps | Instantaneous frame rate |
| %exp | Shutter speed used to capture the image, in ms |
| %ag | Analog gain applied to the image by the sensor |
| %dg | Digital gain applied to the image by the ISP |
| %rg | Gain applied to the red component of each pixel |
| %bg | Gain applied to the blue component of each pixel |
| %focus | Focus measure of the image; a larger value means a sharper image |
| %lp | Current lens position in dioptres (1 / distance in metres) |
| %afstate | Autofocus state (idle, scanning, focused, failed) |

rpicam-hello --info-text "Focus measure: %focus"

- --width

- --height

Each option accepts a number that defines a dimension, in pixels, of the captured image.

For rpicam-still, rpicam-jpeg and rpicam-vid, specifies the output resolution.

For rpicam-raw, specifies the raw frame resolution. For cameras with a 2×2 binned readout mode, specify a resolution equal to or smaller than the binned mode to capture 2×2 binned raw frames.

For rpicam-hello, has no effect.

Record a 1080p video

rpicam-vid -o test.h264 --width 1920 --height 1080

Capture a JPEG at a resolution of 2028×1520. With the HQ camera this uses the 2×2 binned mode, so the raw file (test.dng) contains a 2028×1520 raw Bayer image.

rpicam-still -r -o test.jpg --width 2028 --height 1520

- --viewfinder-width

- --viewfinder-height

Each parameter accepts a number that defines the size of the image displayed in the preview window in pixels. The size of the preview window is not affected, as the image is resized to fit. Captured still images or videos are not affected.

rpicam-still --viewfinder-width 1920 --viewfinder-height 1080

- --mode specifies the camera mode in the following colon-separated format: <width>:<height>:<bit-depth>:<packing>. If the provided values do not exactly match an available mode, the system selects the closest available option for the sensor. Packing can be packed (P) or unpacked (U). It affects the format of stored video and still images, but not the format of frames passed to the preview window.

Bit depth and packing are optional. By default, the bit depth is 12 and packing is P (packed).

For the bit depths, resolutions and packing options available for your sensor, see --list-cameras.

For example:

- 4056:3040:12:P - 4056×3040 resolution, 12 bits/pixel, packed.

- 1632:1224:10 - 1632×1224 resolution, 10 bits/pixel.

- 2592:1944:10:U - 2592×1944 resolution, 10 bits/pixel, unpacked.

- 3264:2448 - 3264×2448 resolution.
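The small parser below makes the stated defaults concrete. This hypothetical helper only mirrors the documented format and defaults. It does not choose the closest sensor mode, which rpicam-apps does itself.

```python
from typing import NamedTuple


class ModeSpec(NamedTuple):
    width: int
    height: int
    bit_depth: int
    packed: bool


def parse_mode_spec(spec: str) -> ModeSpec:
    """Parse a --mode value such as '4056:3040:12:P', applying the stated defaults."""
    parts = spec.split(":")
    if not 2 <= len(parts) <= 4:
        raise ValueError(f"expected <width>:<height>[:<bit-depth>[:<packing>]], got {spec!r}")
    width, height = int(parts[0]), int(parts[1])
    bit_depth = int(parts[2]) if len(parts) >= 3 else 12    # default bit depth: 12
    packing = parts[3].upper() if len(parts) == 4 else "P"  # default packing: P
    if packing not in ("P", "U"):
        raise ValueError(f"packing must be P or U, got {packing!r}")
    return ModeSpec(width, height, bit_depth, packing == "P")


for spec in ("4056:3040:12:P", "1632:1224:10", "2592:1944:10:U", "3264:2448"):
    print(spec, "->", parse_mode_spec(spec))
```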

- --viewfinder-mode is the same as the mode option, but it applies to the data passed to the preview window. For more information, see mode documentation.

- --lores-width and --lores-height

Provides a second, low-resolution image stream from the camera, scaled down to the specified size. Each option accepts a number defining one dimension of the low-resolution stream, in pixels. Available for preview and video modes, but not for still capture. For rpicam-vid, using this option disables extra color denoise processing. Useful for image analysis combined with image post-processing.

rpicam-hello --lores-width 224 --lores-height 224

- --hflip flips the image horizontally. Values are not accepted.

rpicam-hello --hflip -t 0

- --vflip flips the image vertically. Values are not accepted.

rpicam-hello --vflip -t 0

- --rotation rotates the image extracted from the sensor. Only values of 0 or 180 are accepted.

rpicam-hello --rotation 0

- --roi crops the image extracted from the entire sensor domain. Accepts four decimal values, ranged 0 to 1, in the following format: <x>,<y>,<w>,<h>. Each of these values represents the percentage of available width and height as a decimal between 0 and 1.

These values define the following proportions:

<x>: X coordinates to skip before extracting an image

<y>: Y coordinates to skip before extracting an image

<w>: image width to extract

<h>: image height to extract

The default is 0,0,1,1 (starting with the first X coordinate and the first Y coordinate, using 100% of the image width and 100% of the image height).

Examples:

rpicam-hello --roi 0.25,0.25,0.5,0.5

This selects a region half the width and half the height of the image, cropped from the center (a quarter of the total number of pixels). It skips the first 25% of the X coordinates and the first 25% of the Y coordinates, and uses 50% of the image width and 50% of the image height.

rpicam-hello --roi 0,0,0.25,0.25

This selects a region a quarter of the width and a quarter of the height of the image, cropped from the top left corner (one sixteenth of the total number of pixels). It skips 0% of the X coordinates and 0% of the Y coordinates, and uses 25% of the image width and 25% of the image height.
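To see which sensor pixels an --roi value covers, multiply each fraction by the sensor dimensions. The sketch below assumes a 4056×3040 sensor (the HQ camera) and uses hypothetical helper names. libcamera may round or align the crop it actually applies, so treat the result as nominal.

```python
def roi_to_pixels(roi, sensor_width: int, sensor_height: int):
    """Convert a normalised --roi x,y,w,h into a pixel rectangle on the sensor."""
    x, y, w, h = roi
    if not all(0.0 <= v <= 1.0 for v in roi) or x + w > 1.0 or y + h > 1.0:
        raise ValueError("ROI must lie within 0..1 and inside the sensor")
    return (round(x * sensor_width), round(y * sensor_height),
            round(w * sensor_width), round(h * sensor_height))


def pixel_fraction(roi) -> float:
    """Fraction of the sensor's pixels covered by the ROI (width fraction x height fraction)."""
    return roi[2] * roi[3]


centre = (0.25, 0.25, 0.5, 0.5)
print(roi_to_pixels(centre, 4056, 3040), pixel_fraction(centre))
```

Because the fraction of pixels is the product of the width and height fractions, halving both dimensions keeps a quarter of the pixels.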

- --hdr (default: off) runs the camera in HDR mode. If passed without a value, assumes auto. Accepts one of the following values:

- off: disables HDR.

- auto: enables HDR on supported devices. Uses the sensor's built-in HDR mode if available. If the sensor does not have a built-in HDR mode, uses the onboard HDR mode, if available.

- single-exp: uses the onboard HDR mode, if available, even if the sensor has a built-in HDR mode. If the onboard HDR mode is not available, disables HDR.

rpicam-hello --hdr

Raspberry Pi 5 and later devices have an onboard HDR mode.

To check whether a sensor has a built-in HDR mode, pass this option together with --list-cameras.

Camera Control Options

The following options control the image processing and algorithms that affect the image quality of the camera.

- sharpness

Sets the image sharpness. Accepts values in the following ranges:

- 0.0 applies no sharpening

- Values greater than 0.0 but less than 1.0 apply less sharpening than the default value

- 1.0 applies the default sharpening amount

- Values greater than 1.0 apply additional sharpening

rpicam-hello --sharpness 0.0

- contrast

Specifies the image contrast. Values from the following ranges are accepted:

- 0.0 applies minimum contrast ratio

- Values greater than 0.0 but less than 1.0 apply a contrast that is less than the default value

- 1.0 applies the default contrast ratio

- Values greater than 1.0 apply additional contrast

rpicam-hello --contrast 0.0

- brightness

Specifies the image brightness, applied as an offset to all pixels in the output image. Accepts values in the following range:

- -1.0 applies minimum brightness (black)

- 0.0 applies standard brightness

- 1.0 applies maximum brightness (white)

See also ev.

rpicam-hello --brightness 1.0

- saturation

Specifies the image color saturation. Values from the following ranges are accepted:

- 0.0 applies minimum saturation (grayscale)

- Values greater than 0.0 but less than 1.0 apply saturation less than the default value

- 1.0 applies the default saturation

- Values greater than 1.0 apply additional saturation

rpicam-hello --saturation 0.6

- ev

Specifies the exposure value (EV) compensation for the image. Accepts a numeric value that adjusts the target value of the Automatic Exposure/Gain Control (AEC/AGC) algorithm along the following spectrum:

- -10.0 applies the minimum target value

- 0.0 applies standard target value

- 10.0 applies the maximum target value

rpicam-hello --ev 10.0

- shutter

Specifies the exposure time, in microseconds. Gain can still vary when you use this option. The camera's frame rate may be too high to allow the specified exposure time; for example, a frame rate of 1 fps cannot accommodate an exposure time of 10,000,000 microseconds. In that case, the sensor uses the maximum exposure time allowed by the frame rate.

For a list of minimum and maximum shutter times for official cameras, see camera hardware documentation. Values higher than the maximum will result in undefined behavior.

rpicam-hello --shutter 10000
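You can estimate the frame-rate limit with simple arithmetic: a frame cannot be exposed for longer than its frame period (1 / frame rate). The sketch below approximates that rule only. Real sensors also reserve some time in each frame, so the actual maximum is slightly lower. The function name is hypothetical.

```python
def effective_exposure_us(requested_us: float, framerate: float) -> float:
    """Cap the requested exposure at the frame period, 1 / framerate."""
    if framerate <= 0:
        raise ValueError("framerate must be positive")
    frame_period_us = 1_000_000 / framerate
    return min(requested_us, frame_period_us)


print(effective_exposure_us(10_000_000, 1))  # capped at the 1,000,000 us frame period
print(effective_exposure_us(10_000, 30))     # fits within a frame period of about 33,333 us
```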

- gain

Alias: --analoggain (has the same effect as --gain)

Sets the combined analog and digital gain. When the sensor driver can provide the requested gain, only analog gain is used. When the analog gain reaches its maximum, the ISP applies digital gain. Accepts a numeric value.

For the analogue gain limits of the official cameras, see the camera hardware documentation.

Sometimes, digital gain can exceed 1.0 even when the analogue gain limit is not exceeded. This can occur in the following situations:

Either of the colour gains drops below 1.0, which will cause the digital gain to settle to 1.0/min(red_gain,blue_gain). This keeps the total digital gain applied to any colour channel above 1.0 to avoid discolouration artefacts.

Slight variances during Automatic Exposure/Gain Control (AEC/AGC) changes.

rpicam-hello --gain 0.8
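The gain rules above can be modelled in a few lines. This simplified sketch is not the libcamera implementation. The maximum analogue gain is a parameter you must look up for your sensor. The two effects are kept as separate hypothetical helpers because this section does not describe how the ISP combines them. The sketch also assumes a requested gain of at least 1.0.

```python
def split_gain(total_gain: float, max_analogue_gain: float):
    """Split a requested --gain into (analogue, digital), preferring analogue gain."""
    if total_gain < 1.0:
        raise ValueError("this sketch assumes a total gain of at least 1.0")
    analogue = min(total_gain, max_analogue_gain)
    digital = total_gain / analogue  # remainder applied by the ISP
    return analogue, digital


def colour_digital_gain_floor(red_gain: float, blue_gain: float) -> float:
    """Digital gain that keeps every colour channel's total gain at or above 1.0."""
    return 1.0 / min(red_gain, blue_gain, 1.0)


print(split_gain(32.0, 16.0))               # analogue capped at 16.0, ISP adds 2.0
print(colour_digital_gain_floor(0.8, 1.5))  # about 1.25
```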

- metering default value: centre

Sets the metering mode of the Automatic Exposure/Gain Control (AEC/AGC) algorithm. Accepts the following values:

- centre - centre weighted metering

- spot - spot metering

- average - average or whole frame metering

- custom - custom metering mode defined in the camera tuning file

For more information on defining a custom metering mode and adjusting region weights in existing metering modes, see the Tuning guide for the Raspberry Pi cameras and libcamera.

rpicam-hello --metering centre

- exposure

Sets the exposure profile. Changing the exposure profile should not affect the image exposure. Instead, different modes adjust gain settings to achieve the same net result. Accepts the following values:

- sport: short exposure, larger gains

- normal: normal exposure, normal gains

- long: long exposure, smaller gains

You can edit exposure profiles using tuning files. For more information, see the Tuning guide for the Raspberry Pi cameras and libcamera.

rpicam-hello --exposure sport

- awb

Sets the Auto White Balance (AWB) mode. Accepts the following values:

| Mode | Color temperature |
|---|---|
| auto | 2500K ~ 8000K |
| incandescent | 2500K ~ 3000K |
| tungsten | 3000K ~ 3500K |
| fluorescent | 4000K ~ 4700K |
| indoor | 3000K ~ 5000K |
| daylight | 5500K ~ 6500K |
| cloudy | 7000K ~ 8500K |
| custom | A custom range defined in the tuning file |

These values are only approximate: values could vary according to the camera tuning.

No mode fully disables AWB. Instead, you can fix colour gains with awbgains.

For more information on AWB modes, including how to define a custom one, see the Tuning guide for the Raspberry Pi cameras and libcamera.

rpicam-hello --awb auto

- awbgains

Sets a fixed red and blue gain value to be used instead of an Auto White Balance (AWB) algorithm. Set non-zero values to disable AWB. Accepts comma-separated numeric input in the following format: <red_gain>,<blue_gain>

rpicam-jpeg -o test.jpg --awbgains 1.5,2.0

- denoise

Default value: auto

Sets the denoising mode. Accepts the following values:

- auto: Enables standard spatial denoise. Uses extra-fast colour denoise for video and high-quality colour denoise for images. Enables no extra colour denoise in the preview window.

- off: Disables spatial and colour denoise.

- cdn_off: Disables colour denoise.

- cdn_fast: Uses fast colour denoise.

- cdn_hq: Uses high-quality colour denoise. Not appropriate for video/viewfinder due to reduced throughput.

Even fast colour denoise can lower framerates. High quality colour denoise significantly lowers framerates.

rpicam-hello --denoise off

- tuning-file

Specifies the camera tuning file. The tuning file allows you to control many aspects of image processing, including the Automatic Exposure/Gain Control (AEC/AGC), Auto White Balance (AWB), colour shading correction, colour processing, denoising and more. Accepts a tuning file path as input. For more information about tuning files, see Tuning Files.

- autofocus-mode

Default value: default

Specifies the autofocus mode. Accepts the following values:

- default: puts the camera into continuous autofocus mode unless lens-position or autofocus-on-capture override the mode to manual

- manual: does not move the lens at all unless manually configured with lens-position

- auto: only moves the lens for an autofocus sweep when the camera starts or just before capture if autofocus-on-capture is also used

- continuous: adjusts the lens position automatically as the scene changes

This option is only supported for certain camera modules.

rpicam-hello --autofocus-mode auto

- autofocus-range

Default value: normal

Specifies the autofocus range. Accepts the following values:

- normal: focuses from reasonably close to infinity

- macro: focuses only on close objects, including the closest focal distances supported by the camera

- full: focus on the entire range, from the very closest objects to infinity

This option is only supported for certain camera modules.

rpicam-hello --autofocus-range normal

- autofocus-speed

Default value: normal

Specifies the autofocus speed. Accepts the following values:

- normal: changes the lens position at normal speed

- fast: changes the lens position quickly

This option is only supported for certain camera modules.

rpicam-hello --autofocus-speed normal

- autofocus-window

Specifies the autofocus window within the full field of the sensor. Accepts four decimal values, ranged 0 to 1, in the following format: <x>,<y>,<w>,<h>. Each of these values represents the percentage of available width and height as a decimal between 0 and 1.

These values define the following proportions:

<x>: X coordinates to skip before applying autofocus

<y>: Y coordinates to skip before applying autofocus

<w>: autofocus area width

<h>: autofocus area height

The default value uses the middle third of the output image in both dimensions (1/9 of the total image area).

Examples:

rpicam-hello --autofocus-window 0.25,0.25,0.5,0.5

This selects a window half the width and half the height of the image, cropped from the centre (a quarter of the total number of pixels). It skips the first 25% of X coordinates and the first 25% of Y coordinates, and uses 50% of the total image width and 50% of the total image height.

rpicam-hello --autofocus-window 0,0,0.25,0.25

This selects a window a quarter of the width and a quarter of the height of the image, cropped from the top left (one sixteenth of the total number of pixels). It skips 0% of X coordinates and 0% of Y coordinates, and uses 25% of the image width and 25% of the image height.

This option is only supported for certain camera modules.

- lens-position

Default value: default

Moves the lens to a fixed focal distance, normally given in dioptres (units of 1 / distance in metres). Accepts the following spectrum of values:

- 0.0: moves the lens to the "infinity" position

- Any other number: moves the lens to the 1 / number position. For example, the value 2.0 would focus at approximately 0.5m

- default: moves the lens to a default position that corresponds to the hyperfocal position of the lens

Lens calibration is imperfect, so different camera modules of the same model may vary.
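The dioptre arithmetic above works in both directions: distance = 1 / lens position, and lens position = 1 / distance. The sketch below uses hypothetical function names. Because lens calibration is imperfect, treat the results as nominal distances.

```python
import math


def focus_distance_m(lens_position: float) -> float:
    """Nominal focus distance in metres for a --lens-position value in dioptres."""
    if lens_position < 0:
        raise ValueError("lens position must be non-negative")
    return math.inf if lens_position == 0 else 1.0 / lens_position


def lens_position_for(distance_m: float) -> float:
    """Dioptre value to pass to --lens-position for a target distance in metres."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


print(focus_distance_m(2.0))    # 0.5
print(lens_position_for(0.25))  # 4.0
```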

- verbose

Alias: -v

Default value: 1

Sets the verbosity level. Accepts the following values:

- 0: no output

- 1: normal output

- 2: verbose output

rpicam-hello --verbose 1


Libcamera

Since Raspberry Pi OS Bullseye, the Raspberry Pi camera driver has switched from the legacy Raspicam stack to libcamera. libcamera is an open-source camera software stack (referred to below as the "driver"). It provides more powerful features and makes it easy for third-party developers to port and create their own camera drivers.

As of December 11, 2023, the Picamera2 library is officially provided for libcamera, making it easier to use the libcamera software stack from Python programs.

libcamera-hello

This is a simple "hello world" program that previews the camera and displays the camera feed on the screen.

- Usage example

libcamera-hello

This command previews the camera on the screen for about 5 seconds. Use the -t <duration> parameter to set the preview time, where <duration> is in milliseconds. A value of 0 keeps the preview running indefinitely. For example:

libcamera-hello -t 0

- Tuning file

The Raspberry Pi libcamera driver loads a tuning file for each camera module. The tuning file provides various parameters. When the camera is used, libcamera combines these parameters with its algorithms to process the image before outputting it to the preview. libcamera can only detect the sensor chip automatically, but the final image quality depends on the whole module. Tuning files therefore let you handle different modules flexibly and adjust them to improve image quality.

If the output image is not satisfactory with the default tuning file, you can load a custom tuning file to adjust the image. For example, the official NoIR camera may need different white balance parameters from the regular Raspberry Pi Camera V2. In that case, switch to the matching tuning file:

libcamera-hello --tuning-file /usr/share/libcamera/ipa/raspberrypi/imx219_noir.json

Users can copy the default tuning file and modify it according to their needs.
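One way to copy and modify a tuning file is with a short script. The sketch below rests on stated assumptions. It expects the version 2.0 JSON layout, in which "algorithms" is a list of single-key objects such as {"rpi.awb": {...}}. The algorithm block you name must exist in your file. The helper name is hypothetical.

```python
import json
from pathlib import Path


def copy_and_tweak_tuning(src: str, dst: str, algorithm: str, key: str, value) -> None:
    """Copy a tuning file to dst, setting one parameter in one algorithm block.

    Assumes the version 2.0 layout: data["algorithms"] is a list of
    single-key objects mapping an algorithm name to its parameters.
    """
    data = json.loads(Path(src).read_text())
    for entry in data["algorithms"]:
        if algorithm in entry:
            entry[algorithm][key] = value
            break
    else:
        raise KeyError(f"{algorithm} not found in {src}")
    Path(dst).write_text(json.dumps(data, indent=4))
```

For example, `copy_and_tweak_tuning("/usr/share/libcamera/ipa/raspberrypi/imx219_noir.json", "my_tuning.json", "rpi.sharpen", "strength", 0.5)` edits a copy of the file. Here "rpi.sharpen" and "strength" are placeholders; check the names in your own file. You would then load the copy with `libcamera-hello --tuning-file my_tuning.json`.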

Note: Tuning files also apply to the other libcamera commands; this is not repeated in the following sections.

- Preview window

Most libcamera commands display a preview window on the screen. You can customize the window's title text with the --info-text parameter, and use %directives to display camera parameters in it.

For example, with the HQ Camera, you can display the focus measure in the window title with --info-text "%focus":

libcamera-hello --info-text "focus %focus"

Note: For more information about parameter setting, please refer to the subsequent section on command parameter settings

libcamera-jpeg

libcamera-jpeg is a simple still-image capture program. Unlike the more feature-rich libcamera-still, its code is more concise, but it still provides many of the same image capture functions.

- Take a full-pixel JPEG image

libcamera-jpeg -o test.jpg

This command displays a preview window for about 5 seconds, then captures a full-resolution JPEG image and saves it as test.jpg.

The user can set the preview time with the -t parameter, and the resolution of the captured image can be set with --width and --height. For example:

libcamera-jpeg -o test.jpg -t 2000 --width 640 --height 480

- Exposure control

All libcamera commands allow users to set shutter speed and gain, for example:

libcamera-jpeg -o test.jpg -t 2000 --shutter 20000 --gain 1.5

This command captures an image with an exposure of 20ms and a gain of 1.5. The requested gain is applied first as analog gain inside the sensor. If it exceeds the maximum analog gain supported by the driver, the sensor's analog gain is set to its maximum and the remaining gain is applied as digital gain.

Note: Digital gain is applied by the ISP (Image Signal Processor), not by adjusting the sensor's registers directly. Digital gain is normally close to 1.0, except in the following three situations:

- The requested gain exceeds the analog gain range: when analog gain alone cannot provide the requested gain, digital gain makes up the difference.

- A color channel's gain is below 1: digital gain also compensates for color gains. When the gain of a color channel (red or blue) is less than 1, the system applies a uniform digital gain of 1/min(red_gain, blue_gain).

- Automatic exposure/gain control (AEC/AGC) changes: when AEC/AGC adjusts, digital gain may change briefly to smooth out brightness fluctuations. It usually returns to its normal value quickly.

The Raspberry Pi AEC/AGC algorithm lets applications specify exposure compensation, which makes the image brighter or darker by a given exposure value (EV). For example:

libcamera-jpeg --ev -0.5 -o darker.jpg

libcamera-jpeg --ev 0 -o normal.jpg

libcamera-jpeg --ev 0.5 -o brighter.jpg
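Exposure compensation is conventionally expressed in stops, where +1 EV doubles the exposure. Suppose the AEC/AGC applies --ev that way, as a factor of 2 raised to the EV value on its target. That is an assumption here, not a statement of the libcamera implementation. Under it, the three commands above aim for roughly 0.71×, 1× and 1.41× the default target. The function name is hypothetical.

```python
def ev_to_target_factor(ev: float) -> float:
    """Multiplier on the exposure target for an EV compensation value (1 EV = 1 stop)."""
    return 2.0 ** ev


for ev in (-0.5, 0.0, 0.5):
    print(f"--ev {ev:+.1f}: target x {ev_to_target_factor(ev):.3f}")
```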

libcamera-still

libcamera-still and libcamera-jpeg are very similar in functionality, but libcamera-still inherits more of the functionality originally provided by raspistill. For example, users can still use commands similar to the following to take a picture:

- Test command

libcamera-still -o test.jpg

- Encoder

libcamera-still supports several image file formats. It can encode PNG and BMP, and it can also save binary dumps of RGB or YUV pixels directly to a file with no encoding or image format. When you save raw RGB or YUV data, the program that reads the file must know its pixel layout.

libcamera-still -e png -o test.png

libcamera-still -e bmp -o test.bmp

libcamera-still -e rgb -o test.data

libcamera-still -e yuv420 -o test.data
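Reading a raw YUV420 dump requires knowing its layout. The sketch below assumes a planar I420 layout: the full Y plane, then U, then V, with each chroma plane subsampled 2×2. It also assumes even dimensions and no padding at the end of each row. If rows are padded to an aligned stride at your resolution, the offsets need adjusting. The function name is hypothetical.

```python
def split_yuv420(data: bytes, width: int, height: int):
    """Split a planar YUV420 (I420) dump into its Y, U and V planes."""
    if width % 2 or height % 2:
        raise ValueError("this sketch assumes even width and height")
    y_size = width * height
    c_size = (width // 2) * (height // 2)  # each chroma plane is subsampled 2x2
    if len(data) < y_size + 2 * c_size:
        raise ValueError("buffer too small for the given resolution")
    y = data[:y_size]
    u = data[y_size:y_size + c_size]
    v = data[y_size + c_size:y_size + 2 * c_size]
    return y, u, v
```

Take a file captured with libcamera-still -e yuv420 -o test.data --width 640 --height 480. You would pass its contents with width=640 and height=480. The Y plane alone is then a 640×480 greyscale image.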

Note: The saved image format is controlled by the -e parameter. If -e is not set, the format is taken from the output file name by default.
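Because -e yuv420 writes bare pixels, the reader has to know the layout. The following Python sketch assumes planar YUV420 (I420): a full-resolution Y plane followed by U and V planes at half resolution in each direction, with no row padding. Check the actual layout your build writes before relying on it; the function name and the 1920x1080 size in the usage comment are hypothetical.

```python
def split_yuv420(data: bytes, width: int, height: int) -> tuple[bytes, bytes, bytes]:
    """Split a planar YUV420 (I420) frame into its Y, U and V planes.

    Assumes even width/height and tightly packed rows (no stride padding).
    """
    y_size = width * height
    c_size = (width // 2) * (height // 2)  # each chroma plane is a quarter of Y
    expected = y_size + 2 * c_size
    if len(data) < expected:
        raise ValueError(f"need {expected} bytes, got {len(data)}")
    y = data[:y_size]
    u = data[y_size:y_size + c_size]
    v = data[y_size + c_size:expected]
    return y, u, v


# Usage sketch, assuming the image was captured with --width 1920 --height 1080:
# with open("test.data", "rb") as f:
#     y, u, v = split_yuv420(f.read(), 1920, 1080)
```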

- Raw image capture

A raw image is the image output directly by the image sensor, before any processing by the ISP or CPU. For color camera sensors, raw images are usually in Bayer format. Note that raw images are different from the unencoded RGB and YUV images described earlier: RGB and YUV images have already been processed by the ISP.

The command to take a raw image:

libcamera-still -r -o test.jpg

The raw image is saved in DNG (Adobe Digital Negative) format, which is compatible with most standard raw-processing programs such as dcraw or RawTherapee. It is written to a file with the same name but a .dng extension; for example, the command above saves test.dng and also produces the JPEG file test.jpg. DNG files contain metadata about the capture, such as white balance data and the ISP color matrix. Here is the metadata as displayed by the exiftool tool:

| Tag | Value |
|---|---|
| File Name | test.dng |
| Directory | . |
| File Size | 24 MB |
| File Modification Date/Time | 2021:08:17 16:36:18+01:00 |
| File Access Date/Time | 2021:08:17 16:36:18+01:00 |
| File Inode Change Date/Time | 2021:08:17 16:36:18+01:00 |
| File Permissions | rw-r--r-- |
| File Type | DNG |
| File Type Extension | dng |
| MIME Type | image/x-adobe-dng |
| Exif Byte Order | Little-endian (Intel, II) |
| Make | Raspberry Pi |
| Camera Model Name | /base/soc/i2c0mux/i2c@1/imx477@1a |
| Orientation | Horizontal (normal) |
| Software | libcamera-still |
| Subfile Type | Full-resolution Image |
| Image Width | 4056 |
| Image Height | 3040 |
| Bits Per Sample | 16 |
| Compression | Uncompressed |
| Photometric Interpretation | Color Filter Array |
| Samples Per Pixel | 1 |
| Planar Configuration | Chunky |
| CFA Repeat Pattern Dim | 2 2 |
| CFA Pattern 2 | 2 1 1 0 |
| Black Level Repeat Dim | 2 2 |
| Black Level | 256 256 256 256 |
| White Level | 4095 |
| DNG Version | 1.1.0.0 |
| DNG Backward Version | 1.0.0.0 |
| Unique Camera Model | /base/soc/i2c0mux/i2c@1/imx477@1a |
| Color Matrix 1 | 0.8545269369 -0.2382823821 -0.09044229197 -0.1890484985 1.063961506 0.1062747385 -0.01334283455 0.1440163847 0.2593136724 |
| As Shot Neutral | 0.4754476844 1 0.413686484 |
| Calibration Illuminant 1 | D65 |
| Strip Offsets | 0 |
| Strip Byte Counts | 0 |
| Exposure Time | 1/20 |
| ISO | 400 |
| CFA Pattern | [Blue,Green][Green,Red] |
| Image Size | 4056x3040 |
| Megapixels | 12.3 |
| Shutter Speed | 1/20 |
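Two groups of fields in this metadata are needed to interpret the raw pixel values: CFA Pattern 2 gives the Bayer layout (in the DNG/TIFF-EP convention 0 = red, 1 = green, 2 = blue), and Black Level / White Level define the valid sample range. A minimal Python sketch using the values from the dump above (the helper names are hypothetical):

```python
CFA_COLOURS = {0: "Red", 1: "Green", 2: "Blue"}  # DNG/TIFF-EP CFAPattern codes


def decode_cfa(pattern: list[int], repeat: tuple[int, int] = (2, 2)) -> list[list[str]]:
    """Turn a flat CFAPattern list into rows of colour names."""
    rows, cols = repeat
    return [[CFA_COLOURS[pattern[r * cols + c]] for c in range(cols)] for r in range(rows)]


def normalise(value: int, black: int = 256, white: int = 4095) -> float:
    """Map a raw sample to 0.0..1.0 using the DNG black and white levels."""
    return min(max((value - black) / (white - black), 0.0), 1.0)


print(decode_cfa([2, 1, 1, 0]))  # [['Blue', 'Green'], ['Green', 'Red']]
print(normalise(256), normalise(4095))  # 0.0 1.0
```

The decoded pattern agrees with the "CFA Pattern : [Blue,Green][Green,Red]" line that exiftool reports.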

- Ultra-long exposure

To take an ultra-long exposure image, disable AEC/AGC and automatic white balance; otherwise these algorithms make the program wait for many extra frames while they converge. They are disabled by setting explicit values for each of them, and the preview phase can be skipped with the --immediate option.

Here is the command to take an image with a 100 second exposure:

libcamera-still -o long_exposure.jpg --shutter 100000000 --gain 1 --awbgains 1,1 --immediate

Note: The maximum exposure times for several official cameras are shown in the table.

| Module | Maximum exposure time (s) |
|---|---|
| V1 (OV5647) | 6 |
| V2 (IMX219) | 11.76 |
| V3 (IMX708) | 112 |
| HQ (IMX477) | 670 |
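--shutter takes its value in microseconds, which is why the 100-second exposure above is written as 100000000. A Python sketch that converts seconds to a --shutter argument and checks it against the limits in this table (the limits are copied from the table; the helper is hypothetical):

```python
# Maximum exposure times in seconds, copied from the table above.
MAX_EXPOSURE_S = {"OV5647": 6, "IMX219": 11.76, "IMX708": 112, "IMX477": 670}


def shutter_arg(seconds: float, sensor: str) -> str:
    """Build a --shutter argument (in microseconds) after checking the limit."""
    limit = MAX_EXPOSURE_S[sensor]
    if seconds > limit:
        raise ValueError(f"{sensor} supports at most {limit} s, not {seconds} s")
    return f"--shutter {round(seconds * 1_000_000)}"


print(shutter_arg(100, "IMX477"))  # --shutter 100000000
```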

libcamera-vid

libcamera-vid is a video recording program that uses the Raspberry Pi's hardware H.264 encoder by default. While it runs, it shows a preview window on the screen and writes the encoded bitstream to the specified file. For example, to record a 10-second video:

libcamera-vid -t 10000 -o test.h264

If you want to view videos, you can use VLC to play them.

vlc test.h264

Note: The recorded file is a raw H.264 bitstream that is not packaged in any container format. You can use --save-pts to write the frame timestamps to a file, which makes it easier to convert the bitstream into other video formats.

libcamera-vid -o test.h264 --save-pts timestamps.txt

If you want to output an mkv file, you can use the following command:

mkvmerge -o test.mkv --timecodes 0:timestamps.txt test.h264
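The timestamp file is what lets mkvmerge assign a time to each frame. Assuming it follows mkvmerge's "timecode format v2" layout (a header line starting with #, then one timestamp in milliseconds per frame), the following Python sketch estimates the average frame rate of a recording; the function name is hypothetical.

```python
def average_fps(lines: list[str]) -> float:
    """Estimate the mean frame rate from timecode-v2 lines (ms per frame)."""
    stamps = [float(s) for s in (line.strip() for line in lines) if s and not s.startswith("#")]
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    # N timestamps span N - 1 frame intervals.
    return (len(stamps) - 1) * 1000.0 / (stamps[-1] - stamps[0])


sample = ["# timecode format v2", "0.000", "33.333", "66.667", "100.000"]
print(round(average_fps(sample), 2))  # 30.0

# Usage sketch:
# with open("timestamps.txt") as f:
#     print(average_fps(f.readlines()))
```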

- Encoder

Besides H.264, libcamera-vid supports MJPEG encoding as well as uncompressed, unformatted YUV420 output:

libcamera-vid -t 10000 --codec mjpeg -o test.mjpeg

libcamera-vid -t 10000 --codec yuv420 -o test.data

The output format is selected by the --codec option, not by the extension of the output file name.

The --segment parameter splits the output into multiple files, each covering the given duration in milliseconds. Setting a very short segment (such as 1 ms) puts every frame in its own file, which is a convenient way to split an MJPEG stream into individual JPEG files:

libcamera-vid -t 10000 --codec mjpeg --segment 1 -o test%05d.jpeg
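If an MJPEG file was recorded without --segment, it can also be split afterwards, assuming the stream is simply JPEG images stored back to back. The naive Python sketch below scans for the JPEG start-of-image (FF D8) and end-of-image (FF D9) markers. It does not parse JPEG segments, so a frame containing an embedded thumbnail with its own markers would confuse it; the function name is hypothetical.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_mjpeg(data: bytes) -> list[bytes]:
    """Naively split concatenated JPEG frames on SOI/EOI markers."""
    frames = []
    pos = 0
    while True:
        start = data.find(SOI, pos)
        if start < 0:
            break
        end = data.find(EOI, start + 2)
        if end < 0:
            break  # truncated last frame: ignore it
        frames.append(data[start:end + 2])
        pos = end + 2
    return frames


# Usage sketch: write frames as test00000.jpeg, test00001.jpeg, ...
# with open("test.mjpeg", "rb") as f:
#     for i, frame in enumerate(split_mjpeg(f.read())):
#         with open(f"test{i:05d}.jpeg", "wb") as out:
#             out.write(frame)
```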

- UDP video streaming transmission

To stream video over UDP, run the following on the Raspberry Pi (the server):

libcamera-vid -t 0 --inline -o udp://<ip-addr>:<port>

Where <ip-addr> needs to be replaced with the actual client IP address or multicast address.

On the client, run the following command to receive and display the video stream:

vlc udp://@:<port> :demux=h264

Note: The port needs to be consistent with the one you set on the Raspberry Pi.
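On the client side the stream is just H.264 bytes carried in UDP datagrams. If you want to save it rather than play it, a minimal Python receiver might look like this. It is a sketch, not a replacement for VLC: the port 5000 and the file name in the usage comment are hypothetical, and lost or reordered datagrams are not handled.

```python
import socket


def receive_udp(sock: socket.socket, out, max_packets: int) -> int:
    """Append the payload of up to max_packets datagrams to out; return bytes written."""
    total = 0
    for _ in range(max_packets):
        payload, _addr = sock.recvfrom(65536)  # one datagram per call
        out.write(payload)
        total += len(payload)
    return total


# Usage sketch: the port must match the one used on the Raspberry Pi, e.g.
# libcamera-vid -t 0 --inline -o udp://<ip-addr>:5000
# sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# sock.bind(("0.0.0.0", 5000))
# with open("received.h264", "wb") as f:
#     receive_udp(sock, f, 10000)
```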

- TCP video streaming transmission

To stream video over TCP, run the following on the Raspberry Pi (the server):

libcamera-vid -t 0 --inline --listen -o tcp://0.0.0.0:<port>

On the client, run either of the following commands:

vlc tcp/h264://<ip-addr-of-server>:<port>

ffplay tcp://<ip-addr-of-server>:<port> -vf "setpts=N/30" -fflags nobuffer -flags low_delay -framedrop
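With --listen, libcamera-vid waits for a client to connect and then sends the H.264 stream over that TCP connection. A minimal Python client that saves the stream to a file instead of playing it could be sketched as follows; the address, port and file name in the usage comment are hypothetical placeholders.

```python
import socket


def save_tcp_stream(host: str, port: int, path: str, chunk: int = 65536) -> int:
    """Connect to the server and copy the byte stream to path until it closes."""
    total = 0
    with socket.create_connection((host, port)) as sock, open(path, "wb") as out:
        while True:
            data = sock.recv(chunk)
            if not data:  # the server closed the connection
                break
            out.write(data)
            total += len(data)
    return total


# Usage sketch (replace the address and port with your own):
# save_tcp_stream("192.168.1.10", 8888, "received.h264")
```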

- RTSP video streaming transmission

For RTSP streaming, VLC is commonly used on the Raspberry Pi to serve the stream:

libcamera-vid -t 0 --inline -o - | cvlc stream:///dev/stdin --sout '#rtp{sdp=rtsp://:8554/stream1}' :demux=h264

On the playback side, run either of the following commands:

vlc rtsp://<ip-addr-of-server>:8554/stream1

ffplay rtsp://<ip-addr-of-server>:8554/stream1 -vf "setpts=N/30" -fflags nobuffer -flags low_delay -framedrop

In any of the commands above, the preview window on the Raspberry Pi can be turned off with -n (--nopreview). Also note the --inline parameter, which forces the stream header information (SPS/PPS) to be included with every I (intra) frame. This lets a client decode the stream correctly even if it missed the header at the start.
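To check whether a recorded stream really carries its headers inline, you can scan the H.264 Annex B byte stream for 00 00 01 start codes and read each NAL unit type from the low five bits of the following byte (7 is SPS, 8 is PPS, 5 is an IDR slice). The Python sketch below does this; it assumes that consecutive IDR NAL units belong to the same picture, and the function names are hypothetical.

```python
def nal_types(stream: bytes) -> list[int]:
    """Return the NAL unit types found after each 00 00 01 start code."""
    types = []
    i = stream.find(b"\x00\x00\x01")
    while i >= 0 and i + 3 < len(stream):
        types.append(stream[i + 3] & 0x1F)  # nal_unit_type is the low 5 bits
        i = stream.find(b"\x00\x00\x01", i + 3)
    return types


def idr_without_headers(stream: bytes) -> int:
    """Count IDR pictures not preceded by both an SPS and a PPS since the last one."""
    missing = 0
    have_sps = have_pps = False
    prev = None
    for t in nal_types(stream):
        if t == 7:
            have_sps = True
        elif t == 8:
            have_pps = True
        elif t == 5 and prev != 5:  # first slice of a new IDR picture
            if not (have_sps and have_pps):
                missing += 1
            have_sps = have_pps = False
        prev = t
    return missing
```

With --inline, idr_without_headers should return 0; without it, typically only the first IDR frame has headers.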

- High frame rate mode

When recording at a high frame rate with libcamera-vid (generally above 60 fps), pay attention to the following points to reduce dropped frames:

- Set the H.264 target level: the H.264 encoding level must be set to 4.2, using the --level 4.2 parameter.

- Turn off color denoising: when recording high frame rate video, color noise reduction must be turned off to save processing. Use the --denoise cdn_off parameter.

- Disable the preview window (for frame rates above 100 fps): if the frame rate exceeds 100 fps, it is recommended to disable the preview window to free up CPU resources and avoid dropped frames. Use the -n parameter.

- Enable forced turbo mode: add force_turbo=1 to /boot/config.txt (/boot/firmware/config.txt on Bookworm) so that the CPU clock is not throttled during recording.

- Lower the ISP output resolution: a lower resolution uses fewer resources. For example, set 1280x720 with --width 1280 --height 720, or go lower depending on the camera model.

- Overclock the GPU (Raspberry Pi 4 or later): add gpu_freq=550 (or higher) to /boot/config.txt (/boot/firmware/config.txt on Bookworm) to improve performance for high frame rates.

For example, the following command records a 1280x720 video at 120 fps:

libcamera-vid --level 4.2 --framerate 120 --width 1280 --height 720 --save-pts timestamp.pts -o video.264 -t 10000 --denoise cdn_off -n
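One way to see why level 4.2 is needed: H.264 levels cap the number of 16x16 macroblocks processed per second (MaxMBPS in Table A-1 of the H.264 specification: 245760 for levels 4.0 and 4.1, 522240 for level 4.2). A Python sketch of that check, considering only MaxMBPS and ignoring the other level limits:

```python
import math

# Maximum macroblock processing rate (MaxMBPS) from H.264 Table A-1.
MAX_MBPS = {"4.0": 245760, "4.1": 245760, "4.2": 522240}


def macroblocks_per_second(width: int, height: int, fps: float) -> float:
    """Macroblocks per second for a frame size rounded up to 16x16 blocks."""
    return math.ceil(width / 16) * math.ceil(height / 16) * fps


def fits_level(width: int, height: int, fps: float, level: str) -> bool:
    """True if the macroblock rate is within the level's MaxMBPS."""
    return macroblocks_per_second(width, height, fps) <= MAX_MBPS[level]


rate = macroblocks_per_second(1280, 720, 120)  # 80 * 45 * 120 = 432000
print(rate, fits_level(1280, 720, 120, "4.1"), fits_level(1280, 720, 120, "4.2"))
```

1280x720 at 120 fps needs 432000 macroblocks per second, which exceeds the level 4.1 limit but fits within level 4.2.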

libcamera-raw

libcamera-raw is similar to a video recording program, except that it records the Bayer-format data output directly by the sensor, i.e. raw image data. libcamera-raw does not show a preview window. For example, to record a 2-second raw clip:

libcamera-raw -t 2000 -o test.raw

The program dumps the raw frames directly, without any format information. It prints the pixel format and image size on the terminal, and you need this information to interpret the pixel data.
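For example, if the printed pixel format is SRGGB12_CSI2P (as for the HQ Camera's 12-bit modes), the data uses MIPI CSI-2 packed 12-bit layout: every two pixels occupy three bytes, the first two bytes holding the upper 8 bits of each pixel and the third byte holding both pixels' lower 4 bits. A Python sketch that unpacks one row, assuming that packing and an even width; any row padding (stride) must be skipped separately using the size the program prints. The function name is hypothetical.

```python
def unpack_raw12_row(row: bytes, width: int) -> list[int]:
    """Unpack one row of MIPI CSI-2 RAW12 data into 12-bit pixel values.

    Every 3 bytes hold 2 pixels: bytes 0 and 1 are the upper 8 bits of
    pixel 0 and pixel 1; byte 2 holds pixel 0's low nibble in bits 3..0
    and pixel 1's low nibble in bits 7..4. Assumes an even width.
    """
    if len(row) < width * 3 // 2:
        raise ValueError("row is shorter than width * 1.5 bytes")
    pixels = []
    for i in range(0, width * 3 // 2, 3):
        b0, b1, b2 = row[i], row[i + 1], row[i + 2]
        pixels.append((b0 << 4) | (b2 & 0x0F))
        pixels.append((b1 << 4) | (b2 >> 4))
    return pixels


# Two pixels 0xABC and 0x123 packed as AB 12 3C:
print([hex(p) for p in unpack_raw12_row(bytes([0xAB, 0x12, 0x3C]), 2)])  # ['0xabc', '0x123']
```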

By default, the program saves all raw frames into a single file, which is usually very large. It can be split with the --segment parameter:

libcamera-raw -t 2000 --segment 1 -o test%05d.raw

If the storage is fast enough (for example, an SSD), libcamera-raw can write official HQ Camera frames (approximately 18MB each) to disk at about 10 frames per second. To reach this speed, the program writes the raw frames unformatted; it cannot save them as DNG files the way libcamera-still does. To make sure no frames are dropped, you can lower the frame rate with --framerate.

libcamera-raw -t 5000 --width 4056 --height 3040 -o test.raw --framerate 8

General Command Setting Options

The following general options apply to all libcamera commands.

--help, -h

Prints the help information, listing the available options for the given program, and then exits.

--version

Prints the software versions of libcamera and libcamera-apps, and then exits.

--list-cameras

Lists the detected cameras and the sensor modes they support. For example:

Available cameras

-----------------

0 : imx219 [3280x2464] (/base/soc/i2c0mux/i2c@1/imx219@10)

Modes: 'SRGGB10_CSI2P' : 640x480 [206.65 fps - (1000, 752)/1280x960 crop]

1640x1232 [41.85 fps - (0, 0)/3280x2464 crop]

1920x1080 [47.57 fps - (680, 692)/1920x1080 crop]

3280x2464 [21.19 fps - (0, 0)/3280x2464 crop]

'SRGGB8' : 640x480 [206.65 fps - (1000, 752)/1280x960 crop]

1640x1232 [41.85 fps - (0, 0)/3280x2464 crop]

1920x1080 [47.57 fps - (680, 692)/1920x1080 crop]

3280x2464 [21.19 fps - (0, 0)/3280x2464 crop]

1 : imx477 [4056x3040] (/base/soc/i2c0mux/i2c@1/imx477@1a)

Modes: 'SRGGB10_CSI2P' : 1332x990 [120.05 fps - (696, 528)/2664x1980 crop]

'SRGGB12_CSI2P' : 2028x1080 [50.03 fps - (0, 440)/4056x2160 crop]

2028x1520 [40.01 fps - (0, 0)/4056x3040 crop]

4056x3040 [10.00 fps - (0, 0)/4056x3040 crop]

According to this output, the IMX219 camera has index 0 and the IMX477 camera has index 1. Use the corresponding index to select a camera.
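If a script needs to find a camera's index by sensor name, the camera lines of this listing (for example "0 : imx219 [3280x2464] (...)") can be matched with a regular expression. A Python sketch, assuming the output format shown above; the function name is hypothetical.

```python
import re

# Matches camera lines such as "0 : imx219 [3280x2464] (/base/soc/...)";
# mode lines like "1640x1232 [41.85 fps ...]" do not match.
CAMERA_LINE = re.compile(r"^\s*(\d+)\s*:\s*(\w+)\s*\[\d+x\d+\]")


def camera_indices(listing: str) -> dict[str, int]:
    """Map sensor name to camera index from --list-cameras output."""
    result = {}
    for line in listing.splitlines():
        m = CAMERA_LINE.match(line)
        if m:
            result[m.group(2)] = int(m.group(1))
    return result
```

The returned index can then be passed to the --camera option.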

--camera

Selects the camera to use. The index can be found in the output of the --list-cameras command.

--config, -c

Camera parameters are normally set directly on the command line. The --config parameter instead specifies a settings file, and the parameters are read from that file.

For example: libcamera-hello -c config.txt

In the settings file, set one parameter per line in the format key=value:

timeout=99000

verbose=
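libcamera reads the settings file itself, but if you generate or inspect such files from your own scripts, the key=value format described above (with an empty value for a flag such as verbose) can be handled by a small Python sketch like this. Skipping blank and # comment lines is an assumption of this sketch, and the function names are hypothetical.

```python
def parse_config(text: str) -> dict[str, str]:
    """Parse key=value lines; a key with nothing after '=' maps to ''."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # assumption: skip blanks and comments
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {line!r}")
        options[key.strip()] = value.strip()
    return options


def to_command_line(options: dict[str, str]) -> list[str]:
    """Turn parsed options back into roughly equivalent --key [value] arguments."""
    args = []
    for key, value in options.items():
        args.append(f"--{key}")
        if value:
            args.append(value)
    return args
```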

--timeout, -t

The -t option sets how long the libcamera program runs. For video recording commands, it sets the recording duration; for image capture commands, it sets the preview time before the image is captured and saved.

If no timeout is set, the default is 5000 ms (5 seconds). If the timeout is set to 0, the program runs indefinitely.

For example: libcamera-hello -t 0

--preview, -p

The -p option sets the size and position of the preview window (where supported, the setting applies to both the X and DRM versions of the preview window). The format is --preview <x,y,w,h>, where x and y set the position of the preview window on the display, and w and h set its width and height.

The preview window settings do not affect the resolution or aspect ratio of the camera image. The program scales the preview image to fit the window while keeping the original aspect ratio.

For example: libcamera-hello -p 100,100,500,500
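The aspect-preserving fit described above works like letterboxing: the image is scaled by the smaller of the two width and height ratios and centered in the window. A Python sketch of that calculation; the exact rounding used by the preview may differ, and the function name is hypothetical.

```python
def fit_preview(img_w: int, img_h: int, win_w: int, win_h: int) -> tuple[int, int, int, int]:
    """Return (x, y, w, h) of the largest aspect-preserving fit inside the window.

    x and y are offsets relative to the window's top-left corner.
    """
    scale = min(win_w / img_w, win_h / img_h)
    w, h = round(img_w * scale), round(img_h * scale)
    return (win_w - w) // 2, (win_h - h) // 2, w, h


# A 4:3 image (640x480) in the 500x500 window from -p 100,100,500,500
print(fit_preview(640, 480, 500, 500))  # (0, 62, 500, 375)
```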

--fullscreen, -f

The -f option displays the preview window full screen, without a window border. Like -p, it does not affect the resolution or aspect ratio of the image, which is adapted automatically.

For example: libcamera-still -f -o test.jpg

--qt-preview

Uses a preview window based on the Qt framework. This is not normally recommended, because the Qt preview does not use zero-copy buffer sharing or GPU acceleration and therefore consumes more resources. However, the Qt preview window does support X forwarding, which the default preview does not.